This tutorial depends on step-67.

This program was contributed by Martin Kronbichler, Peter Munch, and David Schneider. Many of the features shown here have been added to deal.II during and for the development of the deal.II-based, efficient, matrix-free finite-element library for high-dimensional partial differential equations hyper.deal (see https://github.com/hyperdeal/hyperdeal). For more details and for applications of the presented features in slightly different contexts (high-dimensional advection equation and Vlasov-Poisson equations) see the release paper [158].

This work was partly supported by the German Research Foundation (DFG) through the project "High-order discontinuous Galerkin for the exa-scale" (ExaDG) within the priority program "Software for Exascale Computing" (SPPEXA) and by the Bavarian government through the project "High-order matrix-free finite

element implementations with hybrid parallelization and improved data locality" within the KONWIHR program.

Introduction

This tutorial program solves the Euler equations of fluid dynamics, using an explicit time integrator with the matrix-free framework applied to a high-order discontinuous Galerkin discretization in space. The numerical approach used here is identical to that used in step-67, however, we utilize different advanced MatrixFree techniques to reach even a higher throughput.

The two main features of this tutorial are:

- the usage of shared-memory features from MPI-3.0 and

- the usage of cell-centric loops, which allow to write to the global vector only once and, therefore, are ideal for the usage of shared memory.

Further topics we discuss in this tutorial are the usage and benefits of the template argument VectorizedArrayType (instead of simply using VectorizedArray<Number>) as well as the possibility to pass lambdas to MatrixFree loops.

For details on the numerics, we refer to the documentation of step-67. We concentrate here only on the key differences.

Shared-memory and hybrid parallelization with MPI-3.0

Motivation

There exist many shared-memory libraries that are based on threads like TBB, OpenMP, or TaskFlow. Integrating such libraries into existing MPI programs allows one to use shared memory. However, these libraries come with an overhead for the programmer, since all parallelizable code sections have to be found and transformed according to the library used, including the difficulty when some third-party numerical library, like an iterative solver package, only relies on MPI.

Considering a purely MPI-parallelized FEM application, one can identify that the major time and memory benefit of using shared memory would come from accessing the part of the solution vector owned by the processes on the same compute node without the need to make explicit copies and buffering them. Fur this propose, MPI-3.0 provides shared-memory features based on so-called windows, where processes can directly access the data of the neighbors on the same shared-memory domain.

Basic MPI-3.0 commands

A few relevant MPI-3.0 commands are worth discussing in detail. A new MPI communicator comm_sm, which consists of processes from the communicator comm that have access to the same shared memory, can be created via:

MPI_Comm_split_type(

comm, MPI_COMM_TYPE_SHARED, rank, MPI_INFO_NULL, &comm_sm);

*braid_SplitCommworld & comm

The following code snippet shows the simplified allocation routines of shared memory for the value type T and the size local_size, as well as, how to query pointers to the data belonging to processes in the same shared-memory domain:

MPI_Win win;

T * data_this;

std::vector<T *> data_others;

MPI_Info info;

MPI_Info_create(&info);

MPI_Info_set(info, "alloc_shared_noncontig", "true");

MPI_Win_allocate_shared(local_size * sizeof(T), sizeof(T), info, comm_sm, &data_this, &win);

data_others.resize(size_sm);

int disp_unit = 0;

MPI_Aint ssize = 0;

for (int i = 0; i < size_sm; ++i)

MPI_Win_shared_query(win, i, &ssize, &disp_unit, &data_others[i]);

Assert(data_this == data_others[rank_sm], ExcMessage(

"Something went wrong!"));

#define Assert(cond, exc)

Once the data is not needed anymore, the window has to be freed, which also frees the locally-owned data:

The commands mentioned in the last section are integrated into LinearAlgebra::distributed::Vector and are used to allocate shared memory if an optional (second) communicator is provided to the reinit()-functions.

For example, a vector can be set up with a partitioner (containing the global communicator) and a sub-communicator (containing the processes on the same compute node):

vec.reinit(partitioner, comm_sm);

Locally owned values and ghost values can be processed as usual. However, now users also have read access to the values of the shared-memory neighbors via the function:

const std::vector<ArrayView<const Number>> &

const std::vector< ArrayView< const Number > > & shared_vector_data() const

While LinearAlgebra::distributed::Vector provides the option to allocate shared memory and to access the values of shared memory of neighboring processes in a coordinated way, it does not actually exploit the benefits of the usage of shared memory itself.

The MatrixFree infrastructure, however, does:

- On the one hand, within the matrix-free loops MatrixFree::loop(), MatrixFree::cell_loop(), and MatrixFree::loop_cell_centric(), only ghost values that need to be updated are updated. Ghost values from shared-memory neighbors can be accessed directly, making buffering, i.e., copying of the values into the ghost region of a vector possibly redundant. To deal with possible race conditions, necessary synchronizations are performed within MatrixFree. In the case that values have to be buffered, values are copied directly from the neighboring shared-memory process, bypassing more expensive MPI operations based on

MPI_ISend and MPI_IRecv.

- On the other hand, classes like FEEvaluation and FEFaceEvaluation can read directly from the shared memory, so buffering the values is indeed not necessary in certain cases.

To be able to use the shared-memory capabilities of MatrixFree, MatrixFree has to be appropriately configured by providing the user-created sub-communicator:

data.reinit(mapping, dof_handler, constraint, quadrature, additional_data);

Cell-centric loops

Motivation: FCL vs. CCL

"Face-centric loops" (short FCL) visit cells and faces (inner and boundary ones) in separate loops. As a consequence, each entity is visited only once and fluxes between cells are evaluated only once. How to perform face-centric loops with the help of MatrixFree::loop() by providing three functions (one for the cell integrals, one for the inner, and one for the boundary faces) has been presented in step-59 and step-67.

"Cell-centric loops" (short CCL or ECL (for element-centric loops) in the hyper.deal release paper), in contrast, process a cell and in direct succession process all its faces (i.e., visit all faces twice). Their benefit has become clear for modern CPU processor architecture in the literature [135], although this kind of loop implies that fluxes have to be computed twice (for each side of an interior face). CCL has two primary advantages:

- On the one hand, entries in the solution vector are written exactly once back to main memory in the case of CCL, while in the case of FCL at least once despite of cache-efficient scheduling of cell and face loops-due to cache capacity misses.

- On the other hand, since each entry of the solution vector is accessed exactly once, no synchronization between threads is needed while accessing the solution vector in the case of CCL. This absence of race conditions during writing into the destination vector makes CCL particularly suitable for shared-memory parallelization.

One should also note that although fluxes are computed twice in the case of CCL, this does not automatically translate into doubling of the computation, since values already interpolated to the cell quadrature points can be interpolated to a face with a simple 1D interpolation.

Cell-centric loops and MatrixFree

For cell-centric loop implementations, the function MatrixFree::loop_cell_centric() can be used, to which the user can pass a function that should be performed on each cell.

To derive an appropriate function, which can be passed in MatrixFree::loop_cell_centric(), one might, in principle, transform/merge the following three functions, which can be passed to a MatrixFree::loop():

matrix_free.template loop<VectorType, VectorType>(

[&](const auto &data, auto &dst, const auto &src, const auto range) {

for (unsigned int cell = range.first; cell < range.second; ++cell)

{

phi.reinit(cell);

phi.gather_evaluate(src, cell_evaluation_flags);

phi.integrate_scatter(cell_evaluation_flags, dst);

}

},

[&](const auto &data, auto &dst, const auto &src, const auto range) {

for (unsigned int face = range.first; face < range.second; ++face)

{

phi_m.reinit(face);

phi_m.gather_evaluate(src, face_evaluation_flags);

phi_p.reinit(face);

phi_p.gather_evaluate(src, face_evaluation_flags);

phi_m.integrate_scatter(face_evaluation_flags, dst);

phi_p.integrate_scatter(face_evaluation_flags, dst);

}

},

[&](const auto &data, auto &dst, const auto &src, const auto range) {

for (unsigned int face = range.first; face < range.second; ++face)

{

phi_m.reinit(face);

phi_m.gather_evaluate(src, face_evaluation_flags);

phi_m.integrate_scatter(face_evaluation_flags, dst);

}

},

dst,

src);

in the following way:

matrix_free.template loop_cell_centric<VectorType, VectorType>(

[&](const auto &data, auto &dst, const auto &src, const auto range) {

for (unsigned int cell = range.first; cell < range.second; ++cell)

{

phi.reinit(cell);

phi.gather_evaluate(src, cell_evaluation_flags);

phi.integrate_scatter(cell_evaluation_flags, dst);

for (unsigned int face = 0; face < GeometryInfo<dim>::faces_per_cell; ++face)

{

if (data.get_faces_by_cells_boundary_id(cell, face)[0] ==

{

phi_m.reinit(cell, face);

phi_m.gather_evaluate(src, face_evaluation_flags);

phi_p.reinit(cell, face);

phi_p.gather_evaluate(src, face_evaluation_flags);

phi_m.integrate_scatter(face_evaluation_flags, dst);

}

else

{

phi_m.reinit(cell, face);

phi_m.gather_evaluate(src, face_evaluation_flags);

phi_m.integrate_scatter(face_evaluation_flags, dst);

}

}

}

},

dst,

src);

const types::boundary_id internal_face_boundary_id

It should be noted that FEFaceEvaluation is initialized now with two numbers, the cell number and the local face number. The given example only highlights how to transform face-centric loops into cell-centric loops and is by no means efficient, since data is read and written multiple times from and to the global vector as well as computations are performed redundantly. Below, we will discuss advanced techniques that target these issues.

To be able to use MatrixFree::loop_cell_centric(), following flags of MatrixFree::AdditionalData have to be enabled:

data.reinit(mapping, dof_handler, constraint, quadrature, additional_data);

bool hold_all_faces_to_owned_cells

UpdateFlags mapping_update_flags_inner_faces

UpdateFlags mapping_update_flags_boundary_faces

UpdateFlags mapping_update_flags_faces_by_cells

In particular, these flags enable that the internal data structures are set up for all faces of the cells.

Currently, cell-centric loops in deal.II only work for uniformly refined meshes and if no constraints are applied (which is the standard case DG is normally used).

Providing lambdas to MatrixFree loops

The examples given above have already used lambdas, which have been provided to matrix-free loops. The following short examples present how to transform functions between a version where a class and a pointer to one of its methods are used and a variant where lambdas are utilized.

In the following code, a class and a pointer to one of its methods, which should be interpreted as cell integral, are passed to MatrixFree::loop():

void

VectorType & dst,

const VectorType & src,

const std::pair<unsigned int, unsigned int> &range) const

{

for (unsigned int cell = range.first; cell < range.second; ++cell)

{

phi.reinit(cell);

phi.gather_evaluate(src, cell_evaluation_flags);

phi.integrate_scatter(cell_evaluation_flags, dst);

}

}

matrix_free.cell_loop(&Operator::local_apply_cell, this, dst, src);

However, it is also possible to pass an anonymous function via a lambda function with the same result:

matrix_free.template cell_loop<VectorType, VectorType>(

[&](const auto &data, auto &dst, const auto &src, const auto range) {

for (unsigned int cell = range.first; cell < range.second; ++cell)

{

phi.reinit(cell);

phi.gather_evaluate(src, cell_evaluation_flags);

phi.integrate_scatter(cell_evaluation_flags, dst);

}

},

dst,

src);

VectorizedArrayType

The class VectorizedArray<Number> is a key component to achieve the high node-level performance of the matrix-free algorithms in deal.II. It is a wrapper class around a short vector of n entries of type Number and maps arithmetic operations to appropriate single-instruction/multiple-data (SIMD) concepts by intrinsic functions. The length of the vector can be queried by VectorizedArray::size() and its underlying number type by VectorizedArray::value_type.

In the default case (VectorizedArray<Number>), the vector length is set at compile time of the library to match the highest value supported by the given processor architecture. However, also a second optional template argument can be specified as VectorizedArray<Number, size>, where size explicitly controls the vector length within the capabilities of a particular instruction set. A full list of supported vector lengths is presented in the following table:

| double | float | ISA |

VectorizedArray<double, 1> | VectorizedArray<float, 1> | (auto-vectorization) |

VectorizedArray<double, 2> | VectorizedArray<float, 4> | SSE2/AltiVec |

VectorizedArray<double, 4> | VectorizedArray<float, 8> | AVX/AVX2 |

VectorizedArray<double, 8> | VectorizedArray<float, 16> | AVX-512 |

This allows users to select the vector length/ISA and, as a consequence, the number of cells to be processed at once in matrix-free operator evaluations, possibly reducing the pressure on the caches, an severe issue for very high degrees (and dimensions).

A possible further reason to reduce the number of filled lanes is to simplify debugging: instead of having to look at, e.g., 8 cells, one can concentrate on a single cell.

The interface of VectorizedArray also enables the replacement by any type with a matching interface. Specifically, this prepares deal.II for the std::simd class that is planned to become part of the C++23 standard. The following table compares the deal.II-specific SIMD classes and the equivalent C++23 classes:

The commented program

Parameters and utility functions

The same includes as in step-67:

#include <deal.II/base/conditional_ostream.h>

#include <deal.II/base/function.h>

#include <deal.II/base/time_stepping.h>

#include <deal.II/base/timer.h>

#include <deal.II/base/utilities.h>

#include <deal.II/base/vectorization.h>

#include <deal.II/distributed/tria.h>

#include <deal.II/dofs/dof_handler.h>

#include <deal.II/fe/fe_dgq.h>

#include <deal.II/fe/fe_system.h>

#include <deal.II/grid/grid_generator.h>

#include <deal.II/grid/tria.h>

#include <deal.II/grid/tria_accessor.h>

#include <deal.II/grid/tria_iterator.h>

#include <deal.II/lac/affine_constraints.h>

#include <deal.II/lac/la_parallel_vector.h>

#include <deal.II/matrix_free/fe_evaluation.h>

#include <deal.II/matrix_free/matrix_free.h>

#include <deal.II/matrix_free/operators.h>

#include <deal.II/numerics/data_out.h>

#include <fstream>

#include <iomanip>

#include <iostream>

A new include for categorizing of cells according to their boundary IDs:

#include <deal.II/matrix_free/tools.h>

namespace Euler_DG

{

The same input parameters as in step-67:

constexpr unsigned int testcase = 1;

constexpr unsigned int dimension = 2;

constexpr unsigned int n_global_refinements = 2;

constexpr unsigned int fe_degree = 5;

constexpr unsigned int n_q_points_1d = fe_degree + 2;

This parameter specifies the size of the shared-memory group. Currently, only the values 1 and numbers::invalid_unsigned_int is possible, leading to the options that the memory features can be turned off or all processes having access to the same shared-memory domain are grouped together.

using Number = double;

static const unsigned int invalid_unsigned_int

Here, the type of the data structure is chosen for vectorization. In the default case, VectorizedArray<Number> is used, i.e., the highest instruction-set-architecture extension available on the given hardware with the maximum number of vector lanes is used. However, one might reduce the number of filled lanes, e.g., by writing using VectorizedArrayType = VectorizedArray<Number, 4> to only process 4 cells.

The following parameters have not changed:

constexpr double gamma = 1.4;

constexpr double final_time = testcase == 0 ? 10 : 2.0;

constexpr double output_tick = testcase == 0 ? 1 : 0.05;

const double courant_number = 0.15 /

std::pow(fe_degree, 1.5);

::VectorizedArray< Number, width > pow(const ::VectorizedArray< Number, width > &, const Number p)

Specify max number of time steps useful for performance studies.

Runge-Kutta-related functions copied from step-67 and slightly modified with the purpose to minimize global vector access:

enum LowStorageRungeKuttaScheme

{

stage_3_order_3,

stage_5_order_4,

stage_7_order_4,

stage_9_order_5,

};

constexpr LowStorageRungeKuttaScheme lsrk_scheme = stage_5_order_4;

class LowStorageRungeKuttaIntegrator

{

public:

LowStorageRungeKuttaIntegrator(const LowStorageRungeKuttaScheme scheme)

{

switch (scheme)

{

case stage_3_order_3:

break;

case stage_5_order_4:

break;

case stage_7_order_4:

break;

case stage_9_order_5:

break;

default:

}

rk_integrator(lsrk);

std::vector<double> ci;

rk_integrator.get_coefficients(ai, bi, ci);

}

unsigned int n_stages() const

{

return bi.size();

}

template <typename VectorType, typename Operator>

void perform_time_step(const Operator &pde_operator,

const double current_time,

const double time_step,

VectorType &solution,

VectorType &vec_ri,

VectorType &vec_ki) const

{

vec_ki.swap(solution);

double sum_previous_bi = 0;

for (unsigned int stage = 0; stage < bi.size(); ++stage)

{

const double c_i = stage == 0 ? 0 : sum_previous_bi + ai[stage - 1];

pde_operator.perform_stage(stage,

current_time + c_i * time_step,

bi[stage] * time_step,

(stage == bi.size() - 1 ?

0 :

ai[stage] * time_step),

(stage % 2 == 0 ? vec_ki : vec_ri),

(stage % 2 == 0 ? vec_ri : vec_ki),

solution);

if (stage > 0)

sum_previous_bi += bi[stage - 1];

}

}

private:

std::vector<double> bi;

std::vector<double> ai;

};

#define AssertDimension(dim1, dim2)

#define AssertThrow(cond, exc)

@ LOW_STORAGE_RK_STAGE9_ORDER5

@ LOW_STORAGE_RK_STAGE3_ORDER3

@ LOW_STORAGE_RK_STAGE7_ORDER4

@ LOW_STORAGE_RK_STAGE5_ORDER4

Euler-specific utility functions from step-67:

enum EulerNumericalFlux

{

lax_friedrichs_modified,

harten_lax_vanleer,

};

constexpr EulerNumericalFlux numerical_flux_type = lax_friedrichs_modified;

template <int dim>

class ExactSolution :

public Function<dim>

{

public:

ExactSolution(const double time)

{}

virtual double value(const

Point<dim> &p,

const unsigned

int component = 0) const override;

};

template <int dim>

double ExactSolution<dim>::value(

const Point<dim> &x,

const unsigned int component) const

{

switch (testcase)

{

case 0:

{

Assert(dim == 2, ExcNotImplemented());

const double beta = 5;

x0[0] = 5.;

const double radius_sqr =

(x - x0).

norm_square() - 2. * (x[0] - x0[0]) * t + t * t;

const double factor =

const double density_log = std::log2(

std::abs(1. - (gamma - 1.) / gamma * 0.25 * factor * factor));

const double density = std::exp2(density_log * (1. / (gamma - 1.)));

const double u = 1. - factor * (x[1] - x0[1]);

const double v = factor * (x[0] - t - x0[0]);

if (component == 0)

return density;

else if (component == 1)

return density * u;

else if (component == 2)

return density * v;

else

{

const double pressure =

std::exp2(density_log * (gamma / (gamma - 1.)));

return pressure / (

gamma - 1.) +

0.5 * (density * u * u + density * v * v);

}

}

case 1:

{

if (component == 0)

return 1.;

else if (component == 1)

return 0.4;

else if (component == dim + 1)

return 3.097857142857143;

else

return 0.;

}

default:

return 0.;

}

}

template <int dim, typename Number>

{

const Number inverse_density = Number(1.) / conserved_variables[0];

for (

unsigned int d = 0;

d < dim; ++

d)

velocity[d] = conserved_variables[1 + d] * inverse_density;

return velocity;

}

template <int dim, typename Number>

Number

{

euler_velocity<dim>(conserved_variables);

Number rho_u_dot_u = conserved_variables[1] * velocity[0];

for (

unsigned int d = 1;

d < dim; ++

d)

rho_u_dot_u += conserved_variables[1 + d] * velocity[d];

return (gamma - 1.) * (conserved_variables[dim + 1] - 0.5 * rho_u_dot_u);

}

template <int dim, typename Number>

{

euler_velocity<dim>(conserved_variables);

const Number pressure = euler_pressure<dim>(conserved_variables);

for (

unsigned int d = 0;

d < dim; ++

d)

{

flux[0][

d] = conserved_variables[1 +

d];

for (

unsigned int e = 0;

e < dim; ++

e)

flux[e + 1][d] = conserved_variables[e + 1] * velocity[d];

flux[

d + 1][

d] += pressure;

velocity[

d] * (conserved_variables[dim + 1] + pressure);

}

return flux;

}

template <int n_components, int dim, typename Number>

{

for (

unsigned int d = 0;

d < n_components; ++

d)

result[d] = matrix[d] * vector;

return result;

}

template <int dim, typename Number>

{

const auto velocity_m = euler_velocity<dim>(u_m);

const auto velocity_p = euler_velocity<dim>(u_p);

const auto pressure_m = euler_pressure<dim>(u_m);

const auto pressure_p = euler_pressure<dim>(u_p);

const auto flux_m = euler_flux<dim>(u_m);

const auto flux_p = euler_flux<dim>(u_p);

switch (numerical_flux_type)

{

case lax_friedrichs_modified:

{

gamma * pressure_p * (1. / u_p[0]),

velocity_m.norm_square() +

gamma * pressure_m * (1. / u_m[0])));

return 0.5 * (flux_m * normal + flux_p * normal) +

0.5 * lambda * (u_m - u_p);

}

case harten_lax_vanleer:

{

const auto avg_velocity_normal =

0.5 * ((velocity_m + velocity_p) * normal);

0.5 * gamma *

(pressure_p * (1. / u_p[0]) + pressure_m * (1. / u_m[0]))));

const Number s_pos =

std::max(Number(), avg_velocity_normal + avg_c);

const Number s_neg =

std::min(Number(), avg_velocity_normal - avg_c);

const Number inverse_s = Number(1.) / (s_pos - s_neg);

return inverse_s *

((s_pos * (flux_m * normal) - s_neg * (flux_p * normal)) -

s_pos * s_neg * (u_m - u_p));

}

default:

{

return {};

}

}

}

constexpr numbers::NumberTraits< Number >::real_type norm_square() const

std::enable_if_t< std::is_floating_point_v< T > &&std::is_floating_point_v< U >, typename ProductType< std::complex< T >, std::complex< U > >::type > operator*(const std::complex< T > &left, const std::complex< U > &right)

#define DEAL_II_ALWAYS_INLINE

#define DEAL_II_NOT_IMPLEMENTED()

SymmetricTensor< 2, dim, Number > e(const Tensor< 2, dim, Number > &F)

SymmetricTensor< 2, dim, Number > d(const Tensor< 2, dim, Number > &F, const Tensor< 2, dim, Number > &dF_dt)

long double gamma(const unsigned int n)

static constexpr double PI

::VectorizedArray< Number, width > exp(const ::VectorizedArray< Number, width > &)

::VectorizedArray< Number, width > min(const ::VectorizedArray< Number, width > &, const ::VectorizedArray< Number, width > &)

::VectorizedArray< Number, width > max(const ::VectorizedArray< Number, width > &, const ::VectorizedArray< Number, width > &)

::VectorizedArray< Number, width > sqrt(const ::VectorizedArray< Number, width > &)

::VectorizedArray< Number, width > abs(const ::VectorizedArray< Number, width > &)

General-purpose utility functions from step-67:

template <int dim, typename VectorizedArrayType>

VectorizedArrayType

const unsigned int component)

{

VectorizedArrayType result;

for (unsigned int v = 0; v < VectorizedArrayType::size(); ++v)

{

for (unsigned int d = 0; d < dim; ++d)

p[d] = p_vectorized[d][v];

result[v] = function.

value(p, component);

}

return result;

}

template <int dim, typename VectorizedArrayType, int n_components = dim + 2>

{

for (unsigned int v = 0; v < VectorizedArrayType::size(); ++v)

{

for (

unsigned int d = 0;

d < dim; ++

d)

p[d] = p_vectorized[d][v];

for (

unsigned int d = 0;

d < n_components; ++

d)

result[d][v] = function.

value(p, d);

}

return result;

}

const unsigned int n_components

virtual RangeNumberType value(const Point< dim > &p, const unsigned int component=0) const

Euler operator using a cell-centric loop and MPI-3.0 shared memory

Euler operator from step-67 with some changes as detailed below:

template <int dim, int degree, int n_points_1d>

class EulerOperator

{

public:

static constexpr unsigned int n_quadrature_points_1d = n_points_1d;

~EulerOperator();

void set_subsonic_outflow_boundary(

void

perform_stage(const unsigned int stage,

const Number cur_time,

const Number bi,

const Number ai,

std::array<double, 3> compute_errors(

double compute_cell_transport_speed(

void

private:

Abstract base class for mapping classes.

Instance of SubCommunicatorWrapper containing the sub-communicator, which we need to pass to MatrixFree::reinit() to be able to exploit MPI-3.0 shared-memory capabilities:

std::map<types::boundary_id, std::unique_ptr<Function<dim>>>

inflow_boundaries;

std::map<types::boundary_id, std::unique_ptr<Function<dim>>>

subsonic_outflow_boundaries;

std::set<types::boundary_id> wall_boundaries;

std::unique_ptr<Function<dim>> body_force;

};

New constructor, which creates a sub-communicator. The user can specify the size of the sub-communicator via the global parameter group_size. If the size is set to -1, all MPI processes of a shared-memory domain are combined to a group. The specified size is decisive for the benefit of the shared-memory capabilities of MatrixFree and, therefore, setting the size to -1 is a reasonable choice. By setting, the size to 1 users explicitly disable the MPI-3.0 shared-memory features of MatrixFree and rely completely on MPI-2.0 features, like MPI_Isend and MPI_Irecv.

template <int dim, int degree, int n_points_1d>

EulerOperator<dim, degree, n_points_1d>::EulerOperator(

TimerOutput &timer)

: timer(timer)

{

#ifdef DEAL_II_WITH_MPI

if (group_size == 1)

{

this->subcommunicator = MPI_COMM_SELF;

}

{

MPI_Comm_split_type(MPI_COMM_WORLD,

MPI_COMM_TYPE_SHARED,

rank,

MPI_INFO_NULL,

&subcommunicator);

}

else

{

}

#else

(void)subcommunicator;

(void)group_size;

this->subcommunicator = MPI_COMM_SELF;

#endif

}

unsigned int this_mpi_process(const MPI_Comm mpi_communicator)

New destructor responsible for freeing of the sub-communicator.

template <int dim, int degree, int n_points_1d>

EulerOperator<dim, degree, n_points_1d>::~EulerOperator()

{

#ifdef DEAL_II_WITH_MPI

if (this->subcommunicator != MPI_COMM_SELF)

MPI_Comm_free(&subcommunicator);

#endif

}

Modified reinit() function to set up the internal data structures in MatrixFree in a way that it is usable by the cell-centric loops and the MPI-3.0 shared-memory capabilities are used:

template <int dim, int degree, int n_points_1d>

void EulerOperator<dim, degree, n_points_1d>::reinit(

{

const std::vector<const DoFHandler<dim> *> dof_handlers = {&dof_handler};

const std::vector<const AffineConstraints<double> *> constraints = {&dummy};

const std::vector<Quadrature<1>> quadratures = {

QGauss<1>(n_q_points_1d),

additional_data;

additional_data.mapping_update_flags_inner_faces =

additional_data.mapping_update_flags_boundary_faces =

additional_data.tasks_parallel_scheme =

@ update_values

Shape function values.

@ update_normal_vectors

Normal vectors.

@ update_JxW_values

Transformed quadrature weights.

@ update_gradients

Shape function gradients.

@ update_quadrature_points

Transformed quadrature points.

UpdateFlags mapping_update_flags

Categorize cells so that all lanes have the same boundary IDs for each face. This is strictly not necessary, however, allows to write simpler code in EulerOperator::perform_stage() without masking, since it is guaranteed that all cells grouped together (in a VectorizedArray) have to perform exactly the same operation also on the faces.

Enable MPI-3.0 shared-memory capabilities within MatrixFree by providing the sub-communicator:

additional_data.communicator_sm = subcommunicator;

mapping, dof_handlers, constraints, quadratures, additional_data);

}

void reinit(const MappingType &mapping, const DoFHandler< dim > &dof_handler, const AffineConstraints< number2 > &constraint, const QuadratureType &quad, const AdditionalData &additional_data=AdditionalData())

The following function does an entire stage of a Runge–Kutta update and is, alongside the slightly modified setup, the heart of this tutorial compared to step-67.

In contrast to step-67, we are not executing the advection step (using MatrixFree::loop()) and the inverse mass-matrix step (using MatrixFree::cell_loop()) in sequence, but evaluate everything in one go inside of MatrixFree::loop_cell_centric(). This function expects a single function that is executed on each locally-owned (macro) cell as parameter so that we need to loop over all faces of that cell and perform needed integration steps on our own.

The following function contains to a large extent copies of the following functions from step-67 so that comments related the evaluation of the weak form are skipped here:

EulerDG::EulerOperator::local_apply_cellEulerDG::EulerOperator::local_apply_faceEulerDG::EulerOperator::local_apply_boundary_faceEulerDG::EulerOperator::local_apply_inverse_mass_matrix

template <int dim, int degree, int n_points_1d>

void EulerOperator<dim, degree, n_points_1d>::perform_stage(

const unsigned int stage,

const Number current_time,

const Number bi,

const Number ai,

{

for (auto &i : inflow_boundaries)

i.

second->set_time(current_time);

for (auto &i : subsonic_outflow_boundaries)

i.

second->set_time(current_time);

Run a cell-centric loop by calling MatrixFree::loop_cell_centric() and providing a lambda containing the effects of the cell, face and boundary-face integrals:

data.template loop_cell_centric<LinearAlgebra::distributed::Vector<Number>,

[&](const auto &data, auto &dst, const auto &src, const auto cell_range) {

degree,

n_points_1d,

dim + 2,

Number,

VectorizedArrayType>;

degree,

n_points_1d,

dim + 2,

Number,

VectorizedArrayType>;

FECellIntegral phi(data);

FECellIntegral phi_temp(data);

FEFaceIntegral phi_m(data, true);

FEFaceIntegral phi_p(data, false);

if (constant_function)

constant_body_force =

evaluate_function<dim, VectorizedArrayType, dim>(

dim,

n_points_1d,

n_points_1d,

VectorizedArrayType,

Number>

eval({},

data.get_shape_info().data[0].shape_gradients_collocation_eo,

{});

phi.n_components);

Loop over all cell batches:

for (unsigned int cell = cell_range.first; cell < cell_range.second;

++cell)

{

phi.reinit(cell);

if (ai != Number())

phi_temp.reinit(cell);

Read values from global vector and compute the values at the quadrature points:

if (ai != Number() && stage == 0)

{

phi.read_dof_values(src);

for (unsigned int i = 0;

i < phi.static_dofs_per_component * (dim + 2);

++i)

phi_temp.begin_dof_values()[i] = phi.begin_dof_values()[i];

}

else

{

}

Buffer the computed values at the quadrature points, since these are overridden by FEEvaluation::submit_value() in the next step, however, are needed later on for the face integrals:

for (unsigned int i = 0; i < phi.static_n_q_points * (dim + 2); ++i)

buffer[i] = phi.begin_values()[i];

Apply the cell integral at the cell quadrature points. See also the function EulerOperator::local_apply_cell() from step-67:

for (const unsigned int q : phi.quadrature_point_indices())

{

const auto w_q = phi.get_value(q);

phi.submit_gradient(euler_flux<dim>(w_q), q);

if (body_force.get() != nullptr)

{

constant_function ?

constant_body_force :

evaluate_function<dim, VectorizedArrayType, dim>(

*body_force, phi.quadrature_point(q));

for (unsigned int d = 0; d < dim; ++d)

forcing[d + 1] = w_q[0] * force[d];

for (unsigned int d = 0; d < dim; ++d)

forcing[dim + 1] += force[d] * w_q[d + 1];

phi.submit_value(forcing, q);

}

}

Test with the gradient of the test functions in the quadrature points. We skip the interpolation back to the support points of the element, since we first collect all contributions in the cell quadrature points and only perform the interpolation back as the final step.

{

auto *values_ptr = phi.begin_values();

auto *gradient_ptr = phi.begin_gradients();

for (unsigned int c = 0; c < dim + 2; ++c)

{

if (dim >= 1 && body_force.get() == nullptr)

eval.template gradients<0, false, false, dim>(gradient_ptr,

values_ptr);

else if (dim >= 1)

eval.template gradients<0, false, true, dim>(gradient_ptr,

values_ptr);

if (dim >= 2)

eval.template gradients<1, false, true, dim>(gradient_ptr +

1,

values_ptr);

if (dim >= 3)

eval.template gradients<2, false, true, dim>(gradient_ptr +

2,

values_ptr);

values_ptr += phi.static_n_q_points;

gradient_ptr += phi.static_n_q_points * dim;

}

}

Loop over all faces of the current cell:

for (unsigned int face = 0;

face < GeometryInfo<dim>::faces_per_cell;

++face)

{

Determine the boundary ID of the current face. Since we have set up MatrixFree in a way that all filled lanes have guaranteed the same boundary ID, we can select the boundary ID of the first lane.

const auto boundary_ids =

data.get_faces_by_cells_boundary_id(cell, face);

Assert(std::equal(boundary_ids.begin(),

boundary_ids.begin() +

data.n_active_entries_per_cell_batch(cell),

boundary_ids.begin()),

ExcMessage("Boundary IDs of lanes differ."));

const auto boundary_id = boundary_ids[0];

phi_m.reinit(cell, face);

Interpolate the values from the cell quadrature points to the quadrature points of the current face via a simple 1d interpolation:

n_points_1d - 1,

VectorizedArrayType>::

template interpolate_quadrature<true, false>(

dim + 2,

data.get_shape_info(),

buffer.data(),

phi_m.begin_values(),

face);

Check if the face is an internal or a boundary face and select a different code path based on this information:

Process and internal face. The following lines of code are a copy of the function EulerDG::EulerOperator::local_apply_face from step-67:

phi_p.reinit(cell, face);

for (const unsigned int q :

phi_m.quadrature_point_indices())

{

const auto numerical_flux =

euler_numerical_flux<dim>(phi_m.get_value(q),

phi_p.get_value(q),

phi_m.normal_vector(q));

phi_m.submit_value(-numerical_flux, q);

}

}

else

{

Process a boundary face. These following lines of code are a copy of the function EulerDG::EulerOperator::local_apply_boundary_face from step-67:

for (const unsigned int q :

phi_m.quadrature_point_indices())

{

const auto w_m = phi_m.get_value(q);

const auto normal = phi_m.normal_vector(q);

auto rho_u_dot_n = w_m[1] * normal[0];

for (unsigned int d = 1; d < dim; ++d)

rho_u_dot_n += w_m[1 + d] * normal[d];

bool at_outflow = false;

if (wall_boundaries.find(boundary_id) !=

wall_boundaries.end())

{

w_p[0] = w_m[0];

for (unsigned int d = 0; d < dim; ++d)

w_p[d + 1] =

w_m[d + 1] - 2. * rho_u_dot_n * normal[d];

w_p[dim + 1] = w_m[dim + 1];

}

else if (inflow_boundaries.find(boundary_id) !=

inflow_boundaries.end())

w_p = evaluate_function(

*inflow_boundaries.find(boundary_id)->second,

phi_m.quadrature_point(q));

else if (subsonic_outflow_boundaries.find(

boundary_id) !=

subsonic_outflow_boundaries.end())

{

w_p = w_m;

w_p[dim + 1] =

evaluate_function(*subsonic_outflow_boundaries

.find(boundary_id)

phi_m.quadrature_point(q),

dim + 1);

at_outflow = true;

}

else

ExcMessage(

"Unknown boundary id, did "

"you set a boundary condition for "

"this part of the domain boundary?"));

auto flux = euler_numerical_flux<dim>(w_m, w_p, normal);

if (at_outflow)

for (unsigned int v = 0;

v < VectorizedArrayType::size();

++v)

{

if (rho_u_dot_n[v] < -1e-12)

for (

unsigned int d = 0;

d < dim; ++

d)

flux[d + 1][v] = 0.;

}

phi_m.submit_value(-flux, q);

}

}

Evaluate local integrals related to cell by quadrature and add into cell contribution via a simple 1d interpolation:

n_points_1d - 1,

VectorizedArrayType>::

template interpolate_quadrature<false, true>(

dim + 2,

data.get_shape_info(),

phi_m.begin_values(),

phi.begin_values(),

face);

}

Apply inverse mass matrix in the cell quadrature points. See also the function EulerDG::EulerOperator::local_apply_inverse_mass_matrix() from step-67:

for (unsigned int q = 0; q < phi.static_n_q_points; ++q)

{

const auto factor = VectorizedArrayType(1.0) / phi.JxW(q);

for (unsigned int c = 0; c < dim + 2; ++c)

phi.begin_values()[c * phi.static_n_q_points + q] =

phi.begin_values()[c * phi.static_n_q_points + q] * factor;

}

Transform values from collocation space to the original Gauss-Lobatto space:

dim,

degree + 1,

n_points_1d>::do_backward(dim + 2,

data.get_shape_info()

.data[0]

.inverse_shape_values_eo,

false,

phi.begin_values(),

phi.begin_dof_values());

Perform Runge-Kutta update and write results back to global vectors:

if (ai == Number())

{

for (unsigned int q = 0; q < phi.static_dofs_per_cell; ++q)

phi.begin_dof_values()[q] = bi * phi.begin_dof_values()[q];

phi.distribute_local_to_global(solution);

}

else

{

if (stage != 0)

phi_temp.read_dof_values(solution);

for (unsigned int q = 0; q < phi.static_dofs_per_cell; ++q)

{

const auto K_i = phi.begin_dof_values()[q];

phi.begin_dof_values()[q] =

phi_temp.begin_dof_values()[q] + (ai * K_i);

phi_temp.begin_dof_values()[q] += bi * K_i;

}

phi.set_dof_values(dst);

phi_temp.set_dof_values(solution);

}

}

},

vec_ki,

current_ri,

true,

}

From here, the code of step-67 has not changed.

template <int dim, int degree, int n_points_1d>

void EulerOperator<dim, degree, n_points_1d>::initialize_vector(

{

data.initialize_dof_vector(vector);

}

template <int dim, int degree, int n_points_1d>

void EulerOperator<dim, degree, n_points_1d>::set_inflow_boundary(

{

AssertThrow(subsonic_outflow_boundaries.find(boundary_id) ==

subsonic_outflow_boundaries.end() &&

wall_boundaries.find(boundary_id) == wall_boundaries.end(),

ExcMessage("You already set the boundary with id " +

std::to_string(static_cast<int>(boundary_id)) +

" to another type of boundary before now setting " +

"it as inflow"));

ExcMessage("Expected function with dim+2 components"));

inflow_boundaries[boundary_id] = std::move(inflow_function);

}

template <int dim, int degree, int n_points_1d>

void EulerOperator<dim, degree, n_points_1d>::set_subsonic_outflow_boundary(

{

inflow_boundaries.end() &&

wall_boundaries.find(boundary_id) == wall_boundaries.end(),

ExcMessage("You already set the boundary with id " +

std::to_string(static_cast<int>(boundary_id)) +

" to another type of boundary before now setting " +

"it as subsonic outflow"));

ExcMessage("Expected function with dim+2 components"));

subsonic_outflow_boundaries[

boundary_id] = std::move(outflow_function);

}

template <int dim, int degree, int n_points_1d>

void EulerOperator<dim, degree, n_points_1d>::set_wall_boundary(

{

inflow_boundaries.end() &&

subsonic_outflow_boundaries.find(boundary_id) ==

subsonic_outflow_boundaries.end(),

ExcMessage("You already set the boundary with id " +

std::to_string(static_cast<int>(boundary_id)) +

" to another type of boundary before now setting " +

"it as wall boundary"));

wall_boundaries.insert(boundary_id);

}

template <int dim, int degree, int n_points_1d>

void EulerOperator<dim, degree, n_points_1d>::set_body_force(

{

this->body_force = std::move(body_force);

}

template <int dim, int degree, int n_points_1d>

void EulerOperator<dim, degree, n_points_1d>::project(

{

phi(data, 0, 1);

degree,

dim + 2,

Number,

VectorizedArrayType>

inverse(phi);

solution.zero_out_ghost_values();

for (unsigned int cell = 0; cell < data.n_cell_batches(); ++cell)

{

phi.reinit(cell);

for (const unsigned int q : phi.quadrature_point_indices())

phi.submit_dof_value(evaluate_function(function,

phi.quadrature_point(q)),

q);

inverse.transform_from_q_points_to_basis(dim + 2,

phi.begin_dof_values(),

phi.begin_dof_values());

phi.set_dof_values(solution);

}

}

template <int dim, int degree, int n_points_1d>

std::array<double, 3> EulerOperator<dim, degree, n_points_1d>::compute_errors(

{

double errors_squared[3] = {};

phi(data, 0, 0);

for (unsigned int cell = 0; cell < data.n_cell_batches(); ++cell)

{

phi.reinit(cell);

VectorizedArrayType local_errors_squared[3] = {};

for (const unsigned int q : phi.quadrature_point_indices())

{

const auto error =

evaluate_function(function, phi.quadrature_point(q)) -

phi.get_value(q);

const auto JxW = phi.JxW(q);

local_errors_squared[0] += error[0] * error[0] * JxW;

for (

unsigned int d = 0;

d < dim; ++

d)

local_errors_squared[1] += (error[d + 1] * error[d + 1]) * JxW;

local_errors_squared[2] += (error[dim + 1] * error[dim + 1]) * JxW;

}

for (unsigned int v = 0; v < data.n_active_entries_per_cell_batch(cell);

++v)

for (

unsigned int d = 0;

d < 3; ++

d)

errors_squared[d] += local_errors_squared[d][v];

}

std::array<double, 3> errors;

for (

unsigned int d = 0;

d < 3; ++

d)

return errors;

}

template <int dim, int degree, int n_points_1d>

double EulerOperator<dim, degree, n_points_1d>::compute_cell_transport_speed(

{

Number max_transport = 0;

phi(data, 0, 1);

for (unsigned int cell = 0; cell < data.n_cell_batches(); ++cell)

{

phi.reinit(cell);

VectorizedArrayType local_max = 0.;

for (const unsigned int q : phi.quadrature_point_indices())

{

const auto solution = phi.get_value(q);

const auto velocity = euler_velocity<dim>(solution);

const auto pressure = euler_pressure<dim>(solution);

const auto inverse_jacobian = phi.inverse_jacobian(q);

const auto convective_speed = inverse_jacobian * velocity;

VectorizedArrayType convective_limit = 0.;

for (

unsigned int d = 0;

d < dim; ++

d)

convective_limit =

const auto speed_of_sound =

std::sqrt(gamma * pressure * (1. / solution[0]));

for (

unsigned int d = 0;

d < dim; ++

d)

eigenvector[d] = 1.;

for (unsigned int i = 0; i < 5; ++i)

{

(inverse_jacobian * eigenvector);

VectorizedArrayType eigenvector_norm = 0.;

for (

unsigned int d = 0;

d < dim; ++

d)

eigenvector_norm =

eigenvector /= eigenvector_norm;

}

const auto jac_times_ev = inverse_jacobian * eigenvector;

(jac_times_ev * jac_times_ev) / (eigenvector * eigenvector));

local_max =

max_eigenvalue * speed_of_sound + convective_limit);

}

for (unsigned int v = 0; v < data.n_active_entries_per_cell_batch(cell);

++v)

max_transport =

std::max(max_transport, local_max[v]);

}

return max_transport;

}

template <int dim>

class EulerProblem

{

public:

EulerProblem();

private:

void make_grid_and_dofs();

void output_results(const unsigned int result_number);

#ifdef DEAL_II_WITH_P4EST

#else

#endif

EulerOperator<dim, fe_degree, n_q_points_1d> euler_operator;

double time, time_step;

{

public:

Postprocessor();

virtual void evaluate_vector_field(

virtual std::vector<std::string> get_names() const override;

virtual std::vector<

get_data_component_interpretation() const override;

virtual UpdateFlags get_needed_update_flags()

const override;

private:

const bool do_schlieren_plot;

};

};

template <int dim>

EulerProblem<dim>::Postprocessor::Postprocessor()

: do_schlieren_plot(dim == 2)

{}

template <int dim>

void EulerProblem<dim>::Postprocessor::evaluate_vector_field(

{

const unsigned int n_evaluation_points = inputs.solution_values.size();

if (do_schlieren_plot == true)

Assert(inputs.solution_gradients.size() == n_evaluation_points,

ExcInternalError());

Assert(computed_quantities.size() == n_evaluation_points,

ExcInternalError());

Assert(inputs.solution_values[0].size() == dim + 2, ExcInternalError());

Assert(computed_quantities[0].size() ==

dim + 2 + (do_schlieren_plot == true ? 1 : 0),

ExcInternalError());

for (unsigned int p = 0; p < n_evaluation_points; ++p)

{

for (

unsigned int d = 0;

d < dim + 2; ++

d)

solution[d] = inputs.solution_values[p](d);

const double density = solution[0];

const double pressure = euler_pressure<dim>(solution);

for (

unsigned int d = 0;

d < dim; ++

d)

computed_quantities[p](d) = velocity[

d];

computed_quantities[p](dim) = pressure;

computed_quantities[p](dim + 1) =

std::sqrt(gamma * pressure / density);

if (do_schlieren_plot == true)

computed_quantities[p](dim + 2) =

inputs.solution_gradients[p][0] * inputs.solution_gradients[p][0];

}

}

template <int dim>

std::vector<std::string> EulerProblem<dim>::Postprocessor::get_names() const

{

std::vector<std::string> names;

for (

unsigned int d = 0;

d < dim; ++

d)

names.emplace_back("velocity");

names.emplace_back("pressure");

names.emplace_back("speed_of_sound");

if (do_schlieren_plot == true)

names.emplace_back("schlieren_plot");

return names;

}

template <int dim>

std::vector<DataComponentInterpretation::DataComponentInterpretation>

EulerProblem<dim>::Postprocessor::get_data_component_interpretation() const

{

std::vector<DataComponentInterpretation::DataComponentInterpretation>

interpretation;

for (

unsigned int d = 0;

d < dim; ++

d)

interpretation.push_back(

if (do_schlieren_plot == true)

interpretation.push_back(

return interpretation;

}

template <int dim>

UpdateFlags EulerProblem<dim>::Postprocessor::get_needed_update_flags() const

{

if (do_schlieren_plot == true)

else

}

template <int dim>

EulerProblem<dim>::EulerProblem()

#ifdef DEAL_II_WITH_P4EST

#endif

, mapping(fe_degree)

, euler_operator(timer)

, time(0)

, time_step(0)

{}

template <int dim>

void EulerProblem<dim>::make_grid_and_dofs()

{

switch (testcase)

{

case 0:

{

for (

unsigned int d = 1;

d < dim; ++

d)

lower_left[d] = -5;

upper_right[0] = 10;

for (

unsigned int d = 1;

d < dim; ++

d)

upper_right[d] = 5;

lower_left,

upper_right);

euler_operator.set_inflow_boundary(

0, std::make_unique<ExactSolution<dim>>(0));

break;

}

case 1:

{

euler_operator.set_inflow_boundary(

0, std::make_unique<ExactSolution<dim>>(0));

euler_operator.set_subsonic_outflow_boundary(

1, std::make_unique<ExactSolution<dim>>(0));

euler_operator.set_wall_boundary(2);

euler_operator.set_wall_boundary(3);

if (dim == 3)

euler_operator.set_body_force(

std::vector<double>({0., 0., -0.2})));

break;

}

default:

}

dof_handler.distribute_dofs(fe);

euler_operator.reinit(mapping, dof_handler);

euler_operator.initialize_vector(solution);

std::locale s = pcout.get_stream().getloc();

pcout.get_stream().imbue(std::locale(""));

pcout << "Number of degrees of freedom: " << dof_handler.n_dofs()

<< " ( = " << (dim + 2) << " [vars] x "

<< std::endl;

pcout.get_stream().imbue(s);

}

template <int dim>

void EulerProblem<dim>::output_results(const unsigned int result_number)

{

const std::array<double, 3> errors =

euler_operator.compute_errors(ExactSolution<dim>(time), solution);

const std::string quantity_name = testcase == 0 ? "error" : "norm";

pcout << "Time:" << std::setw(8) << std::setprecision(3) << time

<< ", dt: " << std::setw(8) << std::setprecision(2) << time_step

<< ", " << quantity_name << " rho: " << std::setprecision(4)

<<

std::setw(10) << errors[0] << ", rho * u: " <<

std::setprecision(4)

<<

std::setw(10) << errors[1] << ", energy:" <<

std::setprecision(4)

<<

std::setw(10) << errors[2] <<

std::endl;

{

Postprocessor postprocessor;

data_out.set_flags(flags);

data_out.attach_dof_handler(dof_handler);

{

std::vector<std::string> names;

names.emplace_back("density");

for (

unsigned int d = 0;

d < dim; ++

d)

names.emplace_back("momentum");

names.emplace_back("energy");

std::vector<DataComponentInterpretation::DataComponentInterpretation>

interpretation;

interpretation.push_back(

for (

unsigned int d = 0;

d < dim; ++

d)

interpretation.push_back(

interpretation.push_back(

data_out.add_data_vector(dof_handler, solution, names, interpretation);

}

data_out.add_data_vector(solution, postprocessor);

if (testcase == 0 && dim == 2)

{

euler_operator.project(ExactSolution<dim>(time), reference);

reference.sadd(-1., 1, solution);

std::vector<std::string> names;

names.emplace_back("error_density");

for (

unsigned int d = 0;

d < dim; ++

d)

names.emplace_back("error_momentum");

names.emplace_back("error_energy");

std::vector<DataComponentInterpretation::DataComponentInterpretation>

interpretation;

interpretation.push_back(

for (

unsigned int d = 0;

d < dim; ++

d)

interpretation.push_back(

interpretation.push_back(

data_out.add_data_vector(dof_handler,

reference,

names,

interpretation);

}

data_out.add_data_vector(mpi_owner, "owner");

data_out.build_patches(mapping,

fe.degree,

const std::string filename =

data_out.write_vtu_in_parallel(filename, MPI_COMM_WORLD);

}

}

template <int dim>

void EulerProblem<dim>::run()

{

{

const unsigned int n_vect_number = VectorizedArrayType::size();

const unsigned int n_vect_bits = 8 * sizeof(Number) * n_vect_number;

pcout << "Running with "

<< " MPI processes" << std::endl;

pcout << "Vectorization over " << n_vect_number << ' '

<< (std::is_same_v<Number, double> ? "doubles" : "floats") << " = "

<< n_vect_bits << " bits ("

<< std::endl;

}

make_grid_and_dofs();

const LowStorageRungeKuttaIntegrator integrator(lsrk_scheme);

rk_register_1.

reinit(solution);

rk_register_2.reinit(solution);

euler_operator.project(ExactSolution<dim>(time), solution);

double min_vertex_distance = std::numeric_limits<double>::max();

if (cell->is_locally_owned())

min_vertex_distance =

std::

min(min_vertex_distance, cell->minimum_vertex_distance());

min_vertex_distance =

time_step = courant_number * integrator.n_stages() /

euler_operator.compute_cell_transport_speed(solution);

pcout << "Time step size: " << time_step

<< ", minimal h: " << min_vertex_distance

<< ", initial transport scaling: "

<< 1. / euler_operator.compute_cell_transport_speed(solution)

<< std::endl

<< std::endl;

output_results(0);

unsigned int timestep_number = 0;

while (time < final_time - 1e-12 && timestep_number < max_time_steps)

{

++timestep_number;

if (timestep_number % 5 == 0)

time_step =

courant_number * integrator.n_stages() /

euler_operator.compute_cell_transport_speed(solution), 3);

{

integrator.perform_time_step(euler_operator,

time,

time_step,

solution,

rk_register_1,

rk_register_2);

}

time += time_step;

if (static_cast<int>(time / output_tick) !=

static_cast<int>((time - time_step) / output_tick) ||

time >= final_time - 1e-12)

output_results(

static_cast<unsigned int>(std::round(time / output_tick)));

}

timer.print_wall_time_statistics(MPI_COMM_WORLD);

pcout << std::endl;

}

}

int main(int argc, char **argv)

{

using namespace Euler_DG;

try

{

EulerProblem<dimension> euler_problem;

euler_problem.run();

}

catch (std::exception &exc)

{

std::cerr << std::endl

<< std::endl

<< "----------------------------------------------------"

<< std::endl;

std::cerr << "Exception on processing: " << std::endl

<< exc.what() << std::endl

<< "Aborting!" << std::endl

<< "----------------------------------------------------"

<< std::endl;

return 1;

}

catch (...)

{

std::cerr << std::endl

<< std::endl

<< "----------------------------------------------------"

<< std::endl;

std::cerr << "Unknown exception!" << std::endl

<< "Aborting!" << std::endl

<< "----------------------------------------------------"

<< std::endl;

return 1;

}

return 0;

}

void reinit(const size_type size, const bool omit_zeroing_entries=false)

unsigned int n_active_cells() const

void refine_global(const unsigned int times=1)

virtual types::global_cell_index n_global_active_cells() const override

DataComponentInterpretation

@ component_is_part_of_vector

void hyper_rectangle(Triangulation< dim, spacedim > &tria, const Point< dim > &p1, const Point< dim > &p2, const bool colorize=false)

void channel_with_cylinder(Triangulation< dim > &tria, const double shell_region_width=0.03, const unsigned int n_shells=2, const double skewness=2.0, const bool colorize=false)

T sum(const T &t, const MPI_Comm mpi_communicator)

unsigned int n_mpi_processes(const MPI_Comm mpi_communicator)

T max(const T &t, const MPI_Comm mpi_communicator)

T min(const T &t, const MPI_Comm mpi_communicator)

std::string get_current_vectorization_level()

Number truncate_to_n_digits(const Number number, const unsigned int n_digits)

std::string int_to_string(const unsigned int value, const unsigned int digits=numbers::invalid_unsigned_int)

constexpr T pow(const T base, const int iexp)

void run(const Iterator &begin, const std_cxx20::type_identity_t< Iterator > &end, Worker worker, Copier copier, const ScratchData &sample_scratch_data, const CopyData &sample_copy_data, const unsigned int queue_length, const unsigned int chunk_size)

const ::parallel::distributed::Triangulation< dim, spacedim > * triangulation

bool write_higher_order_cells

Results

Running the program with the default settings on a machine with 40 processes produces the following output:

Running with 40

MPI processes

Vectorization over 8 doubles = 512 bits (AVX512)

Number of degrees of freedom: 27.648.000 ( = 5 [vars] x 25.600 [cells] x 216 [dofs/cell/var] )

Time step size: 0.000295952, minimal h: 0.0075, initial transport scaling: 0.00441179

Time: 0, dt: 0.0003, norm rho: 5.385e-16, rho * u: 1.916e-16, energy: 1.547e-15

+--------------------------------------+------------------+------------+------------------+

| Total wallclock time elapsed | 17.52s 10 | 17.52s | 17.52s 11 |

| | | |

| Section | no. calls | min time rank | avg time | max time rank |

+--------------------------------------+------------------+------------+------------------+

| compute errors | 1 | 0.009594s 16 | 0.009705s | 0.009819s 8 |

| compute transport speed | 22 | 0.1366s 0 | 0.1367s | 0.1368s 18 |

| output | 1 | 1.233s 0 | 1.233s | 1.233s 32 |

| rk time stepping total | 100 | 8.746s 35 | 8.746s | 8.746s 0 |

| rk_stage - integrals L_h | 500 | 8.742s 36 | 8.742s | 8.743s 2 |

+--------------------------------------+------------------+------------+------------------+

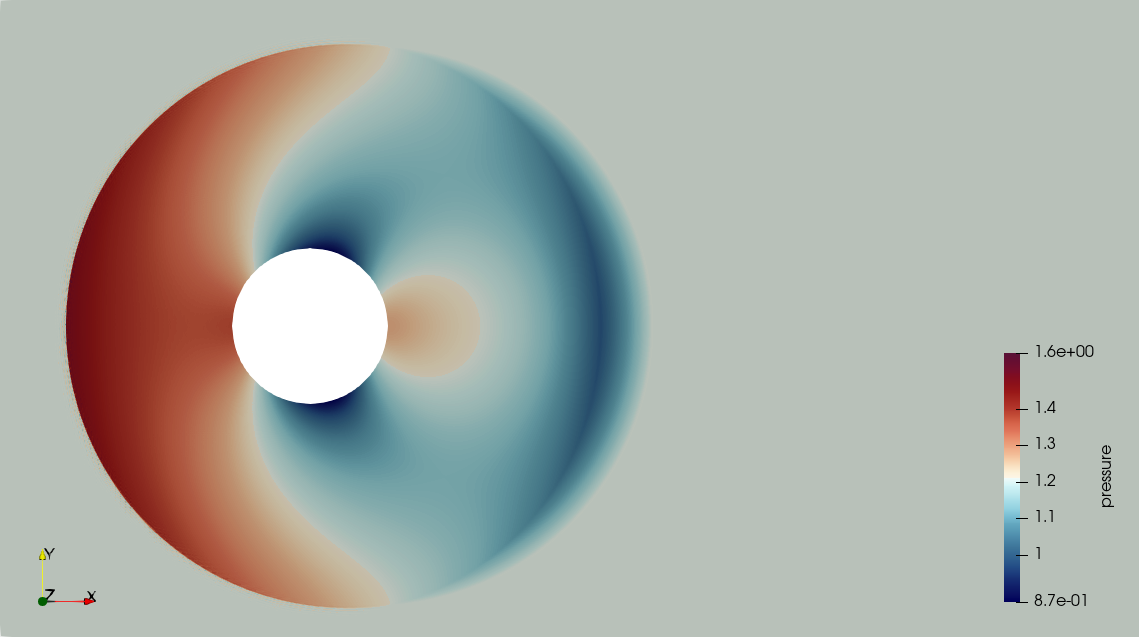

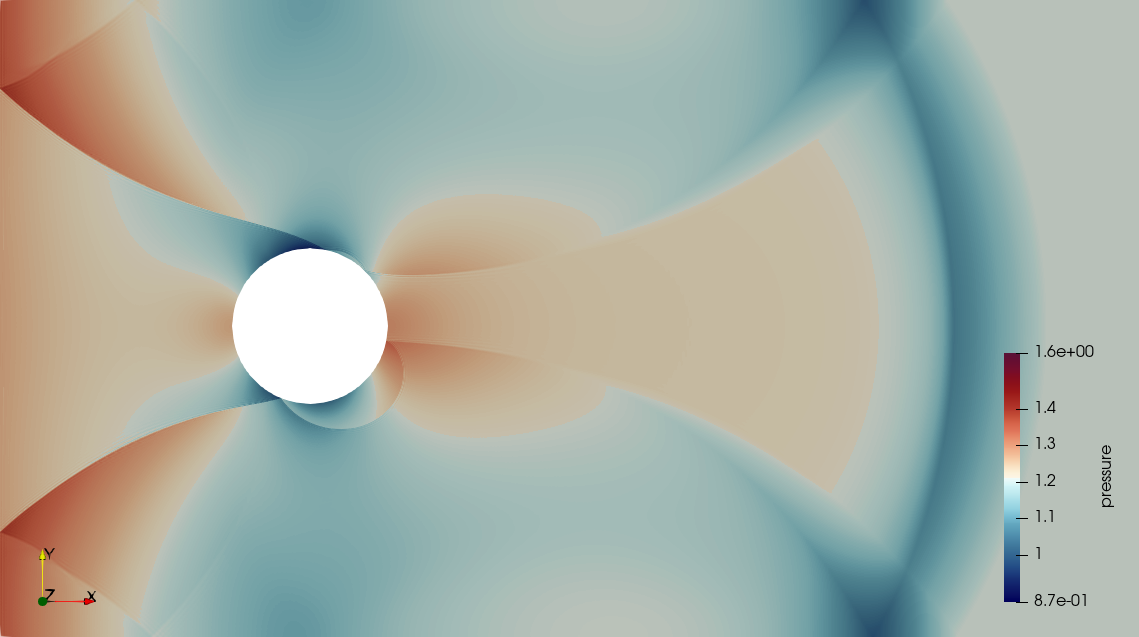

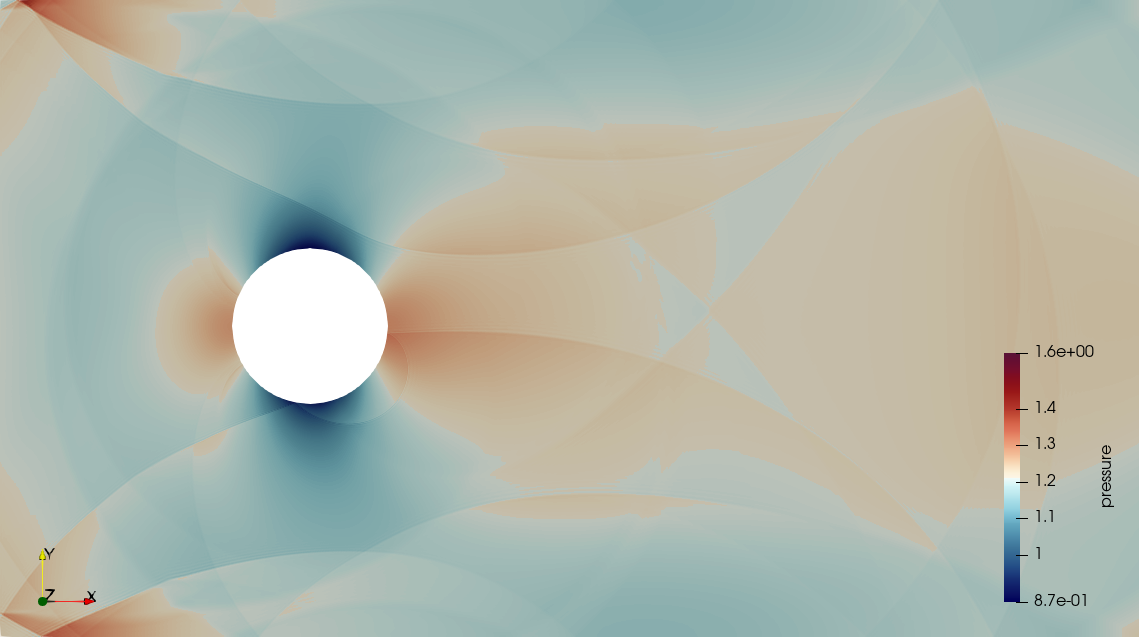

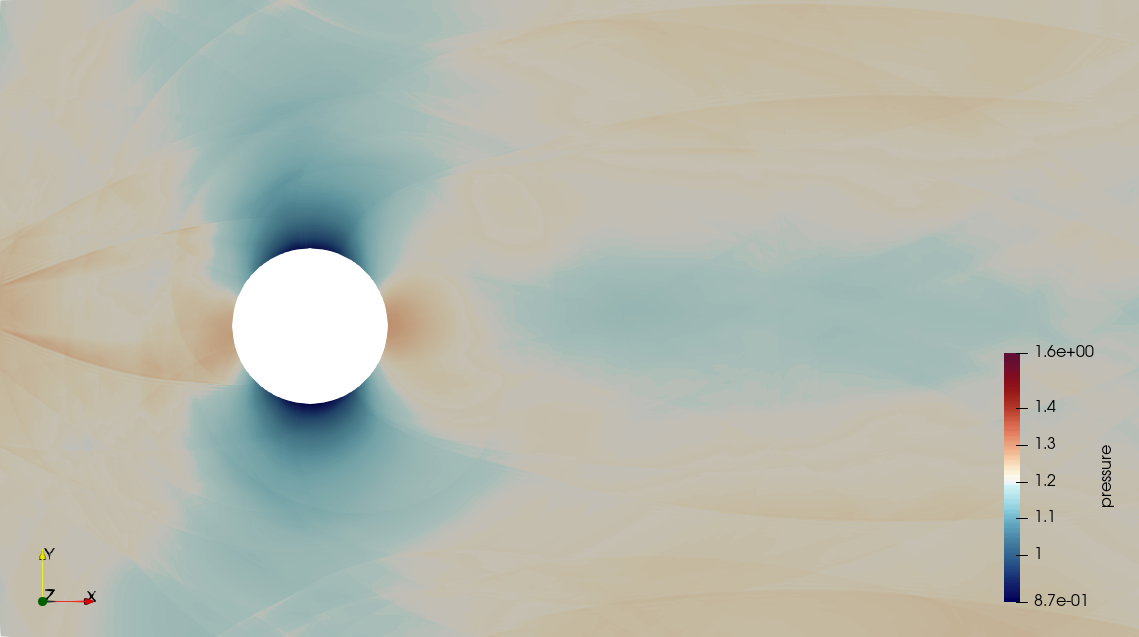

and the following visual output:

As a reference, the results of step-67 using FCL are:

Running with 40

MPI processes

Vectorization over 8 doubles = 512 bits (AVX512)

Number of degrees of freedom: 27.648.000 ( = 5 [vars] x 25.600 [cells] x 216 [dofs/cell/var] )

Time step size: 0.000295952, minimal h: 0.0075, initial transport scaling: 0.00441179

Time: 0, dt: 0.0003, norm rho: 5.385e-16, rho * u: 1.916e-16, energy: 1.547e-15

+-------------------------------------------+------------------+------------+------------------+

| Total wallclock time elapsed | 13.33s 0 | 13.34s | 13.35s 34 |

| | | |

| Section | no. calls | min time rank | avg time | max time rank |

+-------------------------------------------+------------------+------------+------------------+

| compute errors | 1 | 0.007977s 10 | 0.008053s | 0.008161s 30 |

| compute transport speed | 22 | 0.1228s 34 | 0.2227s | 0.3845s 0 |

| output | 1 | 1.255s 3 | 1.257s | 1.259s 27 |

| rk time stepping total | 100 | 11.15s 0 | 11.32s | 11.42s 34 |

| rk_stage - integrals L_h | 500 | 8.719s 10 | 8.932s | 9.196s 0 |

| rk_stage - inv mass + vec upd | 500 | 1.944s 0 | 2.377s | 2.55s 10 |

+-------------------------------------------+------------------+------------+------------------+

By the modifications shown in this tutorial, we were able to achieve a speedup of 27% for the Runge-Kutta stages.

Possibilities for extensions

The algorithms are easily extendable to higher dimensions: a high-dimensional advection operator based on cell-centric loops is part of the hyper.deal library. An extension of cell-centric loops to locally-refined meshes is more involved.

Extension to the compressible Navier-Stokes equations

The solver presented in this tutorial program can also be extended to the compressible Navier–Stokes equations by adding viscous terms, as also suggested in step-67. To keep as much of the performance obtained here despite the additional cost of elliptic terms, e.g. via an interior penalty method, that tutorial has proposed to switch the basis from FE_DGQ to FE_DGQHermite like in the step-59 tutorial program. The reasoning behind this switch is that in the case of FE_DGQ all values of neighboring cells (i.e., k+1 layers) are needed, whilst in the case of FE_DGQHermite only 2 layers, making the latter significantly more suitable for higher degrees. The additional layers have to be, on the one hand, loaded from main memory during flux computation and, one the other hand, have to be communicated. Using the shared-memory capabilities introduced in this tutorial, the second point can be eliminated on a single compute node or its influence can be reduced in a hybrid context.

Block Gauss-Seidel-like preconditioners

Cell-centric loops could be used to create block Gauss-Seidel preconditioners that are multiplicative within one process and additive over processes. These type of preconditioners use during flux computation, in contrast to Jacobi-type preconditioners, already updated values from neighboring cells. The following pseudo-code visualizes how this could in principal be achieved:

Vector<Number> visit_flags(data.n_cell_batches () + data.n_ghost_cell_batches ());

data.template loop_cell_centric<VectorType, VectorType>(

[&](const auto &data, auto &dst, const auto &src, const auto cell_range) {

for (unsigned int cell = cell_range.first; cell < cell_range.second; ++cell)

{

for (unsigned int face = 0; face < GeometryInfo<dim>::faces_per_cell; ++face)

{

const auto boundary_id = data.get_faces_by_cells_boundary_id(cell, face)[0];

{

phi_p.reinit(cell, face);

const auto flags = phi_p.read_cell_data(visit_flags);

const auto all_neighbors_have_been_updated =

flags().begin() + data.n_active_entries_per_cell_batch(cell) == 1;

if(all_neighbors_have_been_updated)

else

}

else

{

}

}

phi.set_cell_data(visit_flags, VectorizedArrayType(1.0));

}

},

dst,

src,

true,

For this purpose, one can exploit the cell-data vector capabilities of MatrixFree and the range-based iteration capabilities of VectorizedArray.

Please note that in the given example we process VectorizedArrayType::size() number of blocks, since each lane corresponds to one block. We consider blocks as updated if all blocks processed by a vector register have been updated. In the case of Cartesian meshes this is a reasonable approach, however, for general unstructured meshes this conservative approach might lead to a decrease in the efficiency of the preconditioner. A reduction of cells processed in parallel by explicitly reducing the number of lanes used by VectorizedArrayType might increase the quality of the preconditioner, but with the cost that each iteration might be more expensive. This dilemma leads us to a further "possibility for extension": vectorization within an element.

The plain program

#include <fstream>

#include <iomanip>

#include <iostream>

namespace Euler_DG

{

constexpr unsigned int testcase = 1;

constexpr unsigned int dimension = 2;

constexpr unsigned int n_global_refinements = 2;

constexpr unsigned int fe_degree = 5;

constexpr unsigned int n_q_points_1d = fe_degree + 2;

using Number = double;

constexpr double gamma = 1.4;

constexpr double final_time = testcase == 0 ? 10 : 2.0;

constexpr double output_tick = testcase == 0 ? 1 : 0.05;

const double courant_number = 0.15 /

std::pow(fe_degree, 1.5);

enum LowStorageRungeKuttaScheme

{

stage_3_order_3,

stage_5_order_4,

stage_7_order_4,

stage_9_order_5,

};

constexpr LowStorageRungeKuttaScheme lsrk_scheme = stage_5_order_4;

class LowStorageRungeKuttaIntegrator

{

public:

LowStorageRungeKuttaIntegrator(const LowStorageRungeKuttaScheme scheme)

{

switch (scheme)

{

case stage_3_order_3:

break;

case stage_5_order_4:

break;

case stage_7_order_4:

break;

case stage_9_order_5:

break;

default:

}

rk_integrator(lsrk);

std::vector<double> ci;

rk_integrator.get_coefficients(ai, bi, ci);

}

unsigned int n_stages() const

{

return bi.size();

}

template <typename VectorType, typename Operator>

void perform_time_step(const Operator &pde_operator,

const double current_time,

const double time_step,

VectorType &solution,

VectorType &vec_ri,

VectorType &vec_ki) const

{

vec_ki.swap(solution);

double sum_previous_bi = 0;

for (unsigned int stage = 0; stage < bi.size(); ++stage)

{

const double c_i = stage == 0 ? 0 : sum_previous_bi + ai[stage - 1];

pde_operator.perform_stage(stage,

current_time + c_i * time_step,

bi[stage] * time_step,

(stage == bi.size() - 1 ?

0 :

ai[stage] * time_step),

(stage % 2 == 0 ? vec_ki : vec_ri),

(stage % 2 == 0 ? vec_ri : vec_ki),

solution);

if (stage > 0)

sum_previous_bi += bi[stage - 1];

}

}

private:

std::vector<double> bi;

std::vector<double> ai;

};

enum EulerNumericalFlux

{

lax_friedrichs_modified,

harten_lax_vanleer,

};

constexpr EulerNumericalFlux numerical_flux_type = lax_friedrichs_modified;

template <int dim>

class ExactSolution :

public Function<dim>

{

public:

ExactSolution(const double time)

{}

const unsigned int component = 0) const override;

};

template <int dim>

double ExactSolution<dim>::value(

const Point<dim> &x,

const unsigned int component) const

{

switch (testcase)

{

case 0:

{

Assert(dim == 2, ExcNotImplemented());

const double beta = 5;

x0[0] = 5.;

const double radius_sqr =

(x - x0).norm_square() - 2. * (x[0] - x0[0]) * t + t * t;

const double factor =

const double density_log = std::log2(

std::abs(1. - (gamma - 1.) / gamma * 0.25 * factor * factor));

const double density = std::exp2(density_log * (1. / (gamma - 1.)));

const double u = 1. - factor * (x[1] - x0[1]);

const double v = factor * (x[0] - t - x0[0]);

if (component == 0)

return density;

else if (component == 1)

return density * u;

else if (component == 2)

return density * v;

else

{

const double pressure =

std::exp2(density_log * (gamma / (gamma - 1.)));

return pressure / (

gamma - 1.) +

0.5 * (density * u * u + density * v * v);

}

}

case 1:

{

if (component == 0)

return 1.;

else if (component == 1)

return 0.4;

else if (component == dim + 1)

return 3.097857142857143;

else

return 0.;

}

default:

return 0.;

}

}

template <int dim, typename Number>

{

const Number inverse_density = Number(1.) / conserved_variables[0];

for (

unsigned int d = 0;

d < dim; ++

d)

velocity[d] = conserved_variables[1 + d] * inverse_density;

return velocity;

}

template <int dim, typename Number>

Number

{

euler_velocity<dim>(conserved_variables);

Number rho_u_dot_u = conserved_variables[1] * velocity[0];

for (

unsigned int d = 1;

d < dim; ++

d)

rho_u_dot_u += conserved_variables[1 + d] * velocity[d];

return (gamma - 1.) * (conserved_variables[dim + 1] - 0.5 * rho_u_dot_u);

}

template <int dim, typename Number>

{

euler_velocity<dim>(conserved_variables);

const Number pressure = euler_pressure<dim>(conserved_variables);

for (

unsigned int d = 0;

d < dim; ++

d)

{

flux[0][

d] = conserved_variables[1 +

d];

for (

unsigned int e = 0;

e < dim; ++

e)

flux[e + 1][d] = conserved_variables[e + 1] * velocity[d];

flux[

d + 1][

d] += pressure;

velocity[

d] * (conserved_variables[dim + 1] + pressure);

}

return flux;

}

template <int n_components, int dim, typename Number>

{

for (

unsigned int d = 0;

d < n_components; ++

d)

result[d] = matrix[d] * vector;

return result;

}

template <int dim, typename Number>

{

const auto velocity_m = euler_velocity<dim>(u_m);

const auto velocity_p = euler_velocity<dim>(u_p);

const auto pressure_m = euler_pressure<dim>(u_m);

const auto pressure_p = euler_pressure<dim>(u_p);

const auto flux_m = euler_flux<dim>(u_m);

const auto flux_p = euler_flux<dim>(u_p);

switch (numerical_flux_type)

{

case lax_friedrichs_modified:

{

gamma * pressure_p * (1. / u_p[0]),

velocity_m.norm_square() +

gamma * pressure_m * (1. / u_m[0])));

return 0.5 * (flux_m * normal + flux_p * normal) +

0.5 * lambda * (u_m - u_p);

}

case harten_lax_vanleer:

{

const auto avg_velocity_normal =

0.5 * ((velocity_m + velocity_p) * normal);

0.5 * gamma *

(pressure_p * (1. / u_p[0]) + pressure_m * (1. / u_m[0]))));

const Number s_pos =

std::max(Number(), avg_velocity_normal + avg_c);

const Number s_neg =

std::min(Number(), avg_velocity_normal - avg_c);

const Number inverse_s = Number(1.) / (s_pos - s_neg);

return inverse_s *

((s_pos * (flux_m * normal) - s_neg * (flux_p * normal)) -

s_pos * s_neg * (u_m - u_p));

}

default:

{

return {};

}

}

}

template <int dim, typename VectorizedArrayType>

VectorizedArrayType

const unsigned int component)

{

VectorizedArrayType result;

for (unsigned int v = 0; v < VectorizedArrayType::size(); ++v)

{

for (

unsigned int d = 0;

d < dim; ++

d)

p[d] = p_vectorized[d][v];

result[v] = function.

value(p, component);

}

return result;

}

template <int dim, typename VectorizedArrayType, int n_components = dim + 2>

{

for (unsigned int v = 0; v < VectorizedArrayType::size(); ++v)

{

for (

unsigned int d = 0;

d < dim; ++

d)

p[d] = p_vectorized[d][v];

for (

unsigned int d = 0;

d < n_components; ++

d)

result[d][v] = function.

value(p, d);

}

return result;

}

template <int dim, int degree, int n_points_1d>

class EulerOperator

{

public:

static constexpr unsigned int n_quadrature_points_1d = n_points_1d;

~EulerOperator();

void set_subsonic_outflow_boundary(

void

perform_stage(const unsigned int stage,

const Number cur_time,

const Number bi,

const Number ai,

std::array<double, 3> compute_errors(

double compute_cell_transport_speed(

void

private:

std::map<types::boundary_id, std::unique_ptr<Function<dim>>>

inflow_boundaries;

std::map<types::boundary_id, std::unique_ptr<Function<dim>>>

subsonic_outflow_boundaries;

std::set<types::boundary_id> wall_boundaries;

std::unique_ptr<Function<dim>> body_force;

};

template <int dim, int degree, int n_points_1d>

EulerOperator<dim, degree, n_points_1d>::EulerOperator(

TimerOutput &timer)

: timer(timer)

{

#ifdef DEAL_II_WITH_MPI

if (group_size == 1)

{

this->subcommunicator = MPI_COMM_SELF;

}

{

MPI_Comm_split_type(MPI_COMM_WORLD,

MPI_COMM_TYPE_SHARED,

rank,

MPI_INFO_NULL,

&subcommunicator);

}

else

{

}

#else

(void)subcommunicator;

(void)group_size;

this->subcommunicator = MPI_COMM_SELF;

#endif

}

template <int dim, int degree, int n_points_1d>

EulerOperator<dim, degree, n_points_1d>::~EulerOperator()

{

#ifdef DEAL_II_WITH_MPI

if (this->subcommunicator != MPI_COMM_SELF)

MPI_Comm_free(&subcommunicator);

#endif

}

template <int dim, int degree, int n_points_1d>

void EulerOperator<dim, degree, n_points_1d>::reinit(

{

const std::vector<const DoFHandler<dim> *> dof_handlers = {&dof_handler};

const std::vector<const AffineConstraints<double> *> constraints = {&dummy};

const std::vector<Quadrature<1>> quadratures = {

QGauss<1>(n_q_points_1d),

additional_data;

additional_data);

data.reinit(

mapping, dof_handlers, constraints, quadratures, additional_data);

}

template <int dim, int degree, int n_points_1d>

void EulerOperator<dim, degree, n_points_1d>::perform_stage(

const unsigned int stage,

const Number current_time,

const Number bi,

const Number ai,

{

for (auto &i : inflow_boundaries)

i.

second->set_time(current_time);

for (auto &i : subsonic_outflow_boundaries)

i.

second->set_time(current_time);

data.template loop_cell_centric<LinearAlgebra::distributed::Vector<Number>,

[&](const auto &data, auto &dst, const auto &src, const auto cell_range) {

degree,

n_points_1d,

dim + 2,

Number,

VectorizedArrayType>;

degree,

n_points_1d,

dim + 2,

Number,

VectorizedArrayType>;

FECellIntegral phi(data);

FECellIntegral phi_temp(data);

FEFaceIntegral phi_m(data, true);

FEFaceIntegral phi_p(data, false);

if (constant_function)

constant_body_force =

evaluate_function<dim, VectorizedArrayType, dim>(

dim,

n_points_1d,

n_points_1d,

VectorizedArrayType,

Number>

eval({},

data.get_shape_info().data[0].shape_gradients_collocation_eo,

{});

phi.n_components);

for (unsigned int cell = cell_range.first; cell < cell_range.second;

++cell)

{

phi.reinit(cell);

if (ai != Number())

phi_temp.reinit(cell);

if (ai != Number() && stage == 0)

{

phi.read_dof_values(src);

for (unsigned int i = 0;

i < phi.static_dofs_per_component * (dim + 2);

++i)

phi_temp.begin_dof_values()[i] = phi.begin_dof_values()[i];

}

else

{

}

for (unsigned int i = 0; i < phi.static_n_q_points * (dim + 2); ++i)

buffer[i] = phi.begin_values()[i];

for (const unsigned int q : phi.quadrature_point_indices())

{

const auto w_q = phi.get_value(q);

phi.submit_gradient(euler_flux<dim>(w_q), q);

if (body_force.get() != nullptr)

{

constant_function ?

constant_body_force :

evaluate_function<dim, VectorizedArrayType, dim>(

*body_force, phi.quadrature_point(q));

for (

unsigned int d = 0;

d < dim; ++

d)

forcing[d + 1] = w_q[0] * force[d];

for (

unsigned int d = 0;

d < dim; ++

d)

forcing[dim + 1] += force[d] * w_q[d + 1];

phi.submit_value(forcing, q);

}

}

{

auto *values_ptr = phi.begin_values();

auto *gradient_ptr = phi.begin_gradients();

for (unsigned int c = 0; c < dim + 2; ++c)

{