|

Reference documentation for deal.II version 9.6.0

|

|

Reference documentation for deal.II version 9.6.0

|

#include <deal.II/lac/la_parallel_vector.h>

Public Types | |

| using | memory_space = MemorySpace |

| using | value_type = Number |

| using | pointer = value_type * |

| using | const_pointer = const value_type * |

| using | iterator = value_type * |

| using | const_iterator = const value_type * |

| using | reference = value_type & |

| using | const_reference = const value_type & |

| using | size_type = types::global_dof_index |

| using | real_type = typename numbers::NumberTraits<Number>::real_type |

Public Member Functions | |

| template<typename number > | |

| Vector & | operator= (const ::Vector< number > &v) |

| template<class Archive > | |

| void | serialize (Archive &ar, const unsigned int version) |

1: Basic Object-handling | |

| Vector () | |

| Vector (const Vector< Number, MemorySpace > &in_vector) | |

| Vector (Vector< Number, MemorySpace > &&in_vector) | |

| Vector (const size_type size) | |

| Vector (const IndexSet &local_range, const IndexSet &ghost_indices, const MPI_Comm communicator) | |

| Vector (const IndexSet &local_range, const MPI_Comm communicator) | |

| Vector (const std::shared_ptr< const Utilities::MPI::Partitioner > &partitioner) | |

| ~Vector () | |

| void | reinit (const size_type size, const bool omit_zeroing_entries=false) |

| template<typename Number2 > | |

| void | reinit (const Vector< Number2, MemorySpace > &in_vector, const bool omit_zeroing_entries=false) |

| void | reinit (const IndexSet &local_range, const IndexSet &ghost_indices, const MPI_Comm communicator) |

| void | reinit (const IndexSet &local_range, const MPI_Comm communicator) |

| void | reinit (const std::shared_ptr< const Utilities::MPI::Partitioner > &partitioner, const MPI_Comm comm_sm=MPI_COMM_SELF) |

| void | reinit (const std::shared_ptr< const Utilities::MPI::Partitioner > &partitioner, const bool make_ghosted, const MPI_Comm &comm_sm=MPI_COMM_SELF) |

| void | reinit (const types::global_dof_index local_size, const types::global_dof_index ghost_size, const MPI_Comm comm, const MPI_Comm comm_sm=MPI_COMM_SELF) |

| void | swap (Vector< Number, MemorySpace > &v) noexcept |

| Vector< Number, MemorySpace > & | operator= (Vector< Number, MemorySpace > &&in_vector) |

| Vector< Number, MemorySpace > & | operator= (const Vector< Number, MemorySpace > &in_vector) |

| template<typename Number2 > | |

| Vector< Number, MemorySpace > & | operator= (const Vector< Number2, MemorySpace > &in_vector) |

2: Parallel data exchange | |

| void | compress (VectorOperation::values operation) |

| void | update_ghost_values () const |

| void | compress_start (const unsigned int communication_channel=0, VectorOperation::values operation=VectorOperation::add) |

| void | compress_finish (VectorOperation::values operation) |

| void | update_ghost_values_start (const unsigned int communication_channel=0) const |

| void | update_ghost_values_finish () const |

| void | zero_out_ghost_values () const |

| bool | has_ghost_elements () const |

| template<typename Number2 > | |

| void | copy_locally_owned_data_from (const Vector< Number2, MemorySpace > &src) |

| template<typename MemorySpace2 > | |

| void | import_elements (const Vector< Number, MemorySpace2 > &src, VectorOperation::values operation) |

| template<typename MemorySpace2 > | |

| void | import (const Vector< Number, MemorySpace2 > &src, VectorOperation::values operation) |

3: Implementation of vector space operations | |

| Vector< Number, MemorySpace > & | operator*= (const Number factor) |

| Vector< Number, MemorySpace > & | operator/= (const Number factor) |

| Vector< Number, MemorySpace > & | operator+= (const Vector< Number, MemorySpace > &V) |

| Vector< Number, MemorySpace > & | operator-= (const Vector< Number, MemorySpace > &V) |

| void | import_elements (const LinearAlgebra::ReadWriteVector< Number > &V, const VectorOperation::values operation, const std::shared_ptr< const Utilities::MPI::CommunicationPatternBase > &communication_pattern={}) |

| void | import (const LinearAlgebra::ReadWriteVector< Number > &V, VectorOperation::values operation, std::shared_ptr< const Utilities::MPI::CommunicationPatternBase > communication_pattern={}) |

| Number | operator* (const Vector< Number, MemorySpace > &V) const |

| void | add (const Number a) |

| void | add (const Number a, const Vector< Number, MemorySpace > &V) |

| void | add (const Number a, const Vector< Number, MemorySpace > &V, const Number b, const Vector< Number, MemorySpace > &W) |

| void | add (const std::vector< size_type > &indices, const std::vector< Number > &values) |

| void | sadd (const Number s, const Number a, const Vector< Number, MemorySpace > &V) |

| void | scale (const Vector< Number, MemorySpace > &scaling_factors) |

| void | equ (const Number a, const Vector< Number, MemorySpace > &V) |

| real_type | l1_norm () const |

| real_type | l2_norm () const |

| real_type | norm_sqr () const |

| real_type | linfty_norm () const |

| Number | add_and_dot (const Number a, const Vector< Number, MemorySpace > &V, const Vector< Number, MemorySpace > &W) |

| virtual size_type | size () const override |

| ::IndexSet | locally_owned_elements () const |

| void | print (std::ostream &out, const unsigned int precision=3, const bool scientific=true, const bool across=true) const |

| std::size_t | memory_consumption () const |

4: Other vector operations | |

| Vector< Number, MemorySpace > & | operator= (const Number s) |

| template<typename OtherNumber > | |

| void | add (const std::vector< size_type > &indices, const ::Vector< OtherNumber > &values) |

| template<typename OtherNumber > | |

| void | add (const size_type n_elements, const size_type *indices, const OtherNumber *values) |

| void | sadd (const Number s, const Vector< Number, MemorySpace > &V) |

5: Entry access and local data representation | |

| size_type | locally_owned_size () const |

| bool | in_local_range (const size_type global_index) const |

| iterator | begin () |

| const_iterator | begin () const |

| iterator | end () |

| const_iterator | end () const |

| Number | operator() (const size_type global_index) const |

| Number & | operator() (const size_type global_index) |

| Number | operator[] (const size_type global_index) const |

| Number & | operator[] (const size_type global_index) |

| Number | local_element (const size_type local_index) const |

| Number & | local_element (const size_type local_index) |

| Number * | get_values () const |

| template<typename OtherNumber > | |

| void | extract_subvector_to (const std::vector< size_type > &indices, std::vector< OtherNumber > &values) const |

| virtual void | extract_subvector_to (const ArrayView< const types::global_dof_index > &indices, ArrayView< Number > &elements) const override |

| template<typename ForwardIterator , typename OutputIterator > | |

| void | extract_subvector_to (ForwardIterator indices_begin, const ForwardIterator indices_end, OutputIterator values_begin) const |

| bool | all_zero () const |

| Number | mean_value () const |

| real_type | lp_norm (const real_type p) const |

6: Mixed stuff | |

| MPI_Comm | get_mpi_communicator () const |

| const std::shared_ptr< const Utilities::MPI::Partitioner > & | get_partitioner () const |

| bool | partitioners_are_compatible (const Utilities::MPI::Partitioner &part) const |

| bool | partitioners_are_globally_compatible (const Utilities::MPI::Partitioner &part) const |

| void | set_ghost_state (const bool ghosted) const |

| const std::vector< ArrayView< const Number > > & | shared_vector_data () const |

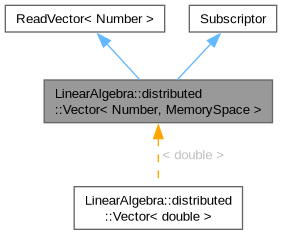

Subscriptor functionality | |

Classes derived from Subscriptor provide a facility to subscribe to this object. This is mostly used by the SmartPointer class. | |

| void | subscribe (std::atomic< bool > *const validity, const std::string &identifier="") const |

| void | unsubscribe (std::atomic< bool > *const validity, const std::string &identifier="") const |

| unsigned int | n_subscriptions () const |

| template<typename StreamType > | |

| void | list_subscribers (StreamType &stream) const |

| void | list_subscribers () const |

Static Public Member Functions | |

| static ::ExceptionBase & | ExcVectorTypeNotCompatible () |

| static ::ExceptionBase & | ExcNonMatchingElements (Number arg1, Number arg2, unsigned int arg3) |

| static ::ExceptionBase & | ExcAccessToNonLocalElement (size_type arg1, size_type arg2, size_type arg3, size_type arg4) |

| static ::ExceptionBase & | ExcInUse (int arg1, std::string arg2, std::string arg3) |

| static ::ExceptionBase & | ExcNoSubscriber (std::string arg1, std::string arg2) |

Private Types | |

| using | map_value_type = decltype(counter_map)::value_type |

| using | map_iterator = decltype(counter_map)::iterator |

Private Member Functions | |

| void | add_local (const Number a, const Vector< Number, MemorySpace > &V) |

| void | sadd_local (const Number s, const Number a, const Vector< Number, MemorySpace > &V) |

| template<typename Number2 > | |

| Number | inner_product_local (const Vector< Number2, MemorySpace > &V) const |

| real_type | norm_sqr_local () const |

| Number | mean_value_local () const |

| real_type | l1_norm_local () const |

| real_type | lp_norm_local (const real_type p) const |

| real_type | linfty_norm_local () const |

| Number | add_and_dot_local (const Number a, const Vector< Number, MemorySpace > &V, const Vector< Number, MemorySpace > &W) |

| void | clear_mpi_requests () |

| void | resize_val (const size_type new_allocated_size, const MPI_Comm comm_sm=MPI_COMM_SELF) |

| void | check_no_subscribers () const noexcept |

Private Attributes | |

| std::shared_ptr< const Utilities::MPI::Partitioner > | partitioner |

| size_type | allocated_size |

| mutable ::MemorySpace::MemorySpaceData< Number, MemorySpace > | data |

| std::shared_ptr<::parallel::internal::TBBPartitioner > | thread_loop_partitioner |

| mutable ::MemorySpace::MemorySpaceData< Number, MemorySpace > | import_data |

| bool | vector_is_ghosted |

| std::vector< MPI_Request > | compress_requests |

| std::vector< MPI_Request > | update_ghost_values_requests |

| std::mutex | mutex |

| MPI_Comm | comm_sm |

| std::atomic< unsigned int > | counter |

| std::map< std::string, unsigned int > | counter_map |

| std::vector< std::atomic< bool > * > | validity_pointers |

| const std::type_info * | object_info |

Friends | |

| template<typename Number2 , typename MemorySpace2 > | |

| class | Vector |

| template<typename Number2 > | |

| class | BlockVector |

Implementation of a parallel vector class. The design of this class is similar to the standard Vector class in deal.II, with the exception that storage is distributed with MPI.

The vector is designed for the following scheme of parallel partitioning:

[my_first_index,my_last_index). reinit (locally_owned, ghost_indices, communicator), and retained until the partitioning is changed. This allows for efficient parallel communication of indices. In particular, it stores the communication pattern, rather than having to compute it again for every communication. For more information on ghost vectors, see also the glossary entry on vectors with ghost elements. local_element(). Locally owned indices are placed first, [0, locally_owned_size()), and then all ghost indices follow after them contiguously, [locally_owned_size(), locally_owned_size()+get_partitioner()->n_ghost_indices()). Functions related to parallel functionality:

compress() goes through the data associated with ghost indices and communicates it to the owner process, which can then add it to the correct position. This can be used e.g. after having run an assembly routine involving ghosts that fill this vector. Note that the insert mode of compress() does not set the elements included in ghost entries but simply discards them, assuming that the owning processor has set them to the desired value already (See also the glossary entry on compress). update_ghost_values() function imports the data from the owning processor to the ghost indices in order to provide read access to the data associated with ghosts. This vector can take two different states with respect to ghost elements:

operator= (0.)), the vector does only allow writing into ghost elements but not reading from ghost elements. true exactly when ghost elements have been updated and false otherwise, irrespective of the actual number of ghost entries in the vector layout (for that information, use get_partitioner()->n_ghost_indices() instead). This vector uses the facilities of the class Vector<Number> for implementing the operations on the local range of the vector. In particular, it also inherits thread parallelism that splits most vector-vector operations into smaller chunks if the program uses multiple threads. This may or may not be desired when working also with MPI.

This vector class is based on two different number types for indexing. The so-called global index type encodes the overall size of the vector. Its type is types::global_dof_index. The largest possible value is 2^32-1 or approximately 4 billion in case 64 bit integers are disabled at configuration of deal.II (default case) or 2^64-1 or approximately 10^19 if 64 bit integers are enabled (see the glossary entry on When to use types::global_dof_index instead of unsigned int for further information).

The second relevant index type is the local index used within one MPI rank. As opposed to the global index, the implementation assumes 32-bit unsigned integers unconditionally. In other words, to actually use a vector with more than four billion entries, you need to use MPI with more than one rank (which in general is a safe assumption since four billion entries consume at least 16 GB of memory for floats or 32 GB of memory for doubles) and enable 64-bit indices. If more than 4 billion local elements are present, the implementation tries to detect that, which triggers an exception and aborts the code. Note, however, that the detection of overflow is tricky and the detection mechanism might fail in some circumstances. Therefore, it is strongly recommended to not rely on this class to automatically detect the unsupported case.

This vector class supports two different memory spaces: Host and Default. If the MemorySpace template argument is not specified, the memory space is Host and all the data is allocated on the CPU. When the memory space is Default, all the data is allocated on Kokkos' default memory space. That means that if Kokkos was configured with a GPU backend, the data is allocated on a GPU. The operations on the vector are performed on the chosen memory space. From the host, there are two methods to access the elements of the Vector when using the Default memory space:

The import method is a lot safer and will perform an MPI communication if necessary. Since an MPI communication may be performed, import needs to be called on all the processors.

In Host mode, this class allows to use MPI-3 shared-memory features by providing a separate MPI communicator that consists of processes on the same shared-memory domain. By calling vector.shared_vector_data();, users have read-only access to both locally-owned and ghost values of processes combined in the shared-memory communicator (comm_sm in reinit()).

For this to work, you have to call the constructor or one of the reinit() functions of this class with a non-default value for the comm_sm argument, where the argument corresponds to a communicator consisting of all processes on the same shared-memory domain. This kind of communicator can be created using the following code snippet:

Definition at line 249 of file la_parallel_vector.h.

| using LinearAlgebra::distributed::Vector< Number, MemorySpace >::memory_space = MemorySpace |

Definition at line 252 of file la_parallel_vector.h.

| using LinearAlgebra::distributed::Vector< Number, MemorySpace >::value_type = Number |

Definition at line 253 of file la_parallel_vector.h.

| using LinearAlgebra::distributed::Vector< Number, MemorySpace >::pointer = value_type * |

Definition at line 254 of file la_parallel_vector.h.

| using LinearAlgebra::distributed::Vector< Number, MemorySpace >::const_pointer = const value_type * |

Definition at line 255 of file la_parallel_vector.h.

| using LinearAlgebra::distributed::Vector< Number, MemorySpace >::iterator = value_type * |

Definition at line 256 of file la_parallel_vector.h.

| using LinearAlgebra::distributed::Vector< Number, MemorySpace >::const_iterator = const value_type * |

Definition at line 257 of file la_parallel_vector.h.

| using LinearAlgebra::distributed::Vector< Number, MemorySpace >::reference = value_type & |

Definition at line 258 of file la_parallel_vector.h.

| using LinearAlgebra::distributed::Vector< Number, MemorySpace >::const_reference = const value_type & |

Definition at line 259 of file la_parallel_vector.h.

| using LinearAlgebra::distributed::Vector< Number, MemorySpace >::size_type = types::global_dof_index |

Definition at line 260 of file la_parallel_vector.h.

| using LinearAlgebra::distributed::Vector< Number, MemorySpace >::real_type = typename numbers::NumberTraits<Number>::real_type |

Definition at line 261 of file la_parallel_vector.h.

|

privateinherited |

The data type used in counter_map.

Definition at line 229 of file subscriptor.h.

|

privateinherited |

The iterator type used in counter_map.

Definition at line 234 of file subscriptor.h.

| LinearAlgebra::distributed::Vector< Number, MemorySpace >::Vector | ( | ) |

Empty constructor.

| LinearAlgebra::distributed::Vector< Number, MemorySpace >::Vector | ( | const Vector< Number, MemorySpace > & | in_vector | ) |

Copy constructor. Uses the parallel partitioning of in_vector. It should be noted that this constructor automatically sets ghost values to zero. Call update_ghost_values() directly following construction if a ghosted vector is required.

| LinearAlgebra::distributed::Vector< Number, MemorySpace >::Vector | ( | Vector< Number, MemorySpace > && | in_vector | ) |

Move constructor. Uses the swap method.

| LinearAlgebra::distributed::Vector< Number, MemorySpace >::Vector | ( | const size_type | size | ) |

Construct a parallel vector of the given global size without any actual parallel distribution.

| LinearAlgebra::distributed::Vector< Number, MemorySpace >::Vector | ( | const IndexSet & | local_range, |

| const IndexSet & | ghost_indices, | ||

| const MPI_Comm | communicator ) |

Construct a parallel vector. The local range is specified by locally_owned_set (note that this must be a contiguous interval, multiple intervals are not possible). The IndexSet ghost_indices specifies ghost indices, i.e., indices which one might need to read data from or accumulate data from. It is allowed that the set of ghost indices also contains the local range, but it does not need to.

This function involves global communication, so it should only be called once for a given layout. Use the constructor with Vector<Number> argument to create additional vectors with the same parallel layout.

| LinearAlgebra::distributed::Vector< Number, MemorySpace >::Vector | ( | const IndexSet & | local_range, |

| const MPI_Comm | communicator ) |

Same constructor as above but without any ghost indices.

| LinearAlgebra::distributed::Vector< Number, MemorySpace >::Vector | ( | const std::shared_ptr< const Utilities::MPI::Partitioner > & | partitioner | ) |

Create the vector based on the parallel partitioning described in partitioner. The input argument is a shared pointer, which stores the partitioner data only once and share it between several vectors with the same layout.

| LinearAlgebra::distributed::Vector< Number, MemorySpace >::~Vector | ( | ) |

Destructor.

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::reinit | ( | const size_type | size, |

| const bool | omit_zeroing_entries = false ) |

Set the global size of the vector to size without any actual parallel distribution.

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::reinit | ( | const Vector< Number2, MemorySpace > & | in_vector, |

| const bool | omit_zeroing_entries = false ) |

Uses the parallel layout of the input vector in_vector and allocates memory for this vector. Recommended initialization function when several vectors with the same layout should be created.

If the flag omit_zeroing_entries is set to false, the memory will be initialized with zero, otherwise the memory will be untouched (and the user must make sure to fill it with reasonable data before using it).

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::reinit | ( | const IndexSet & | local_range, |

| const IndexSet & | ghost_indices, | ||

| const MPI_Comm | communicator ) |

Initialize the vector. The local range is specified by local_range (note that this must be a contiguous interval, multiple intervals are not possible). The IndexSet ghost_indices specifies ghost indices, i.e., indices which one might need to read data from or accumulate data from. It is allowed that the set of ghost indices also contains the local range, but it does not need to.

This function involves global communication, so it should only be called once for a given layout. Use the reinit function with Vector<Number> argument to create additional vectors with the same parallel layout.

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::reinit | ( | const IndexSet & | local_range, |

| const MPI_Comm | communicator ) |

Same as above, but without ghost entries.

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::reinit | ( | const std::shared_ptr< const Utilities::MPI::Partitioner > & | partitioner, |

| const MPI_Comm | comm_sm = MPI_COMM_SELF ) |

Initialize the vector given to the parallel partitioning described in partitioner. The input argument is a shared pointer, which stores the partitioner data only once and can be shared between several vectors with the same layout.

The optional argument comm_sm, which consists of processes on the same shared-memory domain, allows users have read-only access to both locally-owned and ghost values of processes combined in the shared-memory communicator. See the general documentation of this class for more information about this argument.

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::reinit | ( | const std::shared_ptr< const Utilities::MPI::Partitioner > & | partitioner, |

| const bool | make_ghosted, | ||

| const MPI_Comm & | comm_sm = MPI_COMM_SELF ) |

This function exists purely for reasons of compatibility with the PETScWrappers::MPI::Vector and TrilinosWrappers::MPI::Vector classes.

It calls the function above, and ignores the parameter make_ghosted.

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::reinit | ( | const types::global_dof_index | local_size, |

| const types::global_dof_index | ghost_size, | ||

| const MPI_Comm | comm, | ||

| const MPI_Comm | comm_sm = MPI_COMM_SELF ) |

Initialize vector with local_size locally-owned and ghost_size ghost degrees of freedoms.

The optional argument comm_sm, which consists of processes on the same shared-memory domain, allows users have read-only access to both locally-owned and ghost values of processes combined in the shared-memory communicator. See the general documentation of this class for more information about this argument.

ghost_size variable to an appropriate value provides memory space for the ghost data in a vector's memory allocation as and allows access to it via local_element(). However, the associated global indices must be handled externally in this case.

|

noexcept |

Swap the contents of this vector and the other vector v. One could do this operation with a temporary variable and copying over the data elements, but this function is significantly more efficient since it only swaps the pointers to the data of the two vectors and therefore does not need to allocate temporary storage and move data around.

This function is analogous to the swap function of all C++ standard containers. Also, there is a global function swap(u,v) that simply calls u.swap(v), again in analogy to standard functions.

| Vector< Number, MemorySpace > & LinearAlgebra::distributed::Vector< Number, MemorySpace >::operator= | ( | Vector< Number, MemorySpace > && | in_vector | ) |

Move assignment operator.

noexcept. | Vector< Number, MemorySpace > & LinearAlgebra::distributed::Vector< Number, MemorySpace >::operator= | ( | const Vector< Number, MemorySpace > & | in_vector | ) |

Assigns the vector to the parallel partitioning of the input vector in_vector, and copies all the data.

The semantics of this operator are complex. If the two vectors have the same size, and if either the left or right hand side vector of the assignment (i.e., either the input vector on the right hand side, or the calling vector to the left of the assignment operator) currently has ghost elements, then the left hand side vector will also have ghost values and will consequently be a read-only vector (see also the glossary entry on the issue). Otherwise, the left hand vector will be a writable vector after this operation. These semantics facilitate having a vector with ghost elements on the left hand side of the assignment, and a vector without ghost elements on the right hand side, with the resulting left hand side vector having the correct values in both its locally owned and its ghost elements.

On the other hand, if the left hand side vector does not have the correct size yet, or is perhaps an entirely uninitialized vector, then the assignment is simply a copy operation in the usual sense: In that case, if the right hand side has no ghost elements (i.e., is a completely distributed vector), then the left hand side will have no ghost elements either. And if the right hand side has ghost elements (and is consequently read-only), then the left hand side will have these same properties after the operation.

| Vector< Number, MemorySpace > & LinearAlgebra::distributed::Vector< Number, MemorySpace >::operator= | ( | const Vector< Number2, MemorySpace > & | in_vector | ) |

Assigns the vector to the parallel partitioning of the input vector in_vector, and copies all the data.

If one of the input vector or the calling vector (to the left of the assignment operator) had ghost elements set before this operation, the calling vector will have ghost values set. Otherwise, it will be in write mode. If the input vector does not have any ghost elements at all, the vector will also update its ghost values in analogy to the respective setting the Trilinos and PETSc vectors.

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::compress | ( | VectorOperation::values | operation | ) |

This function copies the data that has accumulated in the data buffer for ghost indices to the owning processor. For the meaning of the argument operation, see the entry on Compressing distributed vectors and matrices in the glossary.

There are four variants for this function. If called with argument VectorOperation::add adds all the data accumulated in ghost elements to the respective elements on the owning processor and clears the ghost array afterwards. If called with argument VectorOperation::insert, a set operation is performed. Since setting elements in a vector with ghost elements is ambiguous (as one can set both the element on the ghost site as well as the owning site), this operation makes the assumption that all data is set correctly on the owning processor. Upon call of compress(VectorOperation::insert), all ghost entries are thus simply zeroed out (using zero_ghost_values()). In debug mode, a check is performed for whether the data set is actually consistent between processors, i.e., whenever a non-zero ghost element is found, it is compared to the value on the owning processor and an exception is thrown if these elements do not agree. If called with VectorOperation::min or VectorOperation::max, the minimum or maximum on all elements across the processors is set.

+inf for VectorOperation::min, and -inf for VectorOperation::max. If all values are initialized with values below zero and compress is called with VectorOperation::max two times subsequently, the maximal value after the second calculation will be zero. | void LinearAlgebra::distributed::Vector< Number, MemorySpace >::update_ghost_values | ( | ) | const |

Fills the data field for ghost indices with the values stored in the respective positions of the owning processor. This function is needed before reading from ghosts. The function is const even though ghost data is changed. This is needed to allow functions with a const vector to perform the data exchange without creating temporaries.

After calling this method, write access to ghost elements of the vector is forbidden and an exception is thrown. Only read access to ghost elements is allowed in this state. Note that all subsequent operations on this vector, like global vector addition, etc., will also update the ghost values by a call to this method after the operation. However, global reduction operations like norms or the inner product will always ignore ghost elements in order to avoid counting the ghost data more than once. To allow writing to ghost elements again, call zero_out_ghost_values().

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::compress_start | ( | const unsigned int | communication_channel = 0, |

| VectorOperation::values | operation = VectorOperation::add ) |

Initiates communication for the compress() function with non-blocking communication. This function does not wait for the transfer to finish, in order to allow for other computations during the time it takes until all data arrives.

Before the data is actually exchanged, the function must be followed by a call to compress_finish().

In case this function is called for more than one vector before compress_finish() is invoked, it is mandatory to specify a unique communication channel to each such call, in order to avoid several messages with the same ID that will corrupt this operation. Any communication channel less than 100 is a valid value (in particular, the range [100, 200) is reserved for LinearAlgebra::distributed::BlockVector).

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::compress_finish | ( | VectorOperation::values | operation | ) |

For all requests that have been initiated in compress_start, wait for the communication to finish. Once it is finished, add or set the data (depending on the flag operation) to the respective positions in the owning processor, and clear the contents in the ghost data fields. The meaning of this argument is the same as in compress().

This function should be called exactly once per vector after calling compress_start, otherwise the result is undefined. In particular, it is not well-defined to call compress_start on the same vector again before compress_finished has been called. However, there is no warning to prevent this situation.

Must follow a call to the compress_start function.

When the MemorySpace is Default and MPI is not GPU-aware, data changed on the device after the call to compress_start will be lost.

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::update_ghost_values_start | ( | const unsigned int | communication_channel = 0 | ) | const |

Initiates communication for the update_ghost_values() function with non-blocking communication. This function does not wait for the transfer to finish, in order to allow for other computations during the time it takes until all data arrives.

Before the data is actually exchanged, the function must be followed by a call to update_ghost_values_finish().

In case this function is called for more than one vector before update_ghost_values_finish() is invoked, it is mandatory to specify a unique communication channel to each such call, in order to avoid several messages with the same ID that will corrupt this operation. Any communication channel less than 100 is a valid value (in particular, the range [100, 200) is reserved for LinearAlgebra::distributed::BlockVector).

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::update_ghost_values_finish | ( | ) | const |

For all requests that have been started in update_ghost_values_start, wait for the communication to finish.

Must follow a call to the update_ghost_values_start function before reading data from ghost indices.

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::zero_out_ghost_values | ( | ) | const |

This method zeros the entries on ghost dofs, but does not touch locally owned DoFs.

After calling this method, read access to ghost elements of the vector is forbidden and an exception is thrown. Only write access to ghost elements is allowed in this state.

| bool LinearAlgebra::distributed::Vector< Number, MemorySpace >::has_ghost_elements | ( | ) | const |

Return whether the vector currently is in a state where ghost values can be read or not. This is the same functionality as other parallel vectors have. If this method returns false, this only means that read-access to ghost elements is prohibited whereas write access is still possible (to those entries specified as ghosts during initialization), not that there are no ghost elements at all.

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::copy_locally_owned_data_from | ( | const Vector< Number2, MemorySpace > & | src | ) |

This method copies the data in the locally owned range from another distributed vector src into the calling vector. As opposed to operator= that also includes ghost entries, this operation ignores the ghost range. The only prerequisite is that the local range on the calling vector and the given vector src are the same on all processors. It is explicitly allowed that the two vectors have different ghost elements that might or might not be related to each other.

Since no data exchange is performed, make sure that neither src nor the calling vector have pending communications in order to obtain correct results.

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::import_elements | ( | const Vector< Number, MemorySpace2 > & | src, |

| VectorOperation::values | operation ) |

Import all the elements present in the distributed vector src. VectorOperation::values operation is used to decide if the elements in V should be added to the current vector or replace the current elements. The main purpose of this function is to get data from one memory space, e.g. Default, to the other, e.g. the Host.

|

inline |

Definition at line 717 of file la_parallel_vector.h.

| Vector< Number, MemorySpace > & LinearAlgebra::distributed::Vector< Number, MemorySpace >::operator*= | ( | const Number | factor | ) |

Multiply the entire vector by a fixed factor.

| Vector< Number, MemorySpace > & LinearAlgebra::distributed::Vector< Number, MemorySpace >::operator/= | ( | const Number | factor | ) |

Divide the entire vector by a fixed factor.

| Vector< Number, MemorySpace > & LinearAlgebra::distributed::Vector< Number, MemorySpace >::operator+= | ( | const Vector< Number, MemorySpace > & | V | ) |

Add the vector V to the present one.

| Vector< Number, MemorySpace > & LinearAlgebra::distributed::Vector< Number, MemorySpace >::operator-= | ( | const Vector< Number, MemorySpace > & | V | ) |

Subtract the vector V from the present one.

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::import_elements | ( | const LinearAlgebra::ReadWriteVector< Number > & | V, |

| const VectorOperation::values | operation, | ||

| const std::shared_ptr< const Utilities::MPI::CommunicationPatternBase > & | communication_pattern = {} ) |

Import all the elements present in the vector's IndexSet from the input vector V. VectorOperation::values operation is used to decide if the elements in V should be added to the current vector or replace the current elements. The last parameter can be used if the same communication pattern is used multiple times. This can be used to improve performance.

|

inline |

Definition at line 776 of file la_parallel_vector.h.

| Number LinearAlgebra::distributed::Vector< Number, MemorySpace >::operator* | ( | const Vector< Number, MemorySpace > & | V | ) | const |

Return the scalar product of two vectors.

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::add | ( | const Number | a | ) |

Add a to all components. Note that a is a scalar not a vector.

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::add | ( | const Number | a, |

| const Vector< Number, MemorySpace > & | V ) |

Simple addition of a multiple of a vector, i.e. *this += a*V.

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::add | ( | const Number | a, |

| const Vector< Number, MemorySpace > & | V, | ||

| const Number | b, | ||

| const Vector< Number, MemorySpace > & | W ) |

Multiple addition of scaled vectors, i.e. *this += a*V+b*W.

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::add | ( | const std::vector< size_type > & | indices, |

| const std::vector< Number > & | values ) |

A collective add operation: This function adds a whole set of values stored in values to the vector components specified by indices.

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::sadd | ( | const Number | s, |

| const Number | a, | ||

| const Vector< Number, MemorySpace > & | V ) |

Scaling and simple addition of a multiple of a vector, i.e. this = s(*this)+a*V.

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::scale | ( | const Vector< Number, MemorySpace > & | scaling_factors | ) |

Scale each element of this vector by the corresponding element in the argument. This function is mostly meant to simulate multiplication (and immediate re-assignment) by a diagonal scaling matrix.

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::equ | ( | const Number | a, |

| const Vector< Number, MemorySpace > & | V ) |

Assignment *this = a*V.

| real_type LinearAlgebra::distributed::Vector< Number, MemorySpace >::l1_norm | ( | ) | const |

Return the l1 norm of the vector (i.e., the sum of the absolute values of all entries among all processors).

| real_type LinearAlgebra::distributed::Vector< Number, MemorySpace >::l2_norm | ( | ) | const |

Return the l_2 norm of the vector (i.e., the square root of the sum of the square of all entries among all processors).

| real_type LinearAlgebra::distributed::Vector< Number, MemorySpace >::norm_sqr | ( | ) | const |

Return the square of the l_2 norm of the vector.

| real_type LinearAlgebra::distributed::Vector< Number, MemorySpace >::linfty_norm | ( | ) | const |

Return the maximum norm of the vector (i.e., the maximum absolute value among all entries and among all processors).

| Number LinearAlgebra::distributed::Vector< Number, MemorySpace >::add_and_dot | ( | const Number | a, |

| const Vector< Number, MemorySpace > & | V, | ||

| const Vector< Number, MemorySpace > & | W ) |

Perform a combined operation of a vector addition and a subsequent inner product, returning the value of the inner product. In other words, the result of this function is the same as if the user called

The reason this function exists is that this operation involves less memory transfer than calling the two functions separately. This method only needs to load three vectors, this, V, W, whereas calling separate methods means to load the calling vector this twice. Since most vector operations are memory transfer limited, this reduces the time by 25% (or 50% if W equals this).

For complex-valued vectors, the scalar product in the second step is implemented as \left<v,w\right>=\sum_i v_i \bar{w_i}.

|

overridevirtual |

Return the global size of the vector, equal to the sum of the number of locally owned indices among all processors.

Implements ReadVector< Number >.

| ::IndexSet LinearAlgebra::distributed::Vector< Number, MemorySpace >::locally_owned_elements | ( | ) | const |

Return an index set that describes which elements of this vector are owned by the current processor. As a consequence, the index sets returned on different processors if this is a distributed vector will form disjoint sets that add up to the complete index set. Obviously, if a vector is created on only one processor, then the result would satisfy

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::print | ( | std::ostream & | out, |

| const unsigned int | precision = 3, | ||

| const bool | scientific = true, | ||

| const bool | across = true ) const |

Print the vector to the output stream out.

| std::size_t LinearAlgebra::distributed::Vector< Number, MemorySpace >::memory_consumption | ( | ) | const |

Return the memory consumption of this class in bytes.

| Vector< Number, MemorySpace > & LinearAlgebra::distributed::Vector< Number, MemorySpace >::operator= | ( | const Number | s | ) |

Sets all elements of the vector to the scalar s. If the scalar is zero, also ghost elements are set to zero, otherwise they remain unchanged.

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::add | ( | const std::vector< size_type > & | indices, |

| const ::Vector< OtherNumber > & | values ) |

This is a collective add operation that adds a whole set of values stored in values to the vector components specified by indices.

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::add | ( | const size_type | n_elements, |

| const size_type * | indices, | ||

| const OtherNumber * | values ) |

Take an address where n_elements are stored contiguously and add them into the vector.

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::sadd | ( | const Number | s, |

| const Vector< Number, MemorySpace > & | V ) |

Scaling and simple vector addition, i.e. this = s(*this)+V.

| size_type LinearAlgebra::distributed::Vector< Number, MemorySpace >::locally_owned_size | ( | ) | const |

Return the local size of the vector, i.e., the number of indices owned locally.

| bool LinearAlgebra::distributed::Vector< Number, MemorySpace >::in_local_range | ( | const size_type | global_index | ) | const |

Return true if the given global index is in the local range of this processor.

| iterator LinearAlgebra::distributed::Vector< Number, MemorySpace >::begin | ( | ) |

Make the Vector class a bit like the vector<> class of the C++ standard library by returning iterators to the start and end of the locally owned elements of this vector.

It holds that end() - begin() == locally_owned_size().

| const_iterator LinearAlgebra::distributed::Vector< Number, MemorySpace >::begin | ( | ) | const |

Return constant iterator to the start of the locally owned elements of the vector.

| iterator LinearAlgebra::distributed::Vector< Number, MemorySpace >::end | ( | ) |

Return an iterator pointing to the element past the end of the array of locally owned entries.

| const_iterator LinearAlgebra::distributed::Vector< Number, MemorySpace >::end | ( | ) | const |

Return a constant iterator pointing to the element past the end of the array of the locally owned entries.

| Number LinearAlgebra::distributed::Vector< Number, MemorySpace >::operator() | ( | const size_type | global_index | ) | const |

Read access to the data in the position corresponding to global_index. The index must be either in the local range of the vector or be specified as a ghost index at construction.

Performance: O(1) for locally owned elements that represent a contiguous range and O(log(nranges)) for ghost elements (quite fast, but slower than local_element()).

| Number & LinearAlgebra::distributed::Vector< Number, MemorySpace >::operator() | ( | const size_type | global_index | ) |

Read and write access to the data in the position corresponding to global_index. The index must be either in the local range of the vector or be specified as a ghost index at construction.

Performance: O(1) for locally owned elements that represent a contiguous range and O(log(nranges)) for ghost elements (quite fast, but slower than local_element()).

| Number LinearAlgebra::distributed::Vector< Number, MemorySpace >::operator[] | ( | const size_type | global_index | ) | const |

Read access to the data in the position corresponding to global_index. The index must be either in the local range of the vector or be specified as a ghost index at construction.

This function does the same thing as operator().

| Number & LinearAlgebra::distributed::Vector< Number, MemorySpace >::operator[] | ( | const size_type | global_index | ) |

Read and write access to the data in the position corresponding to global_index. The index must be either in the local range of the vector or be specified as a ghost index at construction.

This function does the same thing as operator().

| Number LinearAlgebra::distributed::Vector< Number, MemorySpace >::local_element | ( | const size_type | local_index | ) | const |

Read access to the data field specified by local_index. Locally owned indices can be accessed with indices [0,locally_owned_size), and ghost indices with indices [locally_owned_size,locally_owned_size+get_partitioner()->n_ghost_indices()].

Performance: Direct array access (fast).

| Number & LinearAlgebra::distributed::Vector< Number, MemorySpace >::local_element | ( | const size_type | local_index | ) |

Read and write access to the data field specified by local_index. Locally owned indices can be accessed with indices [0,locally_owned_size()), and ghost indices with indices [locally_owned_size(), locally_owned_size()+n_ghosts].

Performance: Direct array access (fast).

| Number * LinearAlgebra::distributed::Vector< Number, MemorySpace >::get_values | ( | ) | const |

Return the pointer to the underlying raw array.

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::extract_subvector_to | ( | const std::vector< size_type > & | indices, |

| std::vector< OtherNumber > & | values ) const |

Instead of getting individual elements of a vector via operator(), this function allows getting a whole set of elements at once. The indices of the elements to be read are stated in the first argument, the corresponding values are returned in the second.

If the current vector is called v, then this function is the equivalent to the code

indices and values arrays must be identical.

|

overridevirtual |

Extract a range of elements all at once.

Implements ReadVector< Number >.

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::extract_subvector_to | ( | ForwardIterator | indices_begin, |

| const ForwardIterator | indices_end, | ||

| OutputIterator | values_begin ) const |

Instead of getting individual elements of a vector via operator(), this function allows getting a whole set of elements at once. In contrast to the previous function, this function obtains the indices of the elements by dereferencing all elements of the iterator range provided by the first two arguments, and puts the vector values into memory locations obtained by dereferencing a range of iterators starting at the location pointed to by the third argument.

If the current vector is called v, then this function is the equivalent to the code

values_begin as there are iterators between indices_begin and indices_end. | bool LinearAlgebra::distributed::Vector< Number, MemorySpace >::all_zero | ( | ) | const |

Return whether the vector contains only elements with value zero. This is a collective operation. This function is expensive, because potentially all elements have to be checked.

| Number LinearAlgebra::distributed::Vector< Number, MemorySpace >::mean_value | ( | ) | const |

Compute the mean value of all the entries in the vector.

| real_type LinearAlgebra::distributed::Vector< Number, MemorySpace >::lp_norm | ( | const real_type | p | ) | const |

l_p-norm of the vector. The pth root of the sum of the pth powers of the absolute values of the elements.

| MPI_Comm LinearAlgebra::distributed::Vector< Number, MemorySpace >::get_mpi_communicator | ( | ) | const |

Return the underlying MPI communicator.

| const std::shared_ptr< const Utilities::MPI::Partitioner > & LinearAlgebra::distributed::Vector< Number, MemorySpace >::get_partitioner | ( | ) | const |

Return the MPI partitioner that describes the parallel layout of the vector. This object can be used to initialize another vector with the respective reinit() call, for additional queries regarding the parallel communication, or the compatibility of partitioners.

| bool LinearAlgebra::distributed::Vector< Number, MemorySpace >::partitioners_are_compatible | ( | const Utilities::MPI::Partitioner & | part | ) | const |

Check whether the given partitioner is compatible with the partitioner used for this vector. Two partitioners are compatible if they have the same local size and the same ghost indices. They do not necessarily need to be the same data field of the shared pointer. This is a local operation only, i.e., if only some processors decide that the partitioning is not compatible, only these processors will return false, whereas the other processors will return true.

| bool LinearAlgebra::distributed::Vector< Number, MemorySpace >::partitioners_are_globally_compatible | ( | const Utilities::MPI::Partitioner & | part | ) | const |

Check whether the given partitioner is compatible with the partitioner used for this vector. Two partitioners are compatible if they have the same local size and the same ghost indices. They do not necessarily need to be the same data field. As opposed to partitioners_are_compatible(), this method checks for compatibility among all processors and the method only returns true if the partitioner is the same on all processors.

This method performs global communication, so make sure to use it only in a context where all processors call it the same number of times.

| void LinearAlgebra::distributed::Vector< Number, MemorySpace >::set_ghost_state | ( | const bool | ghosted | ) | const |

Change the ghost state of this vector to ghosted.

| const std::vector< ArrayView< const Number > > & LinearAlgebra::distributed::Vector< Number, MemorySpace >::shared_vector_data | ( | ) | const |

Get pointers to the beginning of the values of the other processes of the same shared-memory domain.

|

private |

Simple addition of a multiple of a vector, i.e. *this += a*V without MPI communication.

|

private |

Scaling and simple addition of a multiple of a vector, i.e. this = s(*this)+a*V without MPI communication.

|

private |

Local part of the inner product of two vectors.

|

private |

Local part of norm_sqr().

|

private |

Local part of mean_value().

|

private |

Local part of l1_norm().

|

private |

Local part of lp_norm().

|

private |

Local part of linfty_norm().

|

private |

Local part of the addition followed by an inner product of two vectors. The same applies for complex-valued vectors as for the add_and_dot() function.

|

private |

A helper function that clears the compress_requests and update_ghost_values_requests field. Used in reinit() functions.

|

private |

A helper function that is used to resize the val array.

| Vector & LinearAlgebra::distributed::Vector< Number, MemorySpace >::operator= | ( | const ::Vector< number > & | v | ) |

Definition at line 534 of file trilinos_vector.cc.

|

inherited |

Subscribes a user of the object by storing the pointer validity. The subscriber may be identified by text supplied as identifier.

Definition at line 135 of file subscriptor.cc.

|

inherited |

Unsubscribes a user from the object.

identifier and the validity pointer must be the same as the one supplied to subscribe(). Definition at line 155 of file subscriptor.cc.

|

inlineinherited |

Return the present number of subscriptions to this object. This allows to use this class for reference counted lifetime determination where the last one to unsubscribe also deletes the object.

Definition at line 300 of file subscriptor.h.

|

inlineinherited |

List the subscribers to the input stream.

Definition at line 317 of file subscriptor.h.

|

inherited |

List the subscribers to deallog.

Definition at line 203 of file subscriptor.cc.

|

inlineinherited |

Read or write the data of this object to or from a stream for the purpose of serialization using the BOOST serialization library.

This function does not actually serialize any of the member variables of this class. The reason is that what this class stores is only who subscribes to this object, but who does so at the time of storing the contents of this object does not necessarily have anything to do with who subscribes to the object when it is restored. Consequently, we do not want to overwrite the subscribers at the time of restoring, and then there is no reason to write the subscribers out in the first place.

Definition at line 309 of file subscriptor.h.

|

privatenoexceptinherited |

Check that there are no objects subscribing to this object. If this check passes then it is safe to destroy the current object. It this check fails then this function will either abort or print an error message to deallog (by using the AssertNothrow mechanism), but will not throw an exception.

Definition at line 52 of file subscriptor.cc.

|

friend |

Typedef for the vector type used

Typedef for the vector type used.

Definition at line 1455 of file la_parallel_vector.h.

|

friend |

Typedef for the block-vector type used

Typedef for the type used to describe vectors that consist of multiple blocks.

Definition at line 1459 of file la_parallel_vector.h.

|

private |

Shared pointer to store the parallel partitioning information. This information can be shared between several vectors that have the same partitioning.

Definition at line 1372 of file la_parallel_vector.h.

|

private |

The size that is currently allocated in the val array.

Definition at line 1377 of file la_parallel_vector.h.

|

private |

Underlying data structure storing the local elements of this vector.

Definition at line 1382 of file la_parallel_vector.h.

|

mutableprivate |

For parallel loops with TBB, this member variable stores the affinity information of loops.

Definition at line 1389 of file la_parallel_vector.h.

|

private |

Temporary storage that holds the data that is sent to this processor in compress() or sent from this processor in update_ghost_values().

Definition at line 1396 of file la_parallel_vector.h.

|

mutableprivate |

Stores whether the vector currently allows for reading ghost elements or not. Note that this is to ensure consistent ghost data and does not indicate whether the vector actually can store ghost elements. In particular, when assembling a vector we do not allow reading elements, only writing them.

Definition at line 1405 of file la_parallel_vector.h.

|

private |

A vector that collects all requests from compress() operations. This class uses persistent MPI communicators, i.e., the communication channels are stored during successive calls to a given function. This reduces the overhead involved with setting up the MPI machinery, but it does not remove the need for a receive operation to be posted before the data can actually be sent.

Definition at line 1416 of file la_parallel_vector.h.

|

mutableprivate |

A vector that collects all requests from update_ghost_values() operations. This class uses persistent MPI communicators.

Definition at line 1422 of file la_parallel_vector.h.

|

mutableprivate |

A lock that makes sure that the compress() and update_ghost_values() functions give reasonable results also when used with several threads.

Definition at line 1430 of file la_parallel_vector.h.

|

private |

Communicator to be used for the shared-memory domain. See the general documentation of this class for more information about the purpose of comm_sm.

Definition at line 1437 of file la_parallel_vector.h.

|

mutableprivateinherited |

Store the number of objects which subscribed to this object. Initially, this number is zero, and upon destruction it shall be zero again (i.e. all objects which subscribed should have unsubscribed again).

The creator (and owner) of an object is counted in the map below if HE manages to supply identification.

We use the mutable keyword in order to allow subscription to constant objects also.

This counter may be read from and written to concurrently in multithreaded code: hence we use the std::atomic class template.

Definition at line 218 of file subscriptor.h.

|

mutableprivateinherited |

In this map, we count subscriptions for each different identification string supplied to subscribe().

Definition at line 224 of file subscriptor.h.

|

mutableprivateinherited |

In this vector, we store pointers to the validity bool in the SmartPointer objects that subscribe to this class.

Definition at line 240 of file subscriptor.h.

|

mutableprivateinherited |

Pointer to the typeinfo object of this object, from which we can later deduce the class name. Since this information on the derived class is neither available in the destructor, nor in the constructor, we obtain it in between and store it here.

Definition at line 248 of file subscriptor.h.