|

Reference documentation for deal.II version 9.6.0

|

|

Reference documentation for deal.II version 9.6.0

|

#include <deal.II/base/mpi_noncontiguous_partitioner.h>

Public Member Functions | |

| NoncontiguousPartitioner ()=default | |

| NoncontiguousPartitioner (const IndexSet &indexset_locally_owned, const IndexSet &indexset_ghost, const MPI_Comm communicator) | |

| NoncontiguousPartitioner (const std::vector< types::global_dof_index > &indices_locally_owned, const std::vector< types::global_dof_index > &indices_ghost, const MPI_Comm communicator) | |

| template<typename Number > | |

| void | export_to_ghosted_array (const ArrayView< const Number > &locally_owned_array, const ArrayView< Number > &ghost_array) const |

| template<typename Number > | |

| void | export_to_ghosted_array (const unsigned int communication_channel, const ArrayView< const Number > &locally_owned_array, const ArrayView< Number > &temporary_storage, const ArrayView< Number > &ghost_array, std::vector< MPI_Request > &requests) const |

| template<typename Number > | |

| void | export_to_ghosted_array_start (const unsigned int communication_channel, const ArrayView< const Number > &locally_owned_array, const ArrayView< Number > &temporary_storage, std::vector< MPI_Request > &requests) const |

| template<typename Number > | |

| void | export_to_ghosted_array_finish (const ArrayView< const Number > &temporary_storage, const ArrayView< Number > &ghost_array, std::vector< MPI_Request > &requests) const |

| template<typename Number > | |

| void | import_from_ghosted_array (const VectorOperation::values vector_operation, const ArrayView< Number > &ghost_array, const ArrayView< Number > &locally_owned_storage) const |

| template<typename Number > | |

| void | import_from_ghosted_array (const VectorOperation::values vector_operation, const unsigned int communication_channel, const ArrayView< Number > &ghost_array, const ArrayView< Number > &temporary_storage, const ArrayView< Number > &locally_owned_storage, std::vector< MPI_Request > &requests) const |

| template<typename Number > | |

| void | import_from_ghosted_array_start (const VectorOperation::values vector_operation, const unsigned int communication_channel, const ArrayView< Number > &ghost_array, const ArrayView< Number > &temporary_storage, std::vector< MPI_Request > &requests) const |

| template<typename Number > | |

| void | import_from_ghosted_array_finish (const VectorOperation::values vector_operation, const ArrayView< const Number > &temporary_storage, const ArrayView< Number > &locally_owned_storage, std::vector< MPI_Request > &requests) const |

| std::pair< unsigned int, unsigned int > | n_targets () const |

| unsigned int | temporary_storage_size () const |

| types::global_dof_index | memory_consumption () |

| MPI_Comm | get_mpi_communicator () const override |

| void | reinit (const IndexSet &locally_owned_indices, const IndexSet &ghost_indices, const MPI_Comm communicator) override |

| void | reinit (const std::vector< types::global_dof_index > &locally_owned_indices, const std::vector< types::global_dof_index > &ghost_indices, const MPI_Comm communicator) |

Private Attributes | |

| MPI_Comm | communicator |

| std::vector< unsigned int > | send_ranks |

| std::vector< types::global_dof_index > | send_ptr |

| std::vector< types::global_dof_index > | send_indices |

| std::vector< unsigned int > | recv_ranks |

| std::vector< types::global_dof_index > | recv_ptr |

| std::vector< types::global_dof_index > | recv_indices |

| std::vector< std::uint8_t > | buffers |

| std::vector< MPI_Request > | requests |

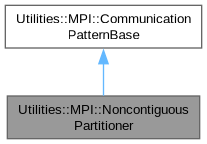

A flexible Partitioner class, which does not impose restrictions regarding the order of the underlying index sets. In other words, this class implements the interface of the Utilities::MPI::CommunicationPatternBase base class with no assumption that every process stores a contiguous part of the array of objects, but that indeed the locally owned indices can be an arbitrary subset of all indices of elements of the array to which they refer.

If you want to store only contiguous parts of these arrays on each process, take a look at Utilities::MPI::Partitioner.

Definition at line 45 of file mpi_noncontiguous_partitioner.h.

|

default |

Default constructor. Requires calling one of the reinit() functions to create a valid object.

| Utilities::MPI::NoncontiguousPartitioner::NoncontiguousPartitioner | ( | const IndexSet & | indexset_locally_owned, |

| const IndexSet & | indexset_ghost, | ||

| const MPI_Comm | communicator ) |

Constructor. Set up point-to-point communication pattern based on the IndexSets arguments indexset_locally_owned and indexset_ghost for the MPI communicator communicator.

Definition at line 30 of file mpi_noncontiguous_partitioner.cc.

| Utilities::MPI::NoncontiguousPartitioner::NoncontiguousPartitioner | ( | const std::vector< types::global_dof_index > & | indices_locally_owned, |

| const std::vector< types::global_dof_index > & | indices_ghost, | ||

| const MPI_Comm | communicator ) |

Constructor. Same as above but for vectors of indices indices_locally_owned and indices_ghost. This allows the indices to not be sorted and the values are read and written automatically at the right position of the vector during update_values(), update_values_start(), and update_values_finish(). It is allowed to include entries with the value numbers::invalid_dof_index which do not take part of the index exchange but are present in the data vectors as padding.

Definition at line 40 of file mpi_noncontiguous_partitioner.cc.

| void Utilities::MPI::NoncontiguousPartitioner::export_to_ghosted_array | ( | const ArrayView< const Number > & | locally_owned_array, |

| const ArrayView< Number > & | ghost_array ) const |

Fill the vector ghost_array according to the precomputed communication pattern with values from locally_owned_array.

| void Utilities::MPI::NoncontiguousPartitioner::export_to_ghosted_array | ( | const unsigned int | communication_channel, |

| const ArrayView< const Number > & | locally_owned_array, | ||

| const ArrayView< Number > & | temporary_storage, | ||

| const ArrayView< Number > & | ghost_array, | ||

| std::vector< MPI_Request > & | requests ) const |

Same as above but with an interface similar to Utilities::MPI::Partitioner::export_to_ghosted_array_start and Utilities::MPI::Partitioner::export_to_ghosted_array_finish. In this function, the user can provide the temporary data structures to be used.

temporary_storage vector has to be at least temporary_storage_size. The reason for this is that this vector is used as buffer for both sending and receiving data.communication_channel. | void Utilities::MPI::NoncontiguousPartitioner::export_to_ghosted_array_start | ( | const unsigned int | communication_channel, |

| const ArrayView< const Number > & | locally_owned_array, | ||

| const ArrayView< Number > & | temporary_storage, | ||

| std::vector< MPI_Request > & | requests ) const |

Start update: Data is packed, non-blocking send and receives are started.

temporary_storage. This allows for padding and other post-processing of the received data.communication_channel. | void Utilities::MPI::NoncontiguousPartitioner::export_to_ghosted_array_finish | ( | const ArrayView< const Number > & | temporary_storage, |

| const ArrayView< Number > & | ghost_array, | ||

| std::vector< MPI_Request > & | requests ) const |

Finish update. The method waits until all data has been sent and received. Once data from any process is received it is processed and placed at the right position of the vector dst.

temporary_storage, since the data has been received into the buffer and not into the destination vector.| void Utilities::MPI::NoncontiguousPartitioner::import_from_ghosted_array | ( | const VectorOperation::values | vector_operation, |

| const ArrayView< Number > & | ghost_array, | ||

| const ArrayView< Number > & | locally_owned_storage ) const |

Similar to the above functions but for importing vector entries from ghost_array to locally_owned_storage.

| void Utilities::MPI::NoncontiguousPartitioner::import_from_ghosted_array | ( | const VectorOperation::values | vector_operation, |

| const unsigned int | communication_channel, | ||

| const ArrayView< Number > & | ghost_array, | ||

| const ArrayView< Number > & | temporary_storage, | ||

| const ArrayView< Number > & | locally_owned_storage, | ||

| std::vector< MPI_Request > & | requests ) const |

Similar to the above function with the difference that users can provide temporaty arrays. This function calls import_from_ghosted_array_start() and import_from_ghosted_array_finish() in sequence.

| void Utilities::MPI::NoncontiguousPartitioner::import_from_ghosted_array_start | ( | const VectorOperation::values | vector_operation, |

| const unsigned int | communication_channel, | ||

| const ArrayView< Number > & | ghost_array, | ||

| const ArrayView< Number > & | temporary_storage, | ||

| std::vector< MPI_Request > & | requests ) const |

Start update for importig values: Data is packed, non-blocking send and receives are started.

| void Utilities::MPI::NoncontiguousPartitioner::import_from_ghosted_array_finish | ( | const VectorOperation::values | vector_operation, |

| const ArrayView< const Number > & | temporary_storage, | ||

| const ArrayView< Number > & | locally_owned_storage, | ||

| std::vector< MPI_Request > & | requests ) const |

Finish update for importing values. The method waits until all data has been sent and received. Once data from any process is received it is processed and placed at the right position of the vector locally_owned_storage.

| std::pair< unsigned int, unsigned int > Utilities::MPI::NoncontiguousPartitioner::n_targets | ( | ) | const |

Returns the number of processes this process sends data to and the number of processes this process receives data from.

Definition at line 51 of file mpi_noncontiguous_partitioner.cc.

| unsigned int Utilities::MPI::NoncontiguousPartitioner::temporary_storage_size | ( | ) | const |

Return the size of the temporary storage needed by the export_to_ghosted_array() functions, if the temporary storage is handled by the user code.

Definition at line 59 of file mpi_noncontiguous_partitioner.cc.

| types::global_dof_index Utilities::MPI::NoncontiguousPartitioner::memory_consumption | ( | ) |

Return memory consumption in Byte.

Definition at line 67 of file mpi_noncontiguous_partitioner.cc.

|

overridevirtual |

Return the underlying MPI communicator.

Implements Utilities::MPI::CommunicationPatternBase.

Definition at line 82 of file mpi_noncontiguous_partitioner.cc.

|

overridevirtual |

Reinitialize the communication pattern.

| [in] | locally_owned_indices | The set of indices of elements in the array mentioned in the class documentation that are stored on the current process. |

| [in] | ghost_indices | The set of indices of elements in the array mentioned in the class documentation that the current process will need to be able to import. |

| [in] | communicator | The MPI communicator used to describe the entire set of processes that participate in the storage and access to elements of the array. |

Implements Utilities::MPI::CommunicationPatternBase.

Definition at line 90 of file mpi_noncontiguous_partitioner.cc.

| void Utilities::MPI::NoncontiguousPartitioner::reinit | ( | const std::vector< types::global_dof_index > & | locally_owned_indices, |

| const std::vector< types::global_dof_index > & | ghost_indices, | ||

| const MPI_Comm | communicator ) |

Initialize the inner data structures using explicit sets of indices. See the documentation of the other reinit() function for what the function does.

Definition at line 167 of file mpi_noncontiguous_partitioner.cc.

|

private |

MPI communicator.

Definition at line 271 of file mpi_noncontiguous_partitioner.h.

|

private |

The ranks this process sends data to.

Definition at line 276 of file mpi_noncontiguous_partitioner.h.

|

private |

Offset of each process within send_buffer.

send_indices this forms a CRS data structure. Definition at line 283 of file mpi_noncontiguous_partitioner.h.

|

private |

Local index of each entry in send_buffer within the destination vector.

send_ptr this forms a CRS data structure. Definition at line 291 of file mpi_noncontiguous_partitioner.h.

|

private |

The ranks this process receives data from.

Definition at line 296 of file mpi_noncontiguous_partitioner.h.

|

private |

Offset of each process within recv_buffer.

recv_indices this forms a CRS data structure. Definition at line 303 of file mpi_noncontiguous_partitioner.h.

|

private |

Local index of each entry in recv_buffer within the destination vector.

recv_ptr this forms a CRS data structure. Definition at line 311 of file mpi_noncontiguous_partitioner.h.

|

mutableprivate |

Buffer containing the values sorted by rank for sending and receiving.

Definition at line 322 of file mpi_noncontiguous_partitioner.h.

|

mutableprivate |

MPI requests for sending and receiving.

Definition at line 329 of file mpi_noncontiguous_partitioner.h.