This tutorial depends on step-15.

This program was contributed by Wolfgang Bangerth, Colorado State University.

This material is based upon work partially supported by National Science Foundation grants OAC-1835673, DMS-1821210, and EAR-1925595; and by the Computational Infrastructure in Geodynamics initiative (CIG), through the National Science Foundation under Award No. EAR-1550901 and The University of California-Davis.

Stefano Zampini (King Abdullah University of Science and Technology) contributed the results obtained with the PETSc variant of this program discussed in the results section below.

Introduction

The step-15 program solved the following, nonlinear equation describing the minimal surface problem:

\begin{align*}
  -\nabla \cdot \left( \frac{1}{\sqrt{1+|\nabla u|^{2}}}\nabla u \right) &= 0 \qquad\qquad &&\textrm{in} ~ \Omega
  \\
  u&=g \qquad\qquad &&\textrm{on} ~ \partial \Omega.
\end{align*}

step-15 uses Newton's method, which works by repeatedly solving a linearized problem for an update \delta u_k (called the "search direction"), computing a "step length" \alpha_k, and then combining them to compute the new guess for the solution via

\begin{align*}
  u_{k+1} = u_k + \alpha_k \, \delta u_k.
\end{align*}

In the course of the discussions in step-15, we found that it is awkward to compute the step length, and so just settled for a simple choice: Always choose \alpha_k=0.1. This is of course not efficient: We know that we can only realize Newton's quadratic convergence rate if we are eventually able to choose \alpha_k=1, though we may have to choose it smaller for the first few iterations, when we are still too far from the solution to take a step of this length.

Among the goals of this program is therefore to address this shortcoming. Since line search algorithms are not entirely trivial to implement, one does as one should do anyway: Import complicated functionality from an external library. To this end, we will make use of the interfaces deal.II has to one of the big nonlinear solver packages, namely the KINSOL sub-package of the SUNDIALS suite. SUNDIALS is, at its heart, a package meant to solve complex ordinary differential equations (ODEs) and differential-algebraic equations (DAEs), and the deal.II interfaces allow for this via the classes in the SUNDIALS namespace: Notably the SUNDIALS::ARKode and SUNDIALS::IDA classes. But, because that is an important step in the solution of ODEs and DAEs with implicit methods, SUNDIALS also has a solver for nonlinear problems called KINSOL, and deal.II has an interface to it in the form of the SUNDIALS::KINSOL class. This is what we will use for the solution of our problem.

But SUNDIALS isn't just a convenient way for us to avoid writing a line search algorithm. In general, the solution of nonlinear problems is quite expensive, and one typically wants to save as much compute time as possible. One way one can achieve this is as follows: The algorithm in step-15 discretizes the problem and then in every iteration solves a linear system of the form

\begin{align*}
  J_k \, \delta U_k = -F_k
\end{align*}

where F_k is the residual vector computed using the current vector of nodal values U_k, J_k is its derivative (called the "Jacobian"), and \delta U_k is the update vector that corresponds to the function \delta u_k mentioned above. The construction of J_k and F_k has been thoroughly discussed in step-15, as has the way to solve the linear system in each Newton iteration. So let us focus on another aspect of the nonlinear solution procedure: Computing F_k is expensive, and assembling the matrix J_k even more so. Do we actually need to do that in every iteration? It turns out that in many applications, this is not actually necessary: These methods often converge even if we replace J_k by an approximation \tilde J_k and solve

\begin{align*}
  \tilde J_k \, \widetilde{\delta U}_k = -F_k
\end{align*}

instead, then update

\begin{align*}
  U_{k+1} = U_k + \alpha_k \, \widetilde{\delta U}_k.
\end{align*}

This may require an iteration or two more because our update \widetilde{\delta U}_k is not quite as good as \delta U_k, but it may still be a win because we don't have to assemble J_k quite as often.

What kind of approximation \tilde J_k would we like for J_k? Theory says that as U_k converges to the exact solution U^\ast, we need to ensure that \tilde J_k converges to J^\ast = \nabla F(U^\ast). In particular, since J_k\rightarrow J^\ast, a valid choice is \tilde J_k = J_k. But so is choosing \tilde J_k = J_k every, say, fifth iteration k=0,5,10,\ldots and, for the other iterations, choosing \tilde J_k equal to the last computed J_{k'}. This is what we will do here: we will just re-use \tilde J_{k-1} from the previous iteration, which may again be what we had used in the iteration before that, \tilde J_{k-2}.

This scheme becomes even more interesting if, for the solution of the linear system with J_k, we don't just have to assemble a matrix, but also compute a good preconditioner, for example if we were to use a sparse LU decomposition via the SparseDirectUMFPACK class, or a geometric or algebraic multigrid. In those cases, we would also not have to update the preconditioner, whose computation may have taken about as long as or longer than the assembly of the matrix in the first place. Indeed, with this mindset, we should probably think about using the best preconditioner we can think of, even though its construction is typically quite expensive: We will hope to amortize the cost of computing this preconditioner by applying it to more than just one linear solve.

The big question is, of course: By what criterion do we decide whether we can get away with the approximation \tilde J_k based on a previously computed Jacobian matrix J_{k-s} that goes back s steps, or whether we need to – at least in this iteration – actually re-compute the Jacobian J_k and the corresponding preconditioner? This is, like the issue with line search, one that requires a non-trivial amount of code that monitors the convergence of the overall algorithm. We could implement these sorts of things ourselves, but we probably shouldn't: KINSOL already does that for us. It will tell our code when to "update" the Jacobian matrix.

One last consideration applies if we were to use an iterative solver instead of the sparse direct one mentioned above: Not only is it possible to get away with replacing J_k by some approximation \tilde J_k when solving for the update \delta U_k, but one can also ask whether it is necessary to solve the linear system

\begin{align*}
  \tilde J_k \widetilde{\delta U}_k = -F_k
\end{align*}

to high accuracy. The thinking goes like this: While our current solution U_k is still far away from U^\ast, why would we solve this linear system particularly accurately? The update U_{k+1}=U_k + \widetilde{\delta U}_k is likely still going to be far away from the exact solution, so why spend much time on solving the linear system to great accuracy? This is the kind of thinking that underlies algorithms such as the "Eisenstat-Walker trick" [196] in which one is given a tolerance to which the linear system above in iteration k has to be solved, with this tolerance dependent on the progress in the overall nonlinear solver. As before, one could try to implement this oneself, but KINSOL already provides this kind of information for us – though we will not use it in this program since we use a direct solver that requires no solver tolerance and just solves the linear system exactly up to round-off.
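For orientation, one common way to state such a criterion in the literature on inexact Newton methods is sketched below. The symbols \eta_k (the relative linear tolerance), \gamma, and \beta are notation introduced here; how KINSOL actually chooses its tolerance is described in its own documentation and may differ.

```latex
\begin{align*}
  \| F_k + \tilde J_k \, \widetilde{\delta U}_k \| &\le \eta_k \, \| F_k \|,
  \\
  \eta_k &= \gamma \left( \frac{\| F_k \|}{\| F_{k-1} \|} \right)^{\beta},
  \qquad \gamma \in (0,1], \quad \beta \in (1,2].
\end{align*}
```

If the nonlinear residual dropped only a little in the last step, \eta_k is close to \gamma and the linear solve may be sloppy; if it dropped a lot, \eta_k becomes small and the linear system is solved more accurately.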

As a summary of all of these considerations, we could say the following: There is no need to reinvent the wheel. Just like deal.II provides a vast amount of finite-element functionality, SUNDIALS' KINSOL package provides a vast amount of nonlinear solver functionality, and we had better use it.

Note: While this program uses SUNDIALS' KINSOL package as the engine to solve nonlinear problems, KINSOL is not the only option you have. deal.II also has interfaces to PETSc's SNES collection of algorithms (see the PETScWrappers::NonlinearSolver class) as well as to the Trilinos NOX package (see the TrilinosWrappers::NOXSolver class) that provide not only very similar functionality, but also a largely identical interface. If you have installed a version of deal.II that is configured to use either PETSc or Trilinos, but not SUNDIALS, then it is not too difficult to switch this program to use either of the former two packages instead: Basically everything that we say and do below will also be true and work for these other packages! (We will also come back to this point in the results section below.)

How deal.II interfaces with KINSOL

KINSOL, like many similar packages, works in a pretty abstract way. At its core, it sees a nonlinear problem of the form

\begin{align*}
  F(U) = 0
\end{align*}

and constructs a sequence of iterates U_k which, in general, are vectors of the same length as the vector returned by the function F. To do this, there are a few things it needs from the user:

- A way to resize a given vector to the correct size.

- A way to evaluate, for a given vector U, the function F(U). This function is generally called the "residual" operation because the goal is of course to find a point U^\ast for which F(U^\ast)=0; if F(U) returns a nonzero vector, then this is the "residual" (i.e., the "rest", or whatever is "left over"). The function that will do this is in essence the same as the computation of the right hand side vector in step-15, but with an important difference: There, the right hand side denoted the negative of the residual, so we have to switch a sign.

- A way to compute the matrix J_k if that is necessary in the current iteration, along with possibly a preconditioner or other data structures (e.g., a sparse decomposition via SparseDirectUMFPACK if that's what we choose to use to solve a linear system). This operation will generally be called the "setup" operation.

- A way to solve a linear system \tilde J_k x = b with whatever matrix \tilde J_k was last computed. This operation will generally be called the "solve" operation.

All of these operations need to be provided to KINSOL by std::function objects that take the appropriate set of arguments and that generally return an integer that indicates success (a zero return value) or failure (a nonzero return value). Specifically, the objects we will access are the SUNDIALS::KINSOL::reinit_vector, SUNDIALS::KINSOL::residual, SUNDIALS::KINSOL::setup_jacobian, and SUNDIALS::KINSOL::solve_with_jacobian member variables. (See the documentation of these variables for their details.) In our implementation, we will use lambda functions to implement these "callbacks" that in turn can call member functions; KINSOL will then call these callbacks whenever its internal algorithms think it is useful.

Details of the implementation

The majority of the code of this tutorial program is as in step-15, and we will not comment on it in much detail. There is really just one aspect one has to pay some attention to, namely how to compute F(U) given a vector U on the one hand, and J(U) given a vector U separately. At first, this seems trivial: We just take the assemble_system() function and in the one case throw out all code that deals with the matrix and in the other case with the right hand side vector. There: Problem solved.

But it isn't quite as simple. That's because the two are not independent if we have nonzero Dirichlet boundary values, as we do here. The linear system we want to solve contains both interior and boundary degrees of freedom, and when eliminating the boundary degrees of freedom so that only the truly "free" ones remain, using for example AffineConstraints::distribute_local_to_global(), we need to know the matrix when assembling the right hand side vector.

Of course, this completely contravenes the original intent: To not assemble the matrix if we can get away without it. We solve this problem as follows:

- We set the starting guess for the solution vector, U_0, to one where boundary degrees of freedom already have their correct values.

- This implies that all updates can be zero in these degrees of freedom, and we can build both residual vectors F(U_k) and Jacobian matrices J_k that correspond to linear systems whose solutions are zero in these vector components. For this special case, the assembly of matrix and right hand side vectors is independent, and can be broken into separate functions.

There is an assumption here: whenever KINSOL asks for a linear solve with the (approximation of the) Jacobian, this will be for an update \delta U (which has zero boundary values), a multiple of which will be added to the solution (which already has the right boundary values). This may not be true, and if so, we might have to rethink our approach. That said, it turns out that in practice this is exactly what KINSOL does when using a Newton method, and so our approach is successful.

The commented program

Include files

This program starts out like most others with well known include files. Compared to the step-15 program from which most of what we do here is copied, the only difference is the include of the header files from which we import the SparseDirectUMFPACK class and the actual interface to KINSOL:

#include <deal.II/base/quadrature_lib.h>

#include <deal.II/base/function.h>

#include <deal.II/base/timer.h>

#include <deal.II/base/utilities.h>

#include <deal.II/lac/vector.h>

#include <deal.II/lac/full_matrix.h>

#include <deal.II/lac/sparse_matrix.h>

#include <deal.II/lac/dynamic_sparsity_pattern.h>

#include <deal.II/lac/affine_constraints.h>

#include <deal.II/lac/sparse_direct.h>

#include <deal.II/grid/tria.h>

#include <deal.II/grid/grid_generator.h>

#include <deal.II/grid/grid_refinement.h>

#include <deal.II/dofs/dof_handler.h>

#include <deal.II/dofs/dof_accessor.h>

#include <deal.II/dofs/dof_tools.h>

#include <deal.II/fe/fe_values.h>

#include <deal.II/fe/fe_q.h>

#include <deal.II/numerics/vector_tools.h>

#include <deal.II/numerics/data_out.h>

#include <deal.II/numerics/error_estimator.h>

#include <deal.II/numerics/solution_transfer.h>

#include <deal.II/sundials/kinsol.h>

#include <fstream>

#include <iostream>

namespace Step77
{
  using namespace dealii;

The MinimalSurfaceProblem class template

Similarly, the main class of this program is essentially a copy of the one in step-15. The class does, however, split the computation of the Jacobian (system) matrix (and its factorization using a direct solver) and residual into separate functions for the reasons outlined in the introduction. For the same reason, the class also has a pointer to a factorization of the Jacobian matrix that is reset every time we update the Jacobian matrix.

(If you are wondering why the program uses a direct object for the Jacobian matrix but a pointer for the factorization: Every time KINSOL requests that the Jacobian be updated, we can simply write jacobian_matrix=0; to reset it to a zero matrix that we can then fill again. On the other hand, the SparseDirectUMFPACK class does not have any way to throw away its content or to replace it with a new factorization, and so we use a pointer: We just throw away the whole object and create a new one whenever we have a new Jacobian matrix to factor.)

Finally, the class has a timer variable that we will use to assess how long the different parts of the program take so that we can assess whether KINSOL's tendency to not rebuild the matrix and its factorization makes sense. We will discuss this in the "Results" section below.

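template <int dim>
class MinimalSurfaceProblem
{
public:
  MinimalSurfaceProblem();

  void run();

private:
  void setup_system();
  void solve(const Vector<double> &rhs,
             Vector<double>       &solution,
             const double          tolerance);
  void refine_mesh();
  void output_results(const unsigned int refinement_cycle);
  void compute_and_factorize_jacobian(
    const Vector<double> &evaluation_point);
  void compute_residual(const Vector<double> &evaluation_point,
                        Vector<double>       &residual);

  Triangulation<dim> triangulation;

  DoFHandler<dim> dof_handler;
  const FE_Q<dim> fe;

  AffineConstraints<double> zero_constraints;
  AffineConstraints<double> nonzero_constraints;

  SparsityPattern                      sparsity_pattern;
  SparseMatrix<double>                 jacobian_matrix;
  std::unique_ptr<SparseDirectUMFPACK> jacobian_matrix_factorization;

  Vector<double> current_solution;

  TimerOutput computing_timer;
};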


Boundary condition

The class implementing boundary values is a copy of the one in step-15:

template <int dim>
class BoundaryValues : public Function<dim>
{
public:
  virtual double value(const Point<dim>  &p,
                       const unsigned int component = 0) const override;
};

template <int dim>
double BoundaryValues<dim>::value(const Point<dim> &p,
                                  const unsigned int /*component*/) const
{
  return std::sin(2 * numbers::PI * (p[0] + p[1]));
}


The MinimalSurfaceProblem class implementation

Constructor and set up functions

The following few functions are also essentially copies of what step-15 already does, and so there is little to discuss.

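template <int dim>
MinimalSurfaceProblem<dim>::MinimalSurfaceProblem()
  : dof_handler(triangulation)
  , fe(1)
  , computing_timer(std::cout, TimerOutput::never, TimerOutput::wall_times)
{}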

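template <int dim>
void MinimalSurfaceProblem<dim>::setup_system()
{
  dof_handler.distribute_dofs(fe);
  current_solution.reinit(dof_handler.n_dofs());

  zero_constraints.clear();
  VectorTools::interpolate_boundary_values(dof_handler,
                                           0,
                                           Functions::ZeroFunction<dim>(),
                                           zero_constraints);
  DoFTools::make_hanging_node_constraints(dof_handler, zero_constraints);
  zero_constraints.close();

  nonzero_constraints.clear();
  VectorTools::interpolate_boundary_values(dof_handler,
                                           0,
                                           BoundaryValues<dim>(),
                                           nonzero_constraints);
  DoFTools::make_hanging_node_constraints(dof_handler, nonzero_constraints);
  nonzero_constraints.close();

  DynamicSparsityPattern dsp(dof_handler.n_dofs());
  DoFTools::make_sparsity_pattern(dof_handler, dsp, zero_constraints);

  sparsity_pattern.copy_from(dsp);
  jacobian_matrix.reinit(sparsity_pattern);
  jacobian_matrix_factorization.reset();
}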


Assembling and factorizing the Jacobian matrix

The following function is then responsible for assembling and factorizing the Jacobian matrix. The first half of the function is in essence the assemble_system() function of step-15, except that it does not deal with also forming a right hand side vector (i.e., the residual) since we do not always have to do these operations at the same time.
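For reference, the quantity accumulated cell by cell in the code below can be written as follows. The formula is read off from the loop body of this function; the abbreviation a_k for the coefficient and the notation u_k for the finite element function associated with the evaluation point are introduced here for brevity.

```latex
\begin{align*}
  (J_k)_{ij}
  = \int_\Omega \left[
      \nabla\varphi_i \cdot a_k \nabla\varphi_j
      - \nabla\varphi_i \cdot a_k^3 \,
        \left(\nabla\varphi_j \cdot \nabla u_k\right) \nabla u_k
    \right] \mathrm{d}x,
  \qquad
  a_k = \frac{1}{\sqrt{1+|\nabla u_k|^2}}.
\end{align*}
```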

We put the whole assembly functionality into a code block enclosed by curly braces so that we can use a TimerOutput::Scope variable to measure how much time is spent in this code block, excluding everything that happens in this function after the matching closing brace }.

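template <int dim>
void MinimalSurfaceProblem<dim>::compute_and_factorize_jacobian(
  const Vector<double> &evaluation_point)
{
  {
    TimerOutput::Scope t(computing_timer, "assembling the Jacobian");

    std::cout << " Computing Jacobian matrix" << std::endl;

    const QGauss<dim> quadrature_formula(fe.degree + 1);

    jacobian_matrix = 0;

    FEValues<dim> fe_values(fe,
                            quadrature_formula,
                            update_gradients | update_quadrature_points |
                              update_JxW_values);

    const unsigned int dofs_per_cell = fe.n_dofs_per_cell();
    const unsigned int n_q_points    = quadrature_formula.size();

    FullMatrix<double> cell_matrix(dofs_per_cell, dofs_per_cell);

    std::vector<Tensor<1, dim>> evaluation_point_gradients(n_q_points);

    std::vector<types::global_dof_index> local_dof_indices(dofs_per_cell);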

for (const auto &cell : dof_handler.active_cell_iterators())

{

cell_matrix = 0;

fe_values.reinit(cell);

fe_values.get_function_gradients(evaluation_point,

evaluation_point_gradients);

for (unsigned int q = 0; q < n_q_points; ++q)

{

const double coeff =

1.0 /

std::sqrt(1 + evaluation_point_gradients[q] *

evaluation_point_gradients[q]);

for (unsigned int i = 0; i < dofs_per_cell; ++i)

{

for (unsigned int j = 0; j < dofs_per_cell; ++j)

cell_matrix(i, j) +=

(((fe_values.shape_grad(i, q)

* coeff

* fe_values.shape_grad(j, q))

-

(fe_values.shape_grad(i, q)

* coeff * coeff * coeff

*

(fe_values.shape_grad(j, q)

* evaluation_point_gradients[q])

* evaluation_point_gradients[q]))

* fe_values.JxW(q));

}

}

cell->get_dof_indices(local_dof_indices);

zero_constraints.distribute_local_to_global(cell_matrix,

local_dof_indices,

jacobian_matrix);

}

}


The second half of the function then deals with factorizing the so-computed matrix. To do this, we first create a new SparseDirectUMFPACK object and by assigning it to the member variable jacobian_matrix_factorization, we also destroy whatever object that pointer previously pointed to (if any). Then we tell the object to factorize the Jacobian.

As above, we enclose this block of code into curly braces and use a timer to assess how long this part of the program takes.

(Strictly speaking, we don't actually need the matrix any more after we are done here, and could throw the matrix object away. A code intended to be memory efficient would do this, and only create the matrix object in this function, rather than as a member variable of the surrounding class. We omit this step here because using the same coding style as in previous tutorial programs breeds familiarity with the common style and helps make these tutorial programs easier to read.)

  {
    TimerOutput::Scope t(computing_timer, "factorizing the Jacobian");

    std::cout << " Factorizing Jacobian matrix" << std::endl;

    jacobian_matrix_factorization = std::make_unique<SparseDirectUMFPACK>();
    jacobian_matrix_factorization->factorize(jacobian_matrix);
  }
}

Computing the residual vector

The second part of what assemble_system() used to do in step-15 is computing the residual vector, i.e., the right hand side vector of the Newton linear systems. We have broken this out of the previous function, but the following function will be easy to understand if you understood what assemble_system() in step-15 did. Importantly, however, we need to compute the residual not linearized around the current solution vector, but around whatever vector we get from KINSOL. This is necessary for operations such as line search where we want to know what the residual F(U_k + \alpha_k \delta U_k) is for different values of \alpha_k; KINSOL in those cases simply gives us the argument to the function F and we then compute the residual F(\cdot) at this point.

The function prints the norm of the so-computed residual at the end as a way for us to follow along the progress of the program.
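Written as a formula, the entries computed by the loop below are as follows, where u_h denotes the finite element function whose nodal values are the entries of the evaluation point U (this notation is introduced here; the formula itself is read off from the code).

```latex
\begin{align*}
  F(U)_i = \int_\Omega \nabla\varphi_i \cdot
           \frac{1}{\sqrt{1+|\nabla u_h|^2}} \nabla u_h \, \mathrm{d}x,
  \qquad
  u_h(x) = \sum_j U_j \varphi_j(x).
\end{align*}
```

As mentioned in the introduction, step-15 assembled the negative of this vector as the right hand side of its linear systems, which is why the sign in the code differs from the one used there.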

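template <int dim>
void MinimalSurfaceProblem<dim>::compute_residual(
  const Vector<double> &evaluation_point,
  Vector<double>       &residual)
{
  TimerOutput::Scope t(computing_timer, "assembling the residual");

  std::cout << " Computing residual vector..." << std::flush;

  residual = 0.0;

  const QGauss<dim> quadrature_formula(fe.degree + 1);
  FEValues<dim>     fe_values(fe,
                          quadrature_formula,
                          update_gradients | update_quadrature_points |
                            update_JxW_values);

  const unsigned int dofs_per_cell = fe.n_dofs_per_cell();
  const unsigned int n_q_points    = quadrature_formula.size();

  Vector<double>              cell_residual(dofs_per_cell);
  std::vector<Tensor<1, dim>> evaluation_point_gradients(n_q_points);

  std::vector<types::global_dof_index> local_dof_indices(dofs_per_cell);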

for (const auto &cell : dof_handler.active_cell_iterators())

{

cell_residual = 0;

fe_values.reinit(cell);

fe_values.get_function_gradients(evaluation_point,

evaluation_point_gradients);

for (unsigned int q = 0; q < n_q_points; ++q)

{

const double coeff =

1.0 /

std::sqrt(1 + evaluation_point_gradients[q] *

evaluation_point_gradients[q]);

for (unsigned int i = 0; i < dofs_per_cell; ++i)

cell_residual(i) +=

(fe_values.shape_grad(i, q)

* coeff

* evaluation_point_gradients[q]

* fe_values.JxW(q));

}

cell->get_dof_indices(local_dof_indices);

zero_constraints.distribute_local_to_global(cell_residual,

local_dof_indices,

residual);

}

std::cout << " norm=" << residual.l2_norm() << std::endl;

}

Solving linear systems with the Jacobian matrix

Next up is the function that implements the solution of a linear system with the Jacobian matrix. Since we have already factored the matrix when we built the matrix, solving a linear system comes down to applying the inverse matrix to the given right hand side vector: This is what the SparseDirectUMFPACK::vmult() function does that we use here. Following this, we have to make sure that we also address the values of hanging nodes in the solution vector, and this is done using AffineConstraints::distribute().

The function takes an additional, but unused, argument tolerance that indicates how accurately we have to solve the linear system. The meaning of this argument is discussed in the introduction in the context of the "Eisenstat-Walker trick", but since we are using a direct rather than an iterative solver, we are not using this opportunity to solve linear systems only inexactly.

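template <int dim>
void MinimalSurfaceProblem<dim>::solve(const Vector<double> &rhs,
                                       Vector<double>       &solution,
                                       const double /*tolerance*/)
{
  std::cout << " Solving linear system" << std::endl;

  jacobian_matrix_factorization->vmult(solution, rhs);

  zero_constraints.distribute(solution);
}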

Refining the mesh, setting boundary values, and generating graphical output

The following three functions are again simply copies of the ones in step-15:

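template <int dim>
void MinimalSurfaceProblem<dim>::refine_mesh()
{
  Vector<float> estimated_error_per_cell(triangulation.n_active_cells());

  KellyErrorEstimator<dim>::estimate(
    dof_handler,
    QGauss<dim - 1>(fe.degree + 1),
    std::map<types::boundary_id, const Function<dim> *>(),
    current_solution,
    estimated_error_per_cell);

  GridRefinement::refine_and_coarsen_fixed_number(triangulation,
                                                  estimated_error_per_cell,
                                                  0.3,
                                                  0.03);

  triangulation.prepare_coarsening_and_refinement();

  SolutionTransfer<dim> solution_transfer(dof_handler);
  const Vector<double>  coarse_solution = current_solution;
  solution_transfer.prepare_for_coarsening_and_refinement(coarse_solution);

  triangulation.execute_coarsening_and_refinement();

  setup_system();

  solution_transfer.interpolate(coarse_solution, current_solution);

  nonzero_constraints.distribute(current_solution);
}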

template <int dim>

void MinimalSurfaceProblem<dim>::output_results(

const unsigned int refinement_cycle)

{

data_out.add_data_vector(current_solution, "solution");

data_out.build_patches();

const std::string filename =

std::ofstream output(filename);

data_out.write_vtu(output);

}


The run() function and the overall logic of the program

The only function that really is interesting in this program is the one that drives the overall algorithm of starting on a coarse mesh, doing some mesh refinement cycles, and on each mesh using KINSOL to find the solution of the nonlinear algebraic equation we obtain from discretization on this mesh. The refine_mesh() function above makes sure that the solution on one mesh is used as the starting guess on the next mesh. We also use a TimerOutput object to measure how much time every operation on each mesh costs, and reset the timer at the beginning of each cycle.

As discussed in the introduction, it is not necessary to solve problems on coarse meshes particularly accurately since these will only serve as starting guesses for the next mesh. As a consequence, we will use a target tolerance of \tau=10^{-3} \frac{1}{10^k} for the kth mesh refinement cycle.

All of this is encoded in the first part of this function:

template <int dim>

void MinimalSurfaceProblem<dim>::run()

{

setup_system();

nonzero_constraints.distribute(current_solution);

for (unsigned int refinement_cycle = 0; refinement_cycle < 6;

++refinement_cycle)

{

computing_timer.reset();

std::cout << "Mesh refinement step " << refinement_cycle << std::endl;

if (refinement_cycle != 0)

refine_mesh();

const double target_tolerance = 1e-3 *

std::pow(0.1, refinement_cycle);

std::cout << " Target_tolerance: " << target_tolerance << std::endl

<< std::endl;


This is where the fun starts. At the top we create the KINSOL solver object and feed it with an object that encodes a number of additional specifics (of which we only change the nonlinear tolerance we want to reach; but you might want to look into what other members the SUNDIALS::KINSOL::AdditionalData class has and play with them).

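{
  typename SUNDIALS::KINSOL<Vector<double>>::AdditionalData
    additional_data;
  additional_data.function_tolerance = target_tolerance;

  SUNDIALS::KINSOL<Vector<double>> nonlinear_solver(additional_data);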

Then we have to describe the operations that were already mentioned in the introduction. In essence, we have to teach KINSOL how to (i) resize a vector to the correct size, (ii) compute the residual vector, (iii) compute the Jacobian matrix (during which we also compute its factorization), and (iv) solve a linear system with the Jacobian.

All four of these operations are represented by member variables of the SUNDIALS::KINSOL class that are of type std::function, i.e., they are objects to which we can assign a pointer to a function or, as we do here, a "lambda function" that takes the appropriate arguments and returns the appropriate information. It turns out that we can do all of this in just over 20 lines of code.

(If you're not familiar with what "lambda functions" are, take a look at step-12 or at the Wikipedia page on the subject. The idea of lambda functions is that one wants to define a function with a certain set of arguments, but (i) not make it a named function because, typically, the function is used in only one place and it seems unnecessary to give it a global name; and (ii) that the function has access to some of the variables that exist at the place where it is defined, including member variables. The syntax of lambda functions is awkward, but ultimately quite useful.)

At the very end of the code block we then tell KINSOL to go to work and solve our problem. The member functions called from the 'residual', 'setup_jacobian', and 'solve_with_jacobian' functions will then print output to screen that allows us to follow along with the progress of the program.

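  nonlinear_solver.reinit_vector = [&](Vector<double> &x) {
    x.reinit(dof_handler.n_dofs());
  };

  nonlinear_solver.residual =
    [&](const Vector<double> &evaluation_point, Vector<double> &residual) {
      compute_residual(evaluation_point, residual);
    };

  nonlinear_solver.setup_jacobian =
    [&](const Vector<double> &current_u,
        const Vector<double> & /*current_f*/) {
      compute_and_factorize_jacobian(current_u);
    };

  nonlinear_solver.solve_with_jacobian = [&](const Vector<double> &rhs,
                                             Vector<double>       &dst,
                                             const double tolerance) {
    solve(rhs, dst, tolerance);
  };

  nonlinear_solver.solve(current_solution);
}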

The rest is then just house-keeping: Writing data to a file for visualizing, and showing a summary of the timing collected so that we can interpret how long each operation has taken, how often it was executed, etc:

output_results(refinement_cycle);

computing_timer.print_summary();

std::cout << std::endl;

}

}

}

int main()

{

try

{

using namespace Step77;

MinimalSurfaceProblem<2> problem;

problem.run();

}

catch (std::exception &exc)

{

std::cerr << std::endl

<< std::endl

<< "----------------------------------------------------"

<< std::endl;

std::cerr << "Exception on processing: " << std::endl

<< exc.what() << std::endl

<< "Aborting!" << std::endl

<< "----------------------------------------------------"

<< std::endl;

return 1;

}

catch (...)

{

std::cerr << std::endl

<< std::endl

<< "----------------------------------------------------"

<< std::endl;

std::cerr << "Unknown exception!" << std::endl

<< "Aborting!" << std::endl

<< "----------------------------------------------------"

<< std::endl;

return 1;

}

return 0;

}

Results

When running the program, you get output that looks like this:

Mesh refinement step 0

Target_tolerance: 0.001

Computing residual vector... norm=0.867975

Computing Jacobian matrix

Factorizing Jacobian matrix

Solving linear system

Computing residual vector... norm=0.867975

Computing residual vector... norm=0.212073

Solving linear system

Computing residual vector... norm=0.212073

Computing residual vector... norm=0.202631

Solving linear system

Computing residual vector... norm=0.202631

Computing residual vector... norm=0.165773

Solving linear system

Computing residual vector... norm=0.165774

Computing residual vector... norm=0.162594

Solving linear system

Computing residual vector... norm=0.162594

Computing residual vector... norm=0.148175

Solving linear system

Computing residual vector... norm=0.148175

Computing residual vector... norm=0.145391

Solving linear system

Computing residual vector... norm=0.145391

Computing residual vector... norm=0.137551

Solving linear system

Computing residual vector... norm=0.137551

Computing residual vector... norm=0.135366

Solving linear system

Computing residual vector... norm=0.135365

Computing residual vector... norm=0.130367

Solving linear system

Computing residual vector... norm=0.130367

Computing residual vector... norm=0.128704

Computing Jacobian matrix

Factorizing Jacobian matrix

Solving linear system

Computing residual vector... norm=0.128704

Computing residual vector... norm=0.0302623

Solving linear system

Computing residual vector... norm=0.0302624

Computing residual vector... norm=0.0126764

Solving linear system

Computing residual vector... norm=0.0126763

Computing residual vector... norm=0.00488315

Solving linear system

Computing residual vector... norm=0.00488322

Computing residual vector... norm=0.00195788

Solving linear system

Computing residual vector... norm=0.00195781

Computing residual vector... norm=0.000773169

+---------------------------------------------+------------+------------+

| Total wallclock time elapsed since start | 0.121s | |

| | | |

| Section | no. calls | wall time | % of total |

+---------------------------------+-----------+------------+------------+

| assembling the Jacobian | 2 | 0.0151s | 12% |

| assembling the residual | 31 | 0.0945s | 78% |

| factorizing the Jacobian | 2 | 0.00176s | 1.5% |

| graphical output | 1 | 0.00504s | 4.2% |

| linear system solve | 15 | 0.000893s | 0.74% |

+---------------------------------+-----------+------------+------------+

Mesh refinement step 1

Target_tolerance: 0.0001

Computing residual vector... norm=0.2467

Computing Jacobian matrix

Factorizing Jacobian matrix

Solving linear system

Computing residual vector... norm=0.246699

Computing residual vector... norm=0.0357783

Solving linear system

Computing residual vector... norm=0.0357784

Computing residual vector... norm=0.0222161

Solving linear system

Computing residual vector... norm=0.022216

Computing residual vector... norm=0.0122148

Solving linear system

Computing residual vector... norm=0.0122149

Computing residual vector... norm=0.00750795

Solving linear system

Computing residual vector... norm=0.00750787

Computing residual vector... norm=0.00439629

Solving linear system

Computing residual vector... norm=0.00439638

Computing residual vector... norm=0.00265093

Solving linear system

[...]

The way this should be interpreted is most easily explained by looking at the first few lines of the output on the first mesh:

Mesh refinement step 0

Target_tolerance: 0.001

Computing residual vector... norm=0.867975

Computing Jacobian matrix

Factorizing Jacobian matrix

Solving linear system

Computing residual vector... norm=0.867975

Computing residual vector... norm=0.212073

Solving linear system

Computing residual vector... norm=0.212073

Computing residual vector... norm=0.202631

Solving linear system

Computing residual vector... norm=0.202631

Computing residual vector... norm=0.165773

Solving linear system

Computing residual vector... norm=0.165774

Computing residual vector... norm=0.162594

Solving linear system

Computing residual vector... norm=0.162594

Computing residual vector... norm=0.148175

Solving linear system

...

What is happening is this:

- KINSOL first asks for the residual to see whether the desired tolerance has been reached. The answer is no, so it requests that the user program compute the Jacobian matrix (and the function then also factorizes the matrix via SparseDirectUMFPACK).

- KINSOL then instructs us to solve a linear system of the form J_k \, \delta U_k = -F_k with this matrix and the previously computed residual vector.

- It is then time to determine how far we want to go in this direction, i.e., do line search. To this end, KINSOL requires us to compute the residual vector F(U_k + \alpha_k \delta U_k) for different step lengths \alpha_k. For the first step above, it finds an acceptable \alpha_k after two tries, and that's generally what will happen in later line searches as well.

- Having found a suitable updated solution U_{k+1}, the process is repeated except now KINSOL is happy with the current Jacobian matrix and does not instruct us to re-build the matrix and its factorization, instead asking us to solve a linear system with that same matrix. That will happen several times over, and only after ten solves with the same matrix are we instructed to build a matrix again, using what is by then an already substantially improved solution as linearization point.
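The sequence of steps in the list above can be sketched for a scalar model problem. The following is only a minimal illustration under stated assumptions, not KINSOL's actual algorithm: the names NewtonStatistics and lagged_newton are hypothetical, the line search simply halves the step until the residual decreases, and the rule "rebuild after ten solves" is taken from the output shown above rather than from KINSOL's internals.

```cpp
#include <cassert>
#include <cmath>
#include <functional>

// Counters that mirror the messages the tutorial program prints to screen.
struct NewtonStatistics
{
  double       solution             = 0.0;
  unsigned int jacobian_builds      = 0;
  unsigned int linear_solves        = 0;
  unsigned int residual_evaluations = 0;
  bool         converged            = false;
};

// Newton's method for a scalar equation F(u)=0 that re-uses an old
// derivative ("Jacobian") for up to max_solves_per_jacobian solves and
// uses a simple step-halving line search (an assumption of this sketch).
NewtonStatistics
lagged_newton(const std::function<double(double)> &residual,
              const std::function<double(double)> &derivative,
              double                               u,
              const double                         tolerance,
              const unsigned int max_solves_per_jacobian = 10,
              const unsigned int max_iterations          = 100)
{
  assert(tolerance > 0.0);
  assert(max_solves_per_jacobian > 0);

  NewtonStatistics stats;
  double           jacobian = 0.0;
  // Start at the limit so that the first iteration builds the Jacobian.
  unsigned int solves_since_build = max_solves_per_jacobian;

  double f = residual(u);
  ++stats.residual_evaluations;

  for (unsigned int k = 0; k < max_iterations && std::abs(f) > tolerance; ++k)
    {
      // Rebuild the Jacobian only if it has been used often enough.
      if (solves_since_build >= max_solves_per_jacobian)
        {
          jacobian = derivative(u);
          ++stats.jacobian_builds;
          solves_since_build = 0;
        }

      // "Linear solve": J * delta_u = -F.
      const double delta_u = -f / jacobian;
      ++stats.linear_solves;
      ++solves_since_build;

      // Line search: halve alpha until the residual gets smaller.
      double alpha   = 1.0;
      double f_trial = residual(u + alpha * delta_u);
      ++stats.residual_evaluations;
      while (std::abs(f_trial) >= std::abs(f) && alpha > 1e-10)
        {
          alpha *= 0.5;
          f_trial = residual(u + alpha * delta_u);
          ++stats.residual_evaluations;
        }

      u += alpha * delta_u;
      f = f_trial;
    }

  stats.solution  = u;
  stats.converged = (std::abs(f) <= tolerance);
  return stats;
}
```

Calling this with F(u) = atan(u) and starting value u = 3, a case in which full Newton steps would diverge, shows the same qualitative pattern as the output above: the Jacobian is built far less often than linear systems are solved, and each solve is followed by a few residual evaluations for the line search.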

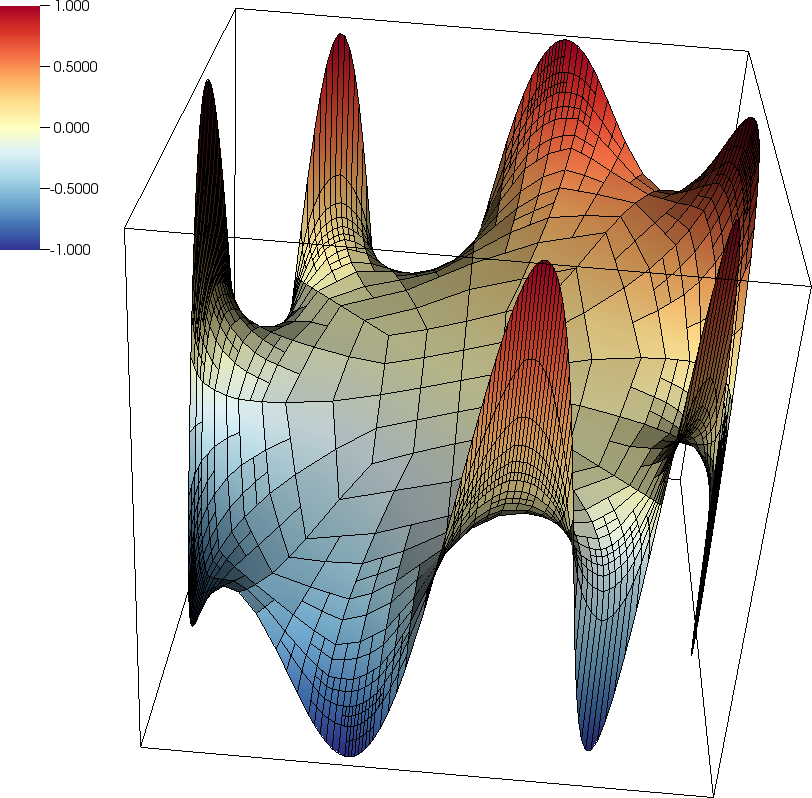

The program also writes the solution to a VTU file at the end of each mesh refinement cycle, and it looks as follows:

The key takeaway messages of this program are the following:

- The solution is the same as the one we computed in step-15, i.e., the interfaces to SUNDIALS' KINSOL package really did what they were supposed to do. This should not come as a surprise, but the important point is that we don't have to spend the time implementing the complex algorithms that underlie advanced nonlinear solvers ourselves.

- KINSOL is able to avoid all sorts of operations such as rebuilding the Jacobian matrix when that is not actually necessary. Comparing the number of linear solves in the output above with the number of times we rebuild the Jacobian and compute its factorization should make it clear that this leads to very substantial savings in terms of compute times, without us having to implement the intricacies of algorithms that determine when we need to rebuild this information.

Possibilities for extensions

Better linear solvers

For all but the small problems we consider here, a sparse direct solver requires too much time and memory; we need an iterative solver like the ones we use in many other programs. The trade-off involved in constructing an expensive preconditioner (say, a geometric or algebraic multigrid method) is different in the current case, however: Since we can re-use the same matrix for numerous linear solves, we can do the same for the preconditioner, and putting more work into building a good preconditioner is more easily justified than if we used it only for a single linear solve, as one does in many other situations.

But iterative solvers also afford other opportunities. For example (and as discussed briefly in the introduction), we may not need to solve to very high accuracy (small tolerances) in early nonlinear iterations as long as we are still far away from the actual solution. This was the basis of the Eisenstat-Walker trick mentioned there. (This is also the underlying reason why one can store the matrix in single precision rather than double precision, see the discussion in the "Possibilities for extensions" section of step-15.)
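To make the idea of a residual-dependent linear tolerance concrete, here is a minimal sketch of one commonly used form of such a "forcing term", in which the relative tolerance for the linear solve is tied to how much the nonlinear residual decreased in the previous step. The function name linear_solver_tolerance and the default values for gamma, alpha, and eta_max are assumptions made for illustration; production implementations add further safeguards that are omitted here.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Relative tolerance eta for the linear solve in the current Newton step:
// the linear system J delta_u = -F would be solved only until
// ||J delta_u + F|| <= eta * ||F||. A large reduction of the nonlinear
// residual in the previous step leads to a tighter linear tolerance.
double linear_solver_tolerance(const double residual_norm,
                               const double previous_residual_norm,
                               const double gamma   = 0.9,
                               const double alpha   = 2.0,
                               const double eta_max = 0.9)
{
  assert(previous_residual_norm > 0.0);

  const double eta =
    gamma * std::pow(residual_norm / previous_residual_norm, alpha);

  // Never ask for less accuracy than eta_max.
  return std::min(eta, eta_max);
}
```

Far from the solution, where the residual barely decreases from one step to the next, this yields a loose tolerance and therefore cheap linear solves; once Newton's method converges rapidly, the tolerance tightens automatically.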

KINSOL provides the function that does the linear solution with a target tolerance that needs to be reached. We ignore it in the program above because the direct solver we use does not need a tolerance and instead solves the linear system exactly (up to round-off, of course), but iterative solvers could make use of this kind of information – and, in fact, should. Indeed, the infrastructure is already there: The solve() function of this program is declared as

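template <int dim>

void MinimalSurfaceProblem<dim>::solve(const Vector<double> &rhs,

Vector<double> &solution,

const double /*tolerance*/)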

i.e., the tolerance parameter already exists, but is unused.
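How an iterative solver could make use of that parameter can be sketched as follows. This is a self-contained stand-in rather than deal.II code: the function solve_with_tolerance, the types DenseMatrix and DenseVector, and the iteration cap are all hypothetical, and the matrix is assumed to be symmetric and positive definite so that a plain conjugate gradient iteration applies. In the actual program, one would presumably pass the tolerance to a SolverControl object used by one of deal.II's iterative solvers instead.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using DenseVector = std::vector<double>;
using DenseMatrix = std::vector<DenseVector>;

double dot(const DenseVector &a, const DenseVector &b)
{
  double sum = 0.0;
  for (std::size_t i = 0; i < a.size(); ++i)
    sum += a[i] * b[i];
  return sum;
}

DenseVector multiply(const DenseMatrix &A, const DenseVector &x)
{
  DenseVector y(A.size(), 0.0);
  for (std::size_t i = 0; i < A.size(); ++i)
    y[i] = dot(A[i], x);
  return y;
}

// Unpreconditioned CG that stops as soon as the l2 norm of the linear
// residual drops below the tolerance handed in by the nonlinear solver.
// Returns the number of iterations performed.
unsigned int solve_with_tolerance(const DenseMatrix &A,
                                  const DenseVector &rhs,
                                  DenseVector       &solution,
                                  const double       tolerance)
{
  assert(A.size() == rhs.size());

  solution.assign(rhs.size(), 0.0);
  DenseVector r = rhs; // residual for the zero initial guess
  DenseVector p = r;   // search direction
  double      r_dot_r = dot(r, r);

  unsigned int n_iterations = 0;
  while (std::sqrt(r_dot_r) > tolerance && n_iterations < 10 * rhs.size())
    {
      const DenseVector Ap   = multiply(A, p);
      const double      step = r_dot_r / dot(p, Ap);
      for (std::size_t i = 0; i < rhs.size(); ++i)
        {
          solution[i] += step * p[i];
          r[i] -= step * Ap[i];
        }

      const double new_r_dot_r = dot(r, r);
      const double beta        = new_r_dot_r / r_dot_r;
      for (std::size_t i = 0; i < rhs.size(); ++i)
        p[i] = r[i] + beta * p[i];

      r_dot_r = new_r_dot_r;
      ++n_iterations;
    }
  return n_iterations;
}
```

With a loose tolerance the loop stops after fewer iterations than with a tight one, which is exactly the saving one hopes to realize in early Newton steps.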

Replacing SUNDIALS' KINSOL by PETSc's SNES

As mentioned in the introduction, SUNDIALS' KINSOL package is not the only player in town. Rather, very similar interfaces exist to the SNES package that is part of PETSc, and the NOX package that is part of Trilinos, via the PETScWrappers::NonlinearSolver and TrilinosWrappers::NOXSolver classes.

It is not very difficult to change the program to use either of these two alternatives. Rather than show exactly what needs to be done, let us point out that a version of this program that uses SNES instead of KINSOL is available as part of the test suite, in the file tests/petsc/step-77-snes.cc. Setting up the solver for PETScWrappers::NonlinearSolver turns out to be even simpler than for the SUNDIALS::KINSOL class we use here because we don't even need the reinit lambda function – SNES only needs us to set up the remaining three functions residual, setup_jacobian, and solve_with_jacobian. The majority of changes necessary to convert the program to use SNES are related to the fact that SNES can only deal with PETSc vectors and matrices, and these need to be set up slightly differently. On the upside, the test suite program mentioned above already works in parallel.

SNES also allows playing with a number of solver parameters, and that enables some interesting comparisons between methods. When you run the test program (or a slightly modified version that outputs information to the screen instead of a file), you get output that looks something like this:

Mesh refinement step 0

Target_tolerance: 0.001

Computing residual vector

0 norm=0.867975

Computing Jacobian matrix

Computing residual vector

Computing residual vector

1 norm=0.212073

Computing Jacobian matrix

Computing residual vector

Computing residual vector

2 norm=0.0189603

Computing Jacobian matrix

Computing residual vector

Computing residual vector

3 norm=0.000314854

[...]

By default, PETSc uses a Newton solver with cubic backtracking, resampling the Jacobian matrix at each Newton step. That is, we compute and factorize the matrix once per Newton step, and then sample the residual to check for a successful line-search.

The attentive reader will have noticed that in this case we compute one extra residual per Newton step. This is because the deal.II code is set up to use a Jacobian-free approach, and the extra residual computation pops up when computing a matrix-vector product to test the validity of the Newton solution.

PETSc can be configured in many interesting ways via the command line. We can visualize the details of the solver by using the command line argument -snes_view, which produces the excerpt below at the end of each solve call:

Mesh refinement step 0

[...]

SNES Object: 1 MPI process

type: newtonls

maximum iterations=50, maximum function evaluations=10000

tolerances: relative=1e-08, absolute=0.001, solution=1e-08

total number of linear solver iterations=3

total number of function evaluations=7

norm schedule ALWAYS

Jacobian is applied matrix-free with differencing

Jacobian is applied matrix-free with differencing, no explicit Jacobian

SNESLineSearch Object: 1 MPI process

type: bt

interpolation: cubic

alpha=1.000000e-04

maxstep=1.000000e+08, minlambda=1.000000e-12

tolerances: relative=1.000000e-08, absolute=1.000000e-15, lambda=1.000000e-08

maximum iterations=40

KSP Object: 1 MPI process

type: preonly

maximum iterations=10000, initial guess is zero

tolerances: relative=1e-05, absolute=1e-50, divergence=10000.

left preconditioning

using NONE norm type for convergence test

type: shell

deal.II user solve

linear system matrix followed by preconditioner matrix:

Mat Object: 1 MPI process

type: mffd

rows=89, cols=89

Matrix-free approximation:

err=1.49012e-08 (relative error in function evaluation)

Using wp compute h routine

Does not compute normU

Mat Object: 1 MPI process

type: seqaij

rows=89, cols=89

total: nonzeros=745, allocated nonzeros=745

total number of mallocs used during MatSetValues calls=0

not using I-node routines

[...]

From the above details, we see that we are using the "newtonls" solver type ("Newton line search"), with "bt" ("backtracking") line search.

From the output of -snes_view we can also get information about the linear solver details; specifically, when using the solve_with_jacobian interface, the deal.II interface internally uses a custom solver configuration within a "shell" preconditioner that wraps the action of solve_with_jacobian.

We can also see the details of the type of matrices used within the solve: "mffd" (matrix-free finite-differencing) for the action of the linearized operator and "seqaij" for the assembled Jacobian we have used to construct the preconditioner.

Diagnostics for the line search procedure can be turned on using the command line option -snes_linesearch_monitor, producing the excerpt below:

Mesh refinement step 0

Target_tolerance: 0.001

Computing residual vector

0 norm=0.867975

Computing Jacobian matrix

Computing residual vector

Computing residual vector

Line search: Using full step: fnorm 8.679748230595e-01 gnorm 2.120728179320e-01

1 norm=0.212073

Computing Jacobian matrix

Computing residual vector

Computing residual vector

Line search: Using full step: fnorm 2.120728179320e-01 gnorm 1.896033864659e-02

2 norm=0.0189603

Computing Jacobian matrix

Computing residual vector

Computing residual vector

Line search: Using full step: fnorm 1.896033864659e-02 gnorm 3.148542199408e-04

3 norm=0.000314854

[...]

Within the run, the Jacobian matrix is assembled (and factored) 29 times:

./step-77-snes | grep "Computing Jacobian" | wc -l

29

KINSOL internally decided when it was necessary to update the Jacobian matrix (which is when it would call setup_jacobian). SNES can do something similar: We can compute the explicit sparse Jacobian matrix only once per refinement step (and reuse the initial factorization) by using the command line option -snes_lag_jacobian -2, producing:

./step-77-snes -snes_lag_jacobian -2 | grep "Computing Jacobian" | wc -l

6

In other words, this dramatically reduces the number of times we have to build the Jacobian matrix, though at a cost to the number of nonlinear steps we have to take.

The lagging period can also be set to a fixed number of steps. For example, if we want to recompute the Jacobian at every other step, we can use:

./step-77-snes -snes_lag_jacobian 2 | grep "Computing Jacobian" | wc -l

25

Note, however, that we didn't exactly halve the number of Jacobian computations. In this case the solution process requires many more nonlinear iterations because the accuracy of the linear system solve is not sufficient.
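The meaning of the two lag values used above can be summarized in a small model. This is only a simplified illustration of the behavior described in the text, not PETSc's implementation; the function names are hypothetical, only the values -2 and positive integers are handled, and the model deliberately ignores that lagging the Jacobian changes how many Newton steps are needed in the first place (which is why the counts reported above are not simply halved).

```cpp
#include <cassert>

// Simplified model: decide whether the Jacobian is rebuilt in a given
// Newton step (counted from zero within one nonlinear solve).
//   lag == -2 : build once at the first opportunity, then never again
//   lag >=  1 : rebuild in every lag-th step
bool jacobian_is_rebuilt(const int lag, const unsigned int newton_step)
{
  assert(lag == -2 || lag >= 1);

  if (lag == -2)
    return newton_step == 0;
  return newton_step % static_cast<unsigned int>(lag) == 0;
}

// Number of Jacobian builds in a solve that takes n_newton_steps steps.
unsigned int count_jacobian_builds(const int          lag,
                                   const unsigned int n_newton_steps)
{
  unsigned int n_builds = 0;
  for (unsigned int step = 0; step < n_newton_steps; ++step)
    if (jacobian_is_rebuilt(lag, step))
      ++n_builds;
  return n_builds;
}
```

In this model, a lag of -2 yields exactly one build per nonlinear solve regardless of the number of steps, which is consistent with the six builds reported above for the six mesh refinement cycles.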

If we switch to using the preconditioned conjugate gradient method as the linear solver, still using our initial factorization as preconditioner, we get:

./step-77-snes -snes_lag_jacobian 2 -ksp_type cg | grep "Computing Jacobian" | wc -l

17

Note that in this case we use an approximate preconditioner (the LU factorization of the initial approximation) but we use a matrix-free operator for the action of our Jacobian matrix, thus solving the correct linear system.

We can switch to a quasi-Newton method by using the command line options -snes_type qn -snes_qn_scale_type jacobian, and we can see that our Jacobian is sampled and factored only when needed, at the cost of an increased number of steps:

Mesh refinement step 0

Target_tolerance: 0.001

Computing residual vector

0 norm=0.867975

Computing Jacobian matrix

Computing residual vector

Computing residual vector

1 norm=0.166391

Computing residual vector

Computing residual vector

2 norm=0.0507703

Computing residual vector

Computing residual vector

3 norm=0.0160007

Computing residual vector

Computing residual vector

Computing residual vector

4 norm=0.00172425

Computing residual vector

Computing residual vector

Computing residual vector

5 norm=0.000460486

[...]

Nonlinear preconditioning can also be used. For example, we can run a right-preconditioned nonlinear GMRES, using one Newton step as a preconditioner, with the command:

./step-77-snes -snes_type ngmres -npc_snes_type newtonls -snes_monitor -npc_snes_monitor | grep SNES

As also discussed for the KINSOL use above, optimal preconditioners should be used instead of the LU factorization used here by default. This is already possible within this tutorial by playing with the command line options. For example, algebraic multigrid can be used by simply specifying -pc_type gamg. When using iterative linear solvers, the "Eisenstat-Walker trick" [196] can also be requested on the command line via -snes_ksp_ew. Using these options, we can see that the number of nonlinear iterations used by the solver tends to increase as the mesh is refined, and that the number of linear iterations increases as the Newton solver enters the second-order ball of convergence:

./step-77-snes -pc_type gamg -ksp_type cg -ksp_converged_reason -snes_converged_reason -snes_ksp_ew | grep CONVERGED

Linear solve converged due to CONVERGED_RTOL iterations 1

Linear solve converged due to CONVERGED_RTOL iterations 2

Linear solve converged due to CONVERGED_RTOL iterations 3

Nonlinear solve converged due to CONVERGED_FNORM_ABS iterations 3

Linear solve converged due to CONVERGED_RTOL iterations 1

Linear solve converged due to CONVERGED_RTOL iterations 1

Linear solve converged due to CONVERGED_RTOL iterations 2

Nonlinear solve converged due to CONVERGED_FNORM_ABS iterations 3

Linear solve converged due to CONVERGED_RTOL iterations 1

Linear solve converged due to CONVERGED_RTOL iterations 2

Linear solve converged due to CONVERGED_RTOL iterations 2

Linear solve converged due to CONVERGED_RTOL iterations 2

Linear solve converged due to CONVERGED_RTOL iterations 3

Linear solve converged due to CONVERGED_RTOL iterations 4

Nonlinear solve converged due to CONVERGED_FNORM_ABS iterations 6

Linear solve converged due to CONVERGED_RTOL iterations 1

Linear solve converged due to CONVERGED_RTOL iterations 1

Linear solve converged due to CONVERGED_RTOL iterations 1

Linear solve converged due to CONVERGED_RTOL iterations 1

Linear solve converged due to CONVERGED_RTOL iterations 1

Linear solve converged due to CONVERGED_RTOL iterations 1

Linear solve converged due to CONVERGED_RTOL iterations 1

Linear solve converged due to CONVERGED_RTOL iterations 1

Linear solve converged due to CONVERGED_RTOL iterations 1

Linear solve converged due to CONVERGED_RTOL iterations 2

Linear solve converged due to CONVERGED_RTOL iterations 4

Linear solve converged due to CONVERGED_RTOL iterations 7

Nonlinear solve converged due to CONVERGED_FNORM_ABS iterations 12

Linear solve converged due to CONVERGED_RTOL iterations 1

Linear solve converged due to CONVERGED_RTOL iterations 2

Linear solve converged due to CONVERGED_RTOL iterations 3

Linear solve converged due to CONVERGED_RTOL iterations 4

Linear solve converged due to CONVERGED_RTOL iterations 7

Nonlinear solve converged due to CONVERGED_FNORM_ABS iterations 5

Linear solve converged due to CONVERGED_RTOL iterations 2

Linear solve converged due to CONVERGED_RTOL iterations 3

Linear solve converged due to CONVERGED_RTOL iterations 7

Linear solve converged due to CONVERGED_RTOL iterations 6

Linear solve converged due to CONVERGED_RTOL iterations 7

Linear solve converged due to CONVERGED_RTOL iterations 12

Nonlinear solve converged due to CONVERGED_FNORM_ABS iterations 6

Finally, we describe how to get some diagnostics on the correctness of the computed Jacobian. Deriving the correct linearization is sometimes difficult: It took a page or two in the introduction to derive the exact bilinear form for the Jacobian matrix, and it would be quite nice to compute it automatically from the residual of which it is the derivative. (This is what step-72 does!) But if one is set on doing things by hand, it would at least be nice if we had a way to check the correctness of the derivation. SNES allows us to do this: We can use the options -snes_test_jacobian -snes_test_jacobian_view:

Mesh refinement step 0

Target_tolerance: 0.001

Computing residual vector

0 norm=0.867975

Computing Jacobian matrix

---------- Testing Jacobian -------------

Testing hand-coded Jacobian, if (for double precision runs) ||J - Jfd||_F/||J||_F is

O(1.e-8), the hand-coded Jacobian is probably correct.

[...]

||J - Jfd||_F/||J||_F = 0.0196815, ||J - Jfd||_F = 0.503436

[...]

Hand-coded minus finite-difference Jacobian with tolerance 1e-05 ----------

Mat Object: 1 MPI process

type: seqaij

row 0: (0, 0.125859)

row 1: (1, 0.0437112)

row 2:

row 3:

row 4: (4, 0.902232)

row 5:

row 6:

row 7:

row 8:

row 9: (9, 0.537306)

row 10:

row 11: (11, 1.38157)

row 12:

[...]

showing that the only errors we commit in assembling the Jacobian are on the boundary dofs. As discussed in the tutorial, those errors are harmless.
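The kind of check reported above can be sketched in a few lines: Build a finite-difference approximation of the Jacobian one column at a time and compare it with the hand-coded one in the Frobenius norm. The function name relative_jacobian_error, the dense storage, and the fixed step size h are assumptions of this sketch; it is meant to illustrate the idea, not to reproduce what PETSc does internally.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>; // dense matrix, one Vec per row

// Returns ||J - J_fd||_F / ||J||_F, where J is the hand-coded Jacobian
// at u and J_fd is obtained by one-sided finite differences of F.
double
relative_jacobian_error(const std::function<Vec(const Vec &)> &residual,
                        const std::function<Mat(const Vec &)> &jacobian,
                        const Vec                             &u,
                        const double                           h = 1e-7)
{
  const Vec f0 = residual(u);
  const Mat J  = jacobian(u);
  assert(J.size() == f0.size());

  double difference_squared = 0.0;
  double norm_squared       = 0.0;

  // Column j of J_fd is (F(u + h e_j) - F(u)) / h.
  for (std::size_t j = 0; j < u.size(); ++j)
    {
      Vec u_perturbed = u;
      u_perturbed[j] += h;
      const Vec f1 = residual(u_perturbed);

      for (std::size_t i = 0; i < f0.size(); ++i)
        {
          const double fd_entry = (f1[i] - f0[i]) / h;
          difference_squared += (J[i][j] - fd_entry) * (J[i][j] - fd_entry);
          norm_squared += J[i][j] * J[i][j];
        }
    }

  assert(norm_squared > 0.0);
  return std::sqrt(difference_squared / norm_squared);
}
```

For a correct hand-coded Jacobian, the returned value is on the order of the finite-difference error; a mistake in even a single entry shows up as a value that is many orders of magnitude larger.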

The key take-away messages of this modification of the tutorial program are therefore basically the same as what we already found using KINSOL:

- The solution is the same as the one we computed in step-15, i.e., the interfaces to PETSc's SNES package really did what they were supposed to do. This should not come as a surprise, but the important point is that we don't have to spend the time implementing the complex algorithms that underlie advanced nonlinear solvers ourselves.

- SNES offers a wide variety of solvers and line search techniques, not only Newton. It also allows us to control Jacobian setups; however, unlike with KINSOL, this is not decided automatically within the library by looking at the residual vector but needs to be specified by the user.

Replacing SUNDIALS' KINSOL by Trilinos' NOX package

Besides KINSOL and SNES, the third option you have is to use the NOX package. As before, rather than showing in detail how that needs to happen, let us simply point out that the test suite program tests/trilinos/step-77-with-nox.cc does this. The modifications necessary to use NOX instead of KINSOL are quite minimal; in particular, NOX (unlike SNES) is happy to work with deal.II's own vector and matrix classes.

Replacing SUNDIALS' KINSOL by a generic nonlinear solver

Having to choose which of these three frameworks (KINSOL, SNES, or NOX) to use at compile time is cumbersome when wanting to compare things. It would be nicer if one could decide the package to use at run time, assuming that one has a copy of deal.II installed that is compiled against all three of these dependencies. It turns out that this is possible, using the class NonlinearSolverSelector that presents a common interface to all three of these solvers, along with the ability to choose which one to use based on run-time parameters.

The plain program

#include <fstream>

#include <iostream>

namespace Step77

{

template <int dim>

class MinimalSurfaceProblem

{

public:

MinimalSurfaceProblem();

void run();

private:

void setup_system();

const double tolerance);

void refine_mesh();

void output_results(const unsigned int refinement_cycle);

void compute_and_factorize_jacobian(

const Vector<double> &evaluation_point);

std::unique_ptr<SparseDirectUMFPACK> jacobian_matrix_factorization;

};

template <int dim>

class BoundaryValues : public Function<dim>

{

public:

const unsigned int component = 0) const override;

};

template <int dim>

double BoundaryValues<dim>::value(

const Point<dim> &p,

const unsigned int ) const

{

}

template <int dim>

MinimalSurfaceProblem<dim>::MinimalSurfaceProblem()

, fe(1)

{}

template <int dim>

void MinimalSurfaceProblem<dim>::setup_system()

{

dof_handler.distribute_dofs(fe);

current_solution.reinit(dof_handler.n_dofs());

zero_constraints.clear();

0,

zero_constraints);

zero_constraints.close();

nonzero_constraints.clear();

0,

BoundaryValues<dim>(),

nonzero_constraints);

nonzero_constraints.close();

sparsity_pattern.copy_from(dsp);

jacobian_matrix.reinit(sparsity_pattern);

jacobian_matrix_factorization.reset();

}

template <int dim>

void MinimalSurfaceProblem<dim>::compute_and_factorize_jacobian(

{

{

std::cout << " Computing Jacobian matrix" << std::endl;

jacobian_matrix = 0;

quadrature_formula,

const unsigned int dofs_per_cell = fe.n_dofs_per_cell();

const unsigned int n_q_points = quadrature_formula.size();

std::vector<Tensor<1, dim>> evaluation_point_gradients(n_q_points);

std::vector<types::global_dof_index> local_dof_indices(dofs_per_cell);

for (const auto &cell : dof_handler.active_cell_iterators())

{

fe_values.reinit(cell);

fe_values.get_function_gradients(evaluation_point,

evaluation_point_gradients);

for (unsigned int q = 0; q < n_q_points; ++q)

{

const double coeff =

1.0 /

std::sqrt(1 + evaluation_point_gradients[q] *

evaluation_point_gradients[q]);

for (unsigned int i = 0; i < dofs_per_cell; ++i)

{

for (unsigned int j = 0; j < dofs_per_cell; ++j)

(((fe_values.shape_grad(i, q)

* coeff

* fe_values.shape_grad(j, q))

-

(fe_values.shape_grad(i, q)

* coeff * coeff * coeff

*

(fe_values.shape_grad(j, q)

* evaluation_point_gradients[q])

* evaluation_point_gradients[q]))

* fe_values.JxW(q));

}

}

cell->get_dof_indices(local_dof_indices);

zero_constraints.distribute_local_to_global(cell_matrix,

local_dof_indices,

jacobian_matrix);

}

}

{

std::cout << " Factorizing Jacobian matrix" << std::endl;

jacobian_matrix_factorization = std::make_unique<SparseDirectUMFPACK>();

jacobian_matrix_factorization->factorize(jacobian_matrix);

}

}

template <int dim>

void MinimalSurfaceProblem<dim>::compute_residual(

{

std::cout << " Computing residual vector..." << std::flush;

residual = 0.0;

quadrature_formula,

const unsigned int dofs_per_cell = fe.n_dofs_per_cell();

const unsigned int n_q_points = quadrature_formula.size();

std::vector<Tensor<1, dim>> evaluation_point_gradients(n_q_points);

std::vector<types::global_dof_index> local_dof_indices(dofs_per_cell);

for (const auto &cell : dof_handler.active_cell_iterators())

{

fe_values.reinit(cell);

fe_values.get_function_gradients(evaluation_point,

evaluation_point_gradients);

for (unsigned int q = 0; q < n_q_points; ++q)

{

const double coeff =

1.0 /

std::sqrt(1 + evaluation_point_gradients[q] *

evaluation_point_gradients[q]);

for (unsigned int i = 0; i < dofs_per_cell; ++i)

(fe_values.shape_grad(i, q)

* coeff

* evaluation_point_gradients[q]

* fe_values.JxW(q));

}

cell->get_dof_indices(local_dof_indices);

zero_constraints.distribute_local_to_global(cell_residual,

local_dof_indices,

residual);

}

std::cout << " norm=" << residual.l2_norm() << std::endl;

}

template <int dim>

const double )

{

std::cout << " Solving linear system" << std::endl;

jacobian_matrix_factorization->vmult(solution, rhs);

zero_constraints.distribute(solution);

}

template <int dim>

void MinimalSurfaceProblem<dim>::refine_mesh()

{

dof_handler,

current_solution,

estimated_error_per_cell);

estimated_error_per_cell,

0.3,

0.03);

solution_transfer.prepare_for_coarsening_and_refinement(coarse_solution);

setup_system();

solution_transfer.interpolate(coarse_solution, current_solution);

nonzero_constraints.distribute(current_solution);

}

template <int dim>

void MinimalSurfaceProblem<dim>::output_results(

const unsigned int refinement_cycle)

{

const std::string filename =

std::ofstream output(filename);

}

template <int dim>

void MinimalSurfaceProblem<dim>::run()

{

setup_system();

nonzero_constraints.distribute(current_solution);

for (unsigned int refinement_cycle = 0; refinement_cycle < 6;

++refinement_cycle)

{

computing_timer.reset();

std::cout << "Mesh refinement step " << refinement_cycle << std::endl;

if (refinement_cycle != 0)

refine_mesh();

const double target_tolerance = 1e-3 * std::pow(0.1, refinement_cycle);

std::cout << " Target_tolerance: " << target_tolerance << std::endl

<< std::endl;

{

additional_data;

additional_data.function_tolerance = target_tolerance;

x.reinit(dof_handler.n_dofs());

};

nonlinear_solver.residual =

compute_residual(evaluation_point, residual);

};

nonlinear_solver.setup_jacobian =

compute_and_factorize_jacobian(current_u);

};

const double tolerance) {

solve(rhs, dst, tolerance);

};

nonlinear_solver.solve(current_solution);

}

output_results(refinement_cycle);

computing_timer.print_summary();

std::cout << std::endl;

}

}

}

int main()

{

try

{

using namespace Step77;

MinimalSurfaceProblem<2> problem;

problem.run();

}

catch (std::exception &exc)

{

std::cerr << std::endl

<< std::endl

<< "----------------------------------------------------"

<< std::endl;

std::cerr << "Exception on processing: " << std::endl

<< exc.what() << std::endl

<< "Aborting!" << std::endl

<< "----------------------------------------------------"

<< std::endl;

return 1;

}

catch (...)

{

std::cerr << std::endl

<< std::endl

<< "----------------------------------------------------"

<< std::endl;

std::cerr << "Unknown exception!" << std::endl

<< "Aborting!" << std::endl

<< "----------------------------------------------------"

<< std::endl;

return 1;

}

return 0;

}
