|

Reference documentation for deal.II version 9.3.3

|

|

Reference documentation for deal.II version 9.3.3

|

This tutorial depends on step-6.

| Table of contents | |

|---|---|

In real life, most partial differential equations are really systems of equations. Accordingly, the solutions are usually vector-valued. The deal.II library supports such problems (see the extensive documentation in the Handling vector valued problems module), and we will show that that is mostly rather simple. The only more complicated problems are in assembling matrix and right hand side, but these are easily understood as well.

In this tutorial program we will want to solve the elastic equations. They are an extension to Laplace's equation with a vector-valued solution that describes the displacement in each space direction of a rigid body which is subject to a force. Of course, the force is also vector-valued, meaning that in each point it has a direction and an absolute value.

One can write the elasticity equations in a number of ways. The one that shows the symmetry with the Laplace equation in the most obvious way is to write it as

\[ - \text{div}\, ({\mathbf C} \nabla \mathbf{u}) = \mathbf f, \]

where \(\mathbf u\) is the vector-valued displacement at each point, \(\mathbf f\) the force, and \({\mathbf C}\) is a rank-4 tensor (i.e., it has four indices) that encodes the stress-strain relationship – in essence, it represents the "spring constant" in Hookes law that relates the displacement to the forces. \({\mathbf C}\) will, in many cases, depend on \(\mathbf x\) if the body whose deformation we want to simulate is composed of different materials.

While the form of the equations above is correct, it is not the way they are usually derived. In truth, the gradient of the displacement \(\nabla\mathbf u\) (a matrix) has no physical meaning whereas its symmetrized version,

\[ \varepsilon(\mathbf u)_{kl} =\frac{1}{2}(\partial_k u_l + \partial_l u_k), \]

does and is typically called the "strain". (Here and in the following, \(\partial_k=\frac{\partial}{\partial x_k}\). We will also use the Einstein summation convention that whenever the same index appears twice in an equation, summation over this index is implied; we will, however, not distinguish between upper and lower indices.) With this definition of the strain, the elasticity equations then read as

\[ - \text{div}\, ({\mathbf C} \varepsilon(\mathbf u)) = \mathbf f, \]

which you can think of as the more natural generalization of the Laplace equation to vector-valued problems. (The form shown first is equivalent to this form because the tensor \({\mathbf C}\) has certain symmetries, namely that \(C_{ijkl}=C_{ijlk}\), and consequently \({\mathbf C} \varepsilon(\mathbf u)_{kl} = {\mathbf C} \nabla\mathbf u\).)

One can of course alternatively write these equations in component form:

\[ - \partial_j (c_{ijkl} \varepsilon_{kl}) = f_i, \qquad i=1\ldots d. \]

In many cases, one knows that the material under consideration is isotropic, in which case by introduction of the two coefficients \(\lambda\) and \(\mu\) the coefficient tensor reduces to

\[ c_{ijkl} = \lambda \delta_{ij} \delta_{kl} + \mu (\delta_{ik} \delta_{jl} + \delta_{il} \delta_{jk}). \]

The elastic equations can then be rewritten in much simpler a form:

\[ - \nabla \lambda (\nabla\cdot {\mathbf u}) - (\nabla \cdot \mu \nabla) {\mathbf u} - \nabla\cdot \mu (\nabla {\mathbf u})^T = {\mathbf f}, \]

and the respective bilinear form is then

\[ a({\mathbf u}, {\mathbf v}) = \left( \lambda \nabla\cdot {\mathbf u}, \nabla\cdot {\mathbf v} \right)_\Omega + \sum_{k,l} \left( \mu \partial_k u_l, \partial_k v_l \right)_\Omega + \sum_{k,l} \left( \mu \partial_k u_l, \partial_l v_k \right)_\Omega, \]

or also writing the first term a sum over components:

\[ a({\mathbf u}, {\mathbf v}) = \sum_{k,l} \left( \lambda \partial_l u_l, \partial_k v_k \right)_\Omega + \sum_{k,l} \left( \mu \partial_k u_l, \partial_k v_l \right)_\Omega + \sum_{k,l} \left( \mu \partial_k u_l, \partial_l v_k \right)_\Omega. \]

But let's get back to the original problem. How do we assemble the matrix for such an equation? A very long answer with a number of different alternatives is given in the documentation of the Handling vector valued problems module. Historically, the solution shown below was the only one available in the early years of the library. It turns out to also be the fastest. On the other hand, if a few per cent of compute time do not matter, there are simpler and probably more intuitive ways to assemble the linear system than the one discussed below but that weren't available until several years after this tutorial program was first written; if you are interested in them, take a look at the Handling vector valued problems module.

Let us go back to the question of how to assemble the linear system. The first thing we need is some knowledge about how the shape functions work in the case of vector-valued finite elements. Basically, this comes down to the following: let \(n\) be the number of shape functions for the scalar finite element of which we build the vector element (for example, we will use bilinear functions for each component of the vector-valued finite element, so the scalar finite element is the FE_Q(1) element which we have used in previous examples already, and \(n=4\) in two space dimensions). Further, let \(N\) be the number of shape functions for the vector element; in two space dimensions, we need \(n\) shape functions for each component of the vector, so \(N=2n\). Then, the \(i\)th shape function of the vector element has the form

\[ \Phi_i({\mathbf x}) = \varphi_{\text{base}(i)}({\mathbf x})\ {\mathbf e}_{\text{comp}(i)}, \]

where \(e_l\) is the \(l\)th unit vector, \(\text{comp}(i)\) is the function that tells us which component of \(\Phi_i\) is the one that is nonzero (for each vector shape function, only one component is nonzero, and all others are zero). \(\varphi_{\text{base}(i)}(x)\) describes the space dependence of the shape function, which is taken to be the \(\text{base}(i)\)-th shape function of the scalar element. Of course, while \(i\) is in the range \(0,\ldots,N-1\), the functions \(\text{comp}(i)\) and \(\text{base}(i)\) have the ranges \(0,1\) (in 2D) and \(0,\ldots,n-1\), respectively.

For example (though this sequence of shape functions is not guaranteed, and you should not rely on it), the following layout could be used by the library:

\begin{eqnarray*} \Phi_0({\mathbf x}) &=& \left(\begin{array}{c} \varphi_0({\mathbf x}) \\ 0 \end{array}\right), \\ \Phi_1({\mathbf x}) &=& \left(\begin{array}{c} 0 \\ \varphi_0({\mathbf x}) \end{array}\right), \\ \Phi_2({\mathbf x}) &=& \left(\begin{array}{c} \varphi_1({\mathbf x}) \\ 0 \end{array}\right), \\ \Phi_3({\mathbf x}) &=& \left(\begin{array}{c} 0 \\ \varphi_1({\mathbf x}) \end{array}\right), \ldots \end{eqnarray*}

where here

\[ \text{comp}(0)=0, \quad \text{comp}(1)=1, \quad \text{comp}(2)=0, \quad \text{comp}(3)=1, \quad \ldots \]

\[ \text{base}(0)=0, \quad \text{base}(1)=0, \quad \text{base}(2)=1, \quad \text{base}(3)=1, \quad \ldots \]

In all but very rare cases, you will not need to know which shape function \(\varphi_{\text{base}(i)}\) of the scalar element belongs to a shape function \(\Phi_i\) of the vector element. Let us therefore define

\[ \phi_i = \varphi_{\text{base}(i)} \]

by which we can write the vector shape function as

\[ \Phi_i({\mathbf x}) = \phi_{i}({\mathbf x})\ {\mathbf e}_{\text{comp}(i)}. \]

You can now safely forget about the function \(\text{base}(i)\), at least for the rest of this example program.

Now using this vector shape functions, we can write the discrete finite element solution as

\[ {\mathbf u}_h({\mathbf x}) = \sum_i \Phi_i({\mathbf x})\ U_i \]

with scalar coefficients \(U_i\). If we define an analog function \({\mathbf v}_h\) as test function, we can write the discrete problem as follows: Find coefficients \(U_i\) such that

\[ a({\mathbf u}_h, {\mathbf v}_h) = ({\mathbf f}, {\mathbf v}_h) \qquad \forall {\mathbf v}_h. \]

If we insert the definition of the bilinear form and the representation of \({\mathbf u}_h\) and \({\mathbf v}_h\) into this formula:

\begin{eqnarray*} \sum_{i,j} U_i V_j \sum_{k,l} \left\{ \left( \lambda \partial_l (\Phi_i)_l, \partial_k (\Phi_j)_k \right)_\Omega + \left( \mu \partial_l (\Phi_i)_k, \partial_l (\Phi_j)_k \right)_\Omega + \left( \mu \partial_l (\Phi_i)_k, \partial_k (\Phi_j)_l \right)_\Omega \right\} \\ = \sum_j V_j \sum_l \left( f_l, (\Phi_j)_l \right)_\Omega. \end{eqnarray*}

We note that here and in the following, the indices \(k,l\) run over spatial directions, i.e. \(0\le k,l < d\), and that indices \(i,j\) run over degrees of freedom.

The local stiffness matrix on cell \(K\) therefore has the following entries:

\[ A^K_{ij} = \sum_{k,l} \left\{ \left( \lambda \partial_l (\Phi_i)_l, \partial_k (\Phi_j)_k \right)_K + \left( \mu \partial_l (\Phi_i)_k, \partial_l (\Phi_j)_k \right)_K + \left( \mu \partial_l (\Phi_i)_k, \partial_k (\Phi_j)_l \right)_K \right\}, \]

where \(i,j\) now are local degrees of freedom and therefore \(0\le i,j < N\). In these formulas, we always take some component of the vector shape functions \(\Phi_i\), which are of course given as follows (see their definition):

\[ (\Phi_i)_l = \phi_i \delta_{l,\text{comp}(i)}, \]

with the Kronecker symbol \(\delta_{nm}\). Due to this, we can delete some of the sums over \(k\) and \(l\):

\begin{eqnarray*} A^K_{ij} &=& \sum_{k,l} \Bigl\{ \left( \lambda \partial_l \phi_i\ \delta_{l,\text{comp}(i)}, \partial_k \phi_j\ \delta_{k,\text{comp}(j)} \right)_K \\ &\qquad\qquad& + \left( \mu \partial_l \phi_i\ \delta_{k,\text{comp}(i)}, \partial_l \phi_j\ \delta_{k,\text{comp}(j)} \right)_K + \left( \mu \partial_l \phi_i\ \delta_{k,\text{comp}(i)}, \partial_k \phi_j\ \delta_{l,\text{comp}(j)} \right)_K \Bigr\} \\ &=& \left( \lambda \partial_{\text{comp}(i)} \phi_i, \partial_{\text{comp}(j)} \phi_j \right)_K + \sum_l \left( \mu \partial_l \phi_i, \partial_l \phi_j \right)_K \ \delta_{\text{comp}(i),\text{comp}(j)} + \left( \mu \partial_{\text{comp}(j)} \phi_i, \partial_{\text{comp}(i)} \phi_j \right)_K \\ &=& \left( \lambda \partial_{\text{comp}(i)} \phi_i, \partial_{\text{comp}(j)} \phi_j \right)_K + \left( \mu \nabla \phi_i, \nabla \phi_j \right)_K \ \delta_{\text{comp}(i),\text{comp}(j)} + \left( \mu \partial_{\text{comp}(j)} \phi_i, \partial_{\text{comp}(i)} \phi_j \right)_K. \end{eqnarray*}

Likewise, the contribution of cell \(K\) to the right hand side vector is

\begin{eqnarray*} f^K_j &=& \sum_l \left( f_l, (\Phi_j)_l \right)_K \\ &=& \sum_l \left( f_l, \phi_j \delta_{l,\text{comp}(j)} \right)_K \\ &=& \left( f_{\text{comp}(j)}, \phi_j \right)_K. \end{eqnarray*}

This is the form in which we will implement the local stiffness matrix and right hand side vectors.

As a final note: in the step-17 example program, we will revisit the elastic problem laid out here, and will show how to solve it in parallel on a cluster of computers. The resulting program will thus be able to solve this problem to significantly higher accuracy, and more efficiently if this is required. In addition, in step-20, step-21, as well as a few other of the later tutorial programs, we will revisit some vector-valued problems and show a few techniques that may make it simpler to actually go through all the stuff shown above, with FiniteElement::system_to_component_index etc.

As usual, the first few include files are already known, so we will not comment on them further.

In this example, we need vector-valued finite elements. The support for these can be found in the following include file :

We will compose the vector-valued finite elements from regular Q1 elements which can be found here, as usual:

This again is C++:

The last step is as in previous programs. In particular, just like in step-7, we pack everything that's specific to this program into a namespace of its own.

ElasticProblem class templateThe main class is, except for its name, almost unchanged with respect to the step-6 example.

The only change is the use of a different class for the fe variable: Instead of a concrete finite element class such as FE_Q, we now use a more generic one, FESystem. In fact, FESystem is not really a finite element itself in that it does not implement shape functions of its own. Rather, it is a class that can be used to stack several other elements together to form one vector-valued finite element. In our case, we will compose the vector-valued element of FE_Q(1) objects, as shown below in the constructor of this class.

Before going over to the implementation of the main class, we declare and define the function which describes the right hand side. This time, the right hand side is vector-valued, as is the solution, so we will describe the changes required for this in some more detail.

To prevent cases where the return vector has not previously been set to the right size we test for this case and otherwise throw an exception at the beginning of the function. Note that enforcing that output arguments already have the correct size is a convention in deal.II, and enforced almost everywhere. The reason is that we would otherwise have to check at the beginning of the function and possibly change the size of the output vector. This is expensive, and would almost always be unnecessary (the first call to the function would set the vector to the right size, and subsequent calls would only have to do redundant checks). In addition, checking and possibly resizing the vector is an operation that can not be removed if we can't rely on the assumption that the vector already has the correct size; this is in contract to the Assert call that is completely removed if the program is compiled in optimized mode.

Likewise, if by some accident someone tried to compile and run the program in only one space dimension (in which the elastic equations do not make much sense since they reduce to the ordinary Laplace equation), we terminate the program in the second assertion. The program will work just fine in 3d, however.

The rest of the function implements computing force values. We will use a constant (unit) force in x-direction located in two little circles (or spheres, in 3d) around points (0.5,0) and (-0.5,0), and y-force in an area around the origin; in 3d, the z-component of these centers is zero as well.

For this, let us first define two objects that denote the centers of these areas. Note that upon construction of the Point objects, all components are set to zero.

If points[point_n] is in a circle (sphere) of radius 0.2 around one of these points, then set the force in x-direction to one, otherwise to zero:

Likewise, if points[point_n] is in the vicinity of the origin, then set the y-force to one, otherwise to zero:

ElasticProblem class implementationFollowing is the constructor of the main class. As said before, we would like to construct a vector-valued finite element that is composed of several scalar finite elements (i.e., we want to build the vector-valued element so that each of its vector components consists of the shape functions of a scalar element). Of course, the number of scalar finite elements we would like to stack together equals the number of components the solution function has, which is dim since we consider displacement in each space direction. The FESystem class can handle this: we pass it the finite element of which we would like to compose the system of, and how often it shall be repeated:

In fact, the FESystem class has several more constructors which can perform more complex operations than just stacking together several scalar finite elements of the same type into one; we will get to know these possibilities in later examples.

Setting up the system of equations is identical to the function used in the step-6 example. The DoFHandler class and all other classes used here are fully aware that the finite element we want to use is vector-valued, and take care of the vector-valuedness of the finite element themselves. (In fact, they do not, but this does not need to bother you: since they only need to know how many degrees of freedom there are per vertex, line and cell, and they do not ask what they represent, i.e. whether the finite element under consideration is vector-valued or whether it is, for example, a scalar Hermite element with several degrees of freedom on each vertex).

The big changes in this program are in the creation of matrix and right hand side, since they are problem-dependent. We will go through that process step-by-step, since it is a bit more complicated than in previous examples.

The first parts of this function are the same as before, however: setting up a suitable quadrature formula, initializing an FEValues object for the (vector-valued) finite element we use as well as the quadrature object, and declaring a number of auxiliary arrays. In addition, we declare the ever same two abbreviations: n_q_points and dofs_per_cell. The number of degrees of freedom per cell we now obviously ask from the composed finite element rather than from the underlying scalar Q1 element. Here, it is dim times the number of degrees of freedom per cell of the Q1 element, though this is not explicit knowledge we need to care about:

As was shown in previous examples as well, we need a place where to store the values of the coefficients at all the quadrature points on a cell. In the present situation, we have two coefficients, lambda and mu.

Well, we could as well have omitted the above two arrays since we will use constant coefficients for both lambda and mu, which can be declared like this. They both represent functions always returning the constant value 1.0. Although we could omit the respective factors in the assemblage of the matrix, we use them here for purpose of demonstration.

Like the two constant functions above, we will call the function right_hand_side just once per cell to make things simpler.

Now we can begin with the loop over all cells:

Next we get the values of the coefficients at the quadrature points. Likewise for the right hand side:

Then assemble the entries of the local stiffness matrix and right hand side vector. This follows almost one-to-one the pattern described in the introduction of this example. One of the few comments in place is that we can compute the number comp(i), i.e. the index of the only nonzero vector component of shape function i using the fe.system_to_component_index(i).first function call below.

(By accessing the first variable of the return value of the system_to_component_index function, you might already have guessed that there is more in it. In fact, the function returns a std::pair<unsigned int, unsigned int>, of which the first element is comp(i) and the second is the value base(i) also noted in the introduction, i.e. the index of this shape function within all the shape functions that are nonzero in this component, i.e. base(i) in the diction of the introduction. This is not a number that we are usually interested in, however.)

With this knowledge, we can assemble the local matrix contributions:

The first term is \(\lambda \partial_i u_i, \partial_j

v_j) + (\mu \partial_i u_j, \partial_j v_i)\). Note that shape_grad(i,q_point) returns the gradient of the only nonzero component of the i-th shape function at quadrature point q_point. The component comp(i) of the gradient, which is the derivative of this only nonzero vector component of the i-th shape function with respect to the comp(i)th coordinate is accessed by the appended brackets.

The second term is \((\mu \nabla u_i, \nabla

v_j)\). We need not access a specific component of the gradient, since we only have to compute the scalar product of the two gradients, of which an overloaded version of operator* takes care, as in previous examples.

Note that by using the ?: operator, we only do this if component_i equals component_j, otherwise a zero is added (which will be optimized away by the compiler).

Assembling the right hand side is also just as discussed in the introduction:

The transfer from local degrees of freedom into the global matrix and right hand side vector does not depend on the equation under consideration, and is thus the same as in all previous examples.

The solver does not care about where the system of equations comes, as long as it stays positive definite and symmetric (which are the requirements for the use of the CG solver), which the system indeed is. Therefore, we need not change anything.

The function that does the refinement of the grid is the same as in the step-6 example. The quadrature formula is adapted to the linear elements again. Note that the error estimator by default adds up the estimated obtained from all components of the finite element solution, i.e., it uses the displacement in all directions with the same weight. If we would like the grid to be adapted to the x-displacement only, we could pass the function an additional parameter which tells it to do so and do not consider the displacements in all other directions for the error indicators. However, for the current problem, it seems appropriate to consider all displacement components with equal weight.

The output happens mostly as has been shown in previous examples already. The only difference is that the solution function is vector valued. The DataOut class takes care of this automatically, but we have to give each component of the solution vector a different name.

To do this, the DataOut::add_vector() function wants a vector of strings. Since the number of components is the same as the number of dimensions we are working in, we use the switch statement below.

We note that some graphics programs have restriction on what characters are allowed in the names of variables. deal.II therefore supports only the minimal subset of these characters that is supported by all programs. Basically, these are letters, numbers, underscores, and some other characters, but in particular no whitespace and minus/hyphen. The library will throw an exception otherwise, at least if in debug mode.

After listing the 1d, 2d, and 3d case, it is good style to let the program die if we run upon a case which we did not consider. Remember that the Assert macro generates an exception if the condition in the first parameter is not satisfied. Of course, the condition false can never be satisfied, so the program will always abort whenever it gets to the default statement:

After setting up the names for the different components of the solution vector, we can add the solution vector to the list of data vectors scheduled for output. Note that the following function takes a vector of strings as second argument, whereas the one which we have used in all previous examples accepted a string there. (In fact, the function we had used before would convert the single string into a vector with only one element and forwards that to the other function.)

The run function does the same things as in step-6, for example. This time, we use the square [-1,1]^d as domain, and we refine it globally four times before starting the first iteration.

The reason for refining is a bit accidental: we use the QGauss quadrature formula with two points in each direction for integration of the right hand side; that means that there are four quadrature points on each cell (in 2D). If we only refine the initial grid once globally, then there will be only four quadrature points in each direction on the domain. However, the right hand side function was chosen to be rather localized and in that case, by pure chance, it happens that all quadrature points lie at points where the right hand side function is zero (in mathematical terms, the quadrature points happen to be at points outside the support of the right hand side function). The right hand side vector computed with quadrature will then contain only zeroes (even though it would of course be nonzero if we had computed the right hand side vector exactly using the integral) and the solution of the system of equations is the zero vector, i.e., a finite element function that is zero everywhere. In a sense, we should not be surprised that this is happening since we have chosen an initial grid that is totally unsuitable for the problem at hand.

The unfortunate thing is that if the discrete solution is constant, then the error indicators computed by the KellyErrorEstimator class are zero for each cell as well, and the call to Triangulation::refine_and_coarsen_fixed_number() will not flag any cells for refinement (why should it if the indicated error is zero for each cell?). The grid in the next iteration will therefore consist of four cells only as well, and the same problem occurs again.

The conclusion needs to be: while of course we will not choose the initial grid to be well-suited for the accurate solution of the problem, we must at least choose it such that it has the chance to capture the important features of the solution. In this case, it needs to be able to see the right hand side. Thus, we refine globally four times. (Any larger number of global refinement steps would of course also work.)

main functionAfter closing the Step8 namespace in the last line above, the following is the main function of the program and is again exactly like in step-6 (apart from the changed class names, of course).

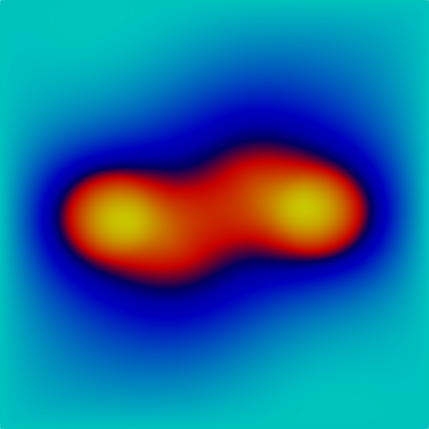

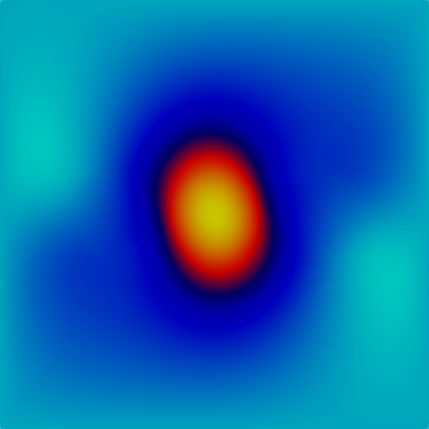

There is not much to be said about the results of this program, other than that they look nice. All images were made using VisIt from the output files that the program wrote to disk. The first two pictures show the \(x\)- and \(y\)-displacements as scalar components:

|  |

You can clearly see the sources of \(x\)-displacement around \(x=0.5\) and \(x=-0.5\), and of \(y\)-displacement at the origin.

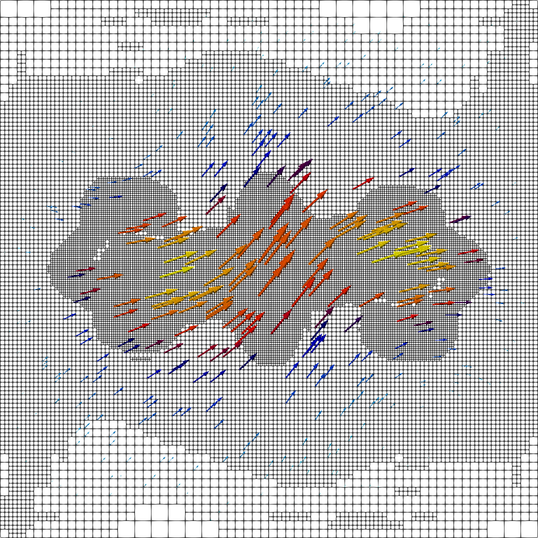

What one frequently would like to do is to show the displacement as a vector field, i.e., vectors that for each point illustrate the direction and magnitude of displacement. Unfortunately, that's a bit more involved. To understand why this is so, remember that we have just defined our finite element as a collection of two components (in dim=2 dimensions). Nowhere have we said that this is not just a pressure and a concentration (two scalar quantities) but that the two components actually are the parts of a vector-valued quantity, namely the displacement. Absent this knowledge, the DataOut class assumes that all individual variables we print are separate scalars, and VisIt and Paraview then faithfully assume that this is indeed what it is. In other words, once we have written the data as scalars, there is nothing in these programs that allows us to paste these two scalar fields back together as a vector field. Where we would have to attack this problem is at the root, namely in ElasticProblem::output_results(). We won't do so here but instead refer the reader to the step-22 program where we show how to do this for a more general situation. That said, we couldn't help generating the data anyway that would show how this would look if implemented as discussed in step-22. The vector field then looks like this (VisIt and Paraview randomly select a few hundred vertices from which to draw the vectors; drawing them from each individual vertex would make the picture unreadable):

We note that one may have intuitively expected the solution to be symmetric about the \(x\)- and \(y\)-axes since the \(x\)- and \(y\)-forces are symmetric with respect to these axes. However, the force considered as a vector is not symmetric and consequently neither is the solution.